Lecture 04

Reading material: Section 2.2 & 2.4 of the textbook.

Recap

Last time we discussed the basic form of sinusoidal waveforms, some elementary transformations that can be performed on signals, the derivation of Euler's formula (which relates sinusoids to complex numbers), and two main approaches to modeling biological systems. Today we continue with methods for characterizing signals through their statistics, and then look at some specific waveforms commonly encountered in analysis.

Mean, variance, root-mean-square, etc.

This time we start discussing some ways to characterize the signals we will see in our work. The primary method will be to compute certain statistical properties of the signals. Consider the following two signals.

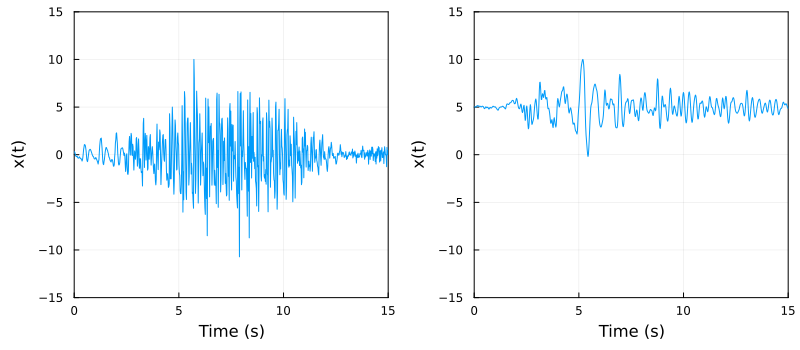

The two waveforms in the figure above display markedly different properties and behaviors.

The obvious difference is that one oscillates around zero while the other oscillates around the value 5. We could eliminate this difference by performing an elementary operation (a $y$-axis shift), but closer inspection reveals that more is different: the one on the left seems to have "more energy" while the one on the right seems more "subdued".

Two properties that characterize these differences are the root-mean-square value (a.k.a. the RMS value) and the variance, both of which we can contrast with the mean. Recall the usual definitions.

Mean

For the mean value, we have two roughly equivalent definitions, depending on whether the signal involved is a discrete-time or a continuous-time signal.
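In one common notation (the symbols $x_n$, $N$, $x(t)$ and $T$ are assumptions made here for illustration), a discrete-time signal with samples $x_1, \dots, x_N$ and a continuous-time signal $x(t)$ observed over $0 \le t \le T$ have means

$$\bar{x} = \frac{1}{N}\sum_{n=1}^{N} x_n \qquad\text{and}\qquad \bar{x} = \frac{1}{T}\int_{0}^{T} x(t)\,dt .$$

The two are roughly equivalent in the sense that, for samples taken at an even spacing $\Delta t = T/N$, the sum is a Riemann-sum approximation of the integral: $\frac{1}{T}\sum_n x_n\,\Delta t = \frac{1}{N}\sum_n x_n$.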

While the mean characterizes the average value of a signal over its period of observation, the amount of fluctuation about that average is measured by a quantity called the variance, defined as follows.

Variance & standard deviation

The square root of the variance is defined to be the standard deviation $\sigma$. It is then easy to see that, while the mean and standard deviation preserve the original units of the signal, the variance does not: it carries those units squared.
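Written out with assumed symbols (samples $x_n$, $n = 1, \dots, N$, with mean $\bar{x}$, or a continuous signal $x(t)$ on $0 \le t \le T$), a standard form of these definitions is

$$\sigma^2 = \frac{1}{N-1}\sum_{n=1}^{N} \left(x_n - \bar{x}\right)^2 \qquad\text{or}\qquad \sigma^2 = \frac{1}{T}\int_{0}^{T} \left(x(t) - \bar{x}\right)^2 dt, \qquad \sigma = \sqrt{\sigma^2}.$$

The $N-1$ in the discrete form is the sample-statistics normalization that these notes also use for the correlation coefficient; some texts divide by $N$ instead.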

Root Mean Square

A quantity related to standard deviation is the root-mean-square value of a signal which has its origins in voltage and power calculations. This quantity is defined as:
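In the same assumed notation (samples $x_n$, or a continuous signal $x(t)$ observed over $0 \le t \le T$), a standard way to write the RMS value is

$$x_{\mathrm{rms}} = \sqrt{\frac{1}{N}\sum_{n=1}^{N} x_n^2}$$

for discrete-time signals and

$$x_{\mathrm{rms}} = \sqrt{\frac{1}{T}\int_{0}^{T} x(t)^2\,dt} \tag{3}$$

for continuous-time signals. The label (3) is placed on the integral form on the assumption that this is the equation the later text refers to. The name spells out the recipe from the inside out: square the signal, take the mean, then take the root. Unlike the variance, no mean is subtracted before squaring.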

Traditionally when a signal was represented using a fluctuating voltage, the RMS value represented the constant voltage that would achieve the same power dissipation as the fluctuating one.

Thus the standard deviation is a term characterizing fluctuation about the mean whereas the RMS value is more of a statement about the essential magnitude of the signal.
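To make the contrast concrete, here is a minimal Python sketch that computes all four quantities for two made-up signals resembling the ones described earlier. The amplitudes, offset and sample count are illustrative assumptions, not values read from the figure.

```python
import math

def mean(x):
    """Arithmetic mean of a list of samples."""
    return sum(x) / len(x)

def variance(x):
    """Sample variance with the N - 1 normalization."""
    m = mean(x)
    return sum((v - m) ** 2 for v in x) / (len(x) - 1)

def std(x):
    """Standard deviation: square root of the variance."""
    return math.sqrt(variance(x))

def rms(x):
    """Root-mean-square value: no mean is subtracted before squaring."""
    return math.sqrt(sum(v * v for v in x) / len(x))

# Two hypothetical signals, each sampled over five full periods.
N = 1000
t = [n / N for n in range(N)]
left = [3.0 * math.sin(2 * math.pi * 5 * tk) for tk in t]         # large swing about 0
right = [5.0 + 0.5 * math.sin(2 * math.pi * 5 * tk) for tk in t]  # small swing about 5

for name, sig in (("left", left), ("right", right)):
    print(f"{name}: mean={mean(sig):.3f}  std={std(sig):.3f}  rms={rms(sig):.3f}")
```

The standard deviation separates the two signals by how much they fluctuate, while the RMS value of the shifted signal is dominated by its offset. With a $1/N$ normalization of the variance, the identity $x_{\mathrm{rms}}^2 = \bar{x}^2 + \sigma^2$ holds exactly, so the two measures coincide only for zero-mean signals.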

The following table lists the RMS values of some periodic oscillatory waveforms with characteristic amplitude $A$ (i.e. the maximum and minimum values are $\pm A$). In the case of periodic signals, it suffices to evaluate the RMS definition, Eq. (3), over one period, and for symmetric periodic signals the interval of integration can be even shorter.

| Wave Type | RMS | Wave Type | RMS | Wave Type | RMS |
|---|---|---|---|---|---|
| Sine | $A/\sqrt{2}$ | Square | $A$ | Triangle | $A/\sqrt{3}$ |
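The closed-form RMS values for a sine ($A/\sqrt{2}$), square ($A$) and triangle ($A/\sqrt{3}$) wave of amplitude $A$ can be checked numerically over a single period. The sketch below assumes an arbitrary amplitude and sample count, and samples each waveform at the midpoints of equal sub-intervals of one period.

```python
import math

def rms(samples):
    """Root-mean-square value of a list of samples."""
    return math.sqrt(sum(v * v for v in samples) / len(samples))

A = 2.0       # hypothetical amplitude
N = 100_000   # samples per period (assumed; any large even number works)

# Fraction of one period elapsed at each sample (interval midpoints).
phase = [(n + 0.5) / N for n in range(N)]

sine = [A * math.sin(2 * math.pi * p) for p in phase]
square = [A if p < 0.5 else -A for p in phase]
# Triangle: rises linearly from -A to +A in the first half period, then falls back.
triangle = [A * (4 * p - 1) if p < 0.5 else A * (3 - 4 * p) for p in phase]

for name, wave, expected in (
    ("sine", sine, A / math.sqrt(2)),
    ("square", square, A),
    ("triangle", triangle, A / math.sqrt(3)),
):
    print(f"{name}: numeric={rms(wave):.6f}  closed form={expected:.6f}")
```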

The deciBel (dB)

The decibel is a (rather arbitrary) "unit" used to express the intensity or power level of a signal by comparing it against a reference signal on a logarithmic scale. Named after the famed inventor Alexander Graham Bell, the bel turned out to be too large a unit to be useful, and it is a tenth of its value (hence the "deci") that caught on. Given a signal $x$, when we compare it against a reference signal $x_{\text{ref}}$ on the decibel scale, we express its intensity or amplitude as

$$x_{\text{dB}} = 10\log_{10}\left(\frac{x}{x_{\text{ref}}}\right)$$

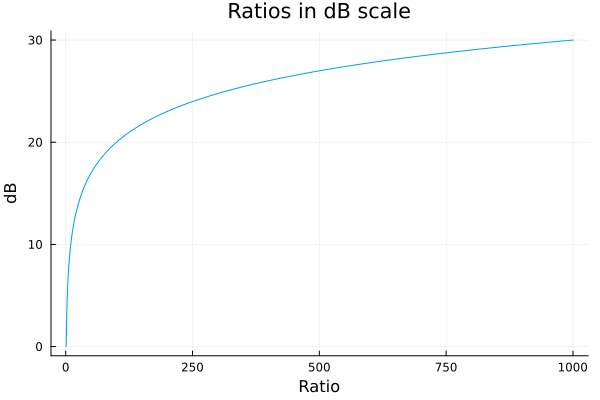

Defined as above, the quantity has no units: it is the logarithm of a dimensionless ratio. A typical use is to describe the signal-to-noise ratio (SNR) of a signal, as we will see in the following section. The logarithmic scale is useful when we want to show a wide range of values on the same graph, and it has the added benefit of turning multiplication into addition.

On the other hand, when decibels are used to characterize a single signal (taking the reference to be 1 in the signal's own units), the units involved are written as dB Volts, dB dynes, etc. to indicate that this is the case. The figure below plots the decibel value against the ratio of the signal to the reference. Here we can see that if the signal is 100 times the reference this translates to 20 dB, whereas if it is 1000 times the reference the value is 30 dB.

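In situations involving power calculations (where a squared term is commonly involved), the decibel for a signal $x$ and reference $x_{\text{ref}}$ becomes

$$10\log_{10}\left(\frac{x^2}{x_{\text{ref}}^2}\right) = 20\log_{10}\left(\frac{x}{x_{\text{ref}}}\right)$$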
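A short Python sketch of both conversions follows. The function names `to_db` and `from_db` and the `squared` flag are hypothetical helpers introduced for illustration; the factor of 10 follows the convention of these notes, in which a ratio of 100 corresponds to 20 dB.

```python
import math

def to_db(value, reference=1.0, squared=False):
    """Decibel level of value relative to reference.

    squared=False: 10 * log10(value / reference), the convention used in these notes.
    squared=True:  the same formula applied to the squared ratio, i.e. 20 * log10(...).
    """
    factor = 20.0 if squared else 10.0
    return factor * math.log10(value / reference)

def from_db(level_db, reference=1.0, squared=False):
    """Inverse of to_db: recover the value from its decibel level."""
    factor = 20.0 if squared else 10.0
    return reference * 10 ** (level_db / factor)

for ratio in (1, 10, 100, 1000):
    print(ratio, to_db(ratio), to_db(ratio, squared=True))

# Multiplying ratios corresponds to adding decibel levels.
print(to_db(100 * 1000), to_db(100) + to_db(1000))
```

Keeping the squared case as an explicit flag is a design choice for the sketch: it makes visible that the factor of 20 is the factor of 10 applied to a squared ratio rather than a separate definition.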

Signal to noise ratio (SNR)

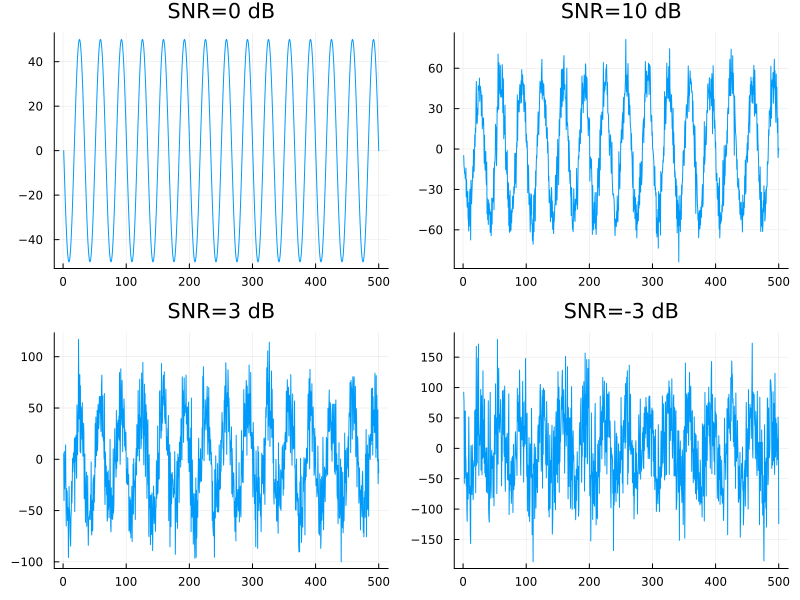

The majority of waveforms are a mixture of signal and noise. Signal and noise are relative concepts, and depend on the work at hand: the signal is what you want from the waveform, whilst the noise is everything else. Therefore it is useful to characterize the level of each when analyzing a signal and thus SNR is defined as:
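A common way to write this definition, assumed here to follow the decibel convention for squared quantities, uses the RMS values of the signal and noise components (the subscripted symbols are illustrative):

$$\mathrm{SNR}_{\mathrm{dB}} = 20\log_{10}\left(\frac{s_{\mathrm{rms}}}{n_{\mathrm{rms}}}\right) = 10\log_{10}\left(\frac{s_{\mathrm{rms}}^2}{n_{\mathrm{rms}}^2}\right), \qquad \frac{s_{\mathrm{rms}}}{n_{\mathrm{rms}}} = 10^{\mathrm{SNR}_{\mathrm{dB}}/20}.$$

Under this form, 0 dB means signal and noise have equal RMS values, +20 dB means the signal RMS is ten times that of the noise, and negative values mean the noise dominates.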

To develop intuition for SNR values, the following figure shows a noisy sinusoid of 30 Hz and 50 units amplitude at different levels of SNR.
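A waveform of this kind can be synthesized along the following lines. This is a sketch under stated assumptions: the noise is taken to be Gaussian, SNR is taken as $20\log_{10}$ of the RMS ratio, and the sampling rate, duration, seed and the particular SNR levels are arbitrary choices rather than the ones used for the figure.

```python
import math
import random

def rms(x):
    """Root-mean-square value of a list of samples."""
    return math.sqrt(sum(v * v for v in x) / len(x))

def noisy_sine(target_db, freq=30.0, amp=50.0, fs=3000.0, duration=2.0, seed=0):
    """Return (signal, noise): a sinusoid and Gaussian noise scaled to target_db."""
    rng = random.Random(seed)
    n = int(fs * duration)
    signal = [amp * math.sin(2 * math.pi * freq * k / fs) for k in range(n)]
    # Noise RMS that yields the requested SNR, from SNR_dB = 20 log10(s_rms / n_rms).
    noise_rms = rms(signal) / 10 ** (target_db / 20.0)
    noise = [rng.gauss(0.0, noise_rms) for _ in range(n)]
    return signal, noise

def measured_snr_db(signal, noise):
    """SNR in dB computed from the two components."""
    return 20.0 * math.log10(rms(signal) / rms(noise))

for target in (10, 3, -3, -10):   # hypothetical SNR levels in dB
    s, w = noisy_sine(target)
    waveform = [a + b for a, b in zip(s, w)]  # what one would actually observe
    print(f"target {target:+d} dB, measured {measured_snr_db(s, w):+.2f} dB")
```

The measured value differs slightly from the target because the noise RMS is estimated from a finite number of random samples.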

Some common waveforms

We already saw examples of sine, square and triangle waves in the figures from Lecture 2. Here we discuss a few more along with the mathematical notations used to represent them.

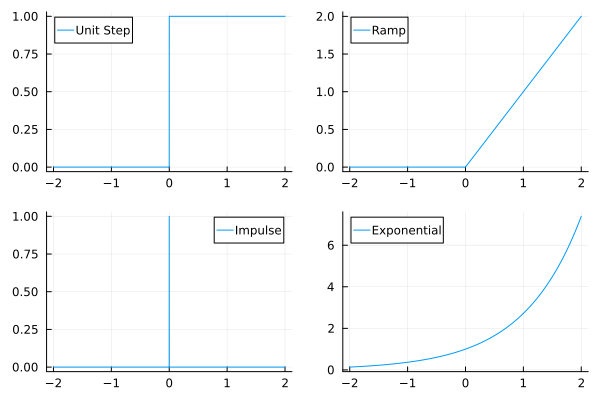

Step signal

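One of the simplest conceivable signals is one that is zero until a certain time $t_0$ and then takes on a constant value $A$ after $t_0$. We call such signals step signals. Mathematically we can write this as:

$$x(t) = \begin{cases} 0, & t < t_0 \\ A, & t \ge t_0 \end{cases}$$

Since we are familiar with amplitude scalings and time-axis shifts, it simplifies matters to write everything in terms of the unit step function $u(t)$:

$$u(t) = \begin{cases} 0, & t < 0 \\ 1, & t \ge 0 \end{cases} \qquad\text{so that}\qquad x(t) = A\,u(t - t_0)$$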

Impulse signal

A special analytical (and idealized) signal, defined and used for its ability to simplify concepts, is the so-called impulse function, defined by the following heuristic[1]. The impulse function or Dirac delta function $\delta(t)$ is one which satisfies

$$\delta(t) = 0 \quad \text{for } t \neq 0$$

subject to the condition:

$$\int_{-\infty}^{\infty} \delta(t)\,dt = 1$$

While it is not immediately obvious why such an abstraction should be useful, it helps to think about what the derivative of the unit step function above should be. We will see concrete examples of how the impulse function is used to simplify modeling in future lectures.

Ramp signal

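Speaking of derivatives of the unit step function, one is naturally motivated to ask what its integral should be as well. With $u$ denoting the unit step function, the ramp function is mathematically defined as:

$$r(t) = \int_{-\infty}^{t} u(\tau)\,d\tau = \begin{cases} 0, & t < 0 \\ t, & t \ge 0 \end{cases}$$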
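The relationships among the step, impulse and ramp can be explored numerically. The sketch below assumes a sample spacing of 1 ms and uses the simplest discrete approximations (a running sum for the integral, a forward difference for the derivative). It illustrates the idea rather than giving a rigorous treatment of the impulse.

```python
def unit_step(t):
    """Unit step u(t): 0 before t = 0, 1 from t = 0 onward."""
    return 1.0 if t >= 0 else 0.0

def ramp(t):
    """Ramp r(t) = t * u(t)."""
    return t * unit_step(t)

dt = 0.001                                     # hypothetical sample spacing in seconds
times = [k * dt for k in range(-1000, 2001)]   # -1 s to 2 s
u = [unit_step(t) for t in times]

# Running integral of the step (left rectangle rule): should track the ramp.
running_integral = []
total = 0.0
for value in u:
    running_integral.append(total)
    total += value * dt

# Forward-difference derivative of the step: a single tall, narrow spike.
derivative = [(u[k + 1] - u[k]) / dt for k in range(len(u) - 1)]
spike_area = sum(d * dt for d in derivative)

print("largest |integral - ramp|:", max(abs(a - ramp(t)) for a, t in zip(running_integral, times)))
print("spike height:", max(derivative), " spike area:", spike_area)
```

As `dt` shrinks, the spike grows taller and narrower while its area stays at 1, which is the heuristic behind treating the impulse as the derivative of the unit step.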

Exponential signal

While we have seen the exponential function in the context of Lecture 03, when talking of derivatives and integrals, the exponential function has yet another characterization as the function whose derivative is itself! Let's see this in action.

Actually more is true: writing the requirement as $f'(t) = f(t)$, it follows that any $n$-th derivative satisfies $f^{(n)}(t) = f(t)$. Thus $f$ must be an infinitely differentiable function admitting a Taylor series $f(t) = \sum_{n=0}^{\infty} a_n t^n$, where the $a_n$ usually depend on successive derivatives but now are related via $f' = f$.

Differentiating the Taylor expansion once we get that a relationship must hold between the $a_n$; namely, _____ . For example, with $n = 0$ we get $a_1 = a_0$ and with $n = 1$ we get $a_2 = a_1/2$. A little recursion then gives that $a_n = a_0/n!$. Thus the Taylor expansion becomes:

$$f(t) = a_0 \sum_{n=0}^{\infty} \frac{t^n}{n!}$$

But the infinite sum on the right-hand side should be familiar from calculus! Thus we get that the functions $f(t) = a_0 e^{t}$ are functions whose derivatives satisfy $f' = f$, with $a_0$ being defined by $a_0 = f(0)$.
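Both halves of this argument can be checked numerically: partial sums of the series $\sum_n t^n/n!$ approach $e^t$, and a constant multiple of $e^t$ matches its own derivative. In the sketch below the helper names, the step size `h` and the test point are arbitrary assumptions.

```python
import math

def exp_partial_sum(t, terms, a0=1.0):
    """a0 times the first `terms` terms of the series sum of t**n / n!."""
    return a0 * sum(t ** n / math.factorial(n) for n in range(terms))

def numerical_derivative(f, t, h=1e-6):
    """Central-difference approximation of f'(t)."""
    return (f(t + h) - f(t - h)) / (2 * h)

# Partial sums at t = 1 approach e as more terms are included.
for terms in (2, 5, 10, 20):
    print(terms, exp_partial_sum(1.0, terms), math.e)

# A constant multiple of exp(t) matches its own derivative (up to numerical error).
def f(t):
    return 2.5 * math.exp(t)

print(f(0.7), numerical_derivative(f, 0.7))
```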

____ - Homework material.

Moreover, this characterization is unique; that is, (up to some caveats) constant multiples of the exponential function $e^{t}$ are the only functions that equal their own derivatives.

You will show in your homework the assumption leads to a contradiction. 😊

The figure below shows the three signals we have discussed so far:

Correlation & covariance

Given two signals, one natural question to ask is how similar are the signals? Can we associate some number or measure to a signal or a pair of signals that can tell us how similar they are? We have already defined the mean and variance for a signal. Thus one naturally asks if they are sufficient.

As the answer to the above exercise shows, it is possible to have drastically different and mismatched signals that share the same mean and standard deviation but differ elementwise. The problem here is the mean and variance are properties of a single signal whereas for similarity we need to look at both signals.
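One simple way to build such a pair, offered here as an illustration rather than as the intended exercise answer, is to take a signal and scramble the order of its samples. The mean and standard deviation depend only on the set of values, so they are unchanged, yet the two signals no longer agree sample by sample.

```python
import math
import random

def mean(x):
    """Arithmetic mean of a list of samples."""
    return sum(x) / len(x)

def std(x):
    """Sample standard deviation (N - 1 normalization)."""
    m = mean(x)
    return math.sqrt(sum((v - m) ** 2 for v in x) / (len(x) - 1))

x = [math.sin(2 * math.pi * k / 50) for k in range(200)]  # smooth sinusoid
y = list(x)
random.Random(1).shuffle(y)                                # same values, scrambled order

largest_gap = max(abs(a - b) for a, b in zip(x, y))
print("means:", mean(x), mean(y))
print("standard deviations:", std(x), std(y))
print("largest sample-by-sample difference:", largest_gap)
```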

Enter correlation.

Correlation

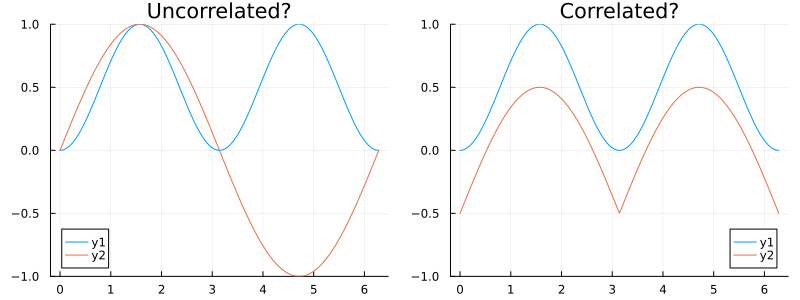

In words, two correlated signals exhibit behavior that seems to move in tandem; i.e. they tend to increase or decrease at the same time. Consider the two figures:

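It is clear that the two signals in the right pane move in tandem, while the situation is less clear for the pair on the left. Mathematically we capture this essential observation via the following definition: the Pearson correlation coefficient between two sampled signals $x$ and $y$, each with $N$ samples, is given as

$$r_{xy} = \frac{1}{N-1}\sum_{n=1}^{N} \left(\frac{x_n - \bar{x}}{\sigma_x}\right)\left(\frac{y_n - \bar{y}}{\sigma_y}\right)$$

where $\bar{x}, \bar{y}$ are the means and $\sigma_x, \sigma_y$ the standard deviations of the two signals. This is a quantity restricted to be between $-1$ and $+1$, with the two extremes representing perfect linear anti-correlation and perfect linear correlation respectively, and the middle value (zero) implying no linear correlation. Note the normalization by $N-1$ for sample statistics, similar to the definition for variance.

To see where the bounds come from, note that $(N-1)\,\sigma_x^2 = \sum_n (x_n - \bar{x})^2$, so that the coefficient can be rewritten as

$$r_{xy} = \sum_{n=1}^{N} \tilde{x}_n\,\tilde{y}_n, \qquad \tilde{x}_n = \frac{x_n - \bar{x}}{\sqrt{\sum_k (x_k - \bar{x})^2}}, \qquad \tilde{y}_n = \frac{y_n - \bar{y}}{\sqrt{\sum_k (y_k - \bar{y})^2}}.$$

Thus $\tilde{x} \cdot \tilde{y}$ is the dot product of two unit vectors, and thus equal to the cosine of the "angle" (in whatever number of dimensions) between them, and thus restricted between $-1$ and $+1$, which then carries over to $r_{xy}$.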
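The sketch below computes the Pearson coefficient in two ways: from the sample standard deviations with the $N-1$ normalization, and as the cosine of the angle between the mean-removed signals. The function names and test signals are assumptions chosen for illustration.

```python
import math

def mean(x):
    """Arithmetic mean of a list of samples."""
    return sum(x) / len(x)

def pearson(x, y):
    """Pearson correlation using sample standard deviations (N - 1 normalization)."""
    n = len(x)
    mx, my = mean(x), mean(y)
    sx = math.sqrt(sum((v - mx) ** 2 for v in x) / (n - 1))
    sy = math.sqrt(sum((v - my) ** 2 for v in y) / (n - 1))
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / ((n - 1) * sx * sy)

def cosine_of_angle(x, y):
    """Cosine of the angle between the mean-removed signals, viewed as vectors."""
    mx, my = mean(x), mean(y)
    dx = [v - mx for v in x]
    dy = [v - my for v in y]
    dot = sum(a * b for a, b in zip(dx, dy))
    return dot / (math.sqrt(sum(a * a for a in dx)) * math.sqrt(sum(b * b for b in dy)))

x = [math.sin(2 * math.pi * k / 100) for k in range(400)]
in_tandem = [2.0 * v + 1.0 for v in x]      # scaled and shifted copy of x
opposite = [-0.5 * v + 3.0 for v in x]      # flipped copy of x
quadrature = [math.cos(2 * math.pi * k / 100) for k in range(400)]

for name, y in (("in tandem", in_tandem), ("opposite", opposite), ("quadrature", quadrature)):
    print(f"{name}: r = {pearson(x, y):+.6f}, cosine = {cosine_of_angle(x, y):+.6f}")
```

Scaling or shifting a signal does not change the coefficient, which is why the scaled copy still gives a value of 1. The sine and cosine pair is clearly related yet has a coefficient near zero, a reminder that the coefficient only measures linear association at zero time lag.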

Covariance

When written absent the normalization factor, we get the covariance between two vectors:

Often people also use the term "correlation" to refer to an un-normalized correlation, especially in the context of continuous time signals:
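In one common convention (the symbols, and in particular the $1/T$ factor in the continuous-time form, are assumptions, since texts differ on the normalization), the two un-normalized quantities described above can be written as

$$\sigma_{xy} = \frac{1}{N-1}\sum_{n=1}^{N} (x_n - \bar{x})(y_n - \bar{y}), \qquad \mathrm{corr}(x, y) = \frac{1}{T}\int_{0}^{T} x(t)\,y(t)\,dt .$$

Dividing the covariance by the product of the two standard deviations recovers the Pearson coefficient, $r_{xy} = \sigma_{xy} / (\sigma_x \sigma_y)$, and setting $y = x$ in the covariance recovers the variance $\sigma_x^2$.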

Distance measures

While in the above we discussed correlation and covariance, which measure how much signals vary together, it is also quite useful to have a measure of "distance" (akin to real-life Euclidean distance) between objects (in this case signals) of interest in whichever mathematical space we are working in. This then allows all sorts of maximization, minimization, etc. Mathematically what we need is a function $d$ satisfying the following: given signals $x$, $y$ and $z$,

$$d(x, y) \ge 0 \ \text{ for all } x, y, \ \text{ and } \ d(x, y) = 0 \ \text{ if and only if } \ x = y,$$

$$d(x, y) = d(y, x),$$

$$d(x, z) \le d(x, y) + d(y, z).$$

The last requirement (the triangle inequality) is not obvious, but it is a desirable property for a natural notion of distance (e.g. stopping at the grocery store on the way home should take at least as long as going straight home). Functions that do not satisfy all of the above requirements are not true metrics; for example, a function that can be zero for two distinct signals but satisfies the remaining requirements is called a pseudo-metric.
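As a concrete instance, the Euclidean distance between two equally long sampled signals satisfies all of these requirements, while the absolute difference of the means does not. The sketch below spot-checks the requirements on three random signals. The function names are hypothetical, and a check on a few examples illustrates the properties rather than proving them.

```python
import math
import random

def euclidean(x, y):
    """Euclidean distance between two equally long sampled signals."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))

def mean_gap(x, y):
    """Absolute difference of the means: zero for many pairs of distinct signals."""
    return abs(sum(x) / len(x) - sum(y) / len(y))

rng = random.Random(0)
x, y, z = ([rng.uniform(-1.0, 1.0) for _ in range(100)] for _ in range(3))

checks = {
    "zero_on_identical": euclidean(x, x) == 0.0,
    "positive_on_distinct": euclidean(x, y) > 0.0,
    "symmetric": euclidean(x, y) == euclidean(y, x),
    "triangle": euclidean(x, z) <= euclidean(x, y) + euclidean(y, z),
}
print(checks)

# mean_gap fails the "if and only if" part: distinct signals, zero distance.
print(mean_gap([1.0, 2.0, 3.0], [3.0, 2.0, 1.0]))
```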

| [1] | It is a heuristic because a mathematically rigorous definition of the Dirac function necessitates the invocation of measure theory or the theory of distributions. |