Administrivia

Course Outline

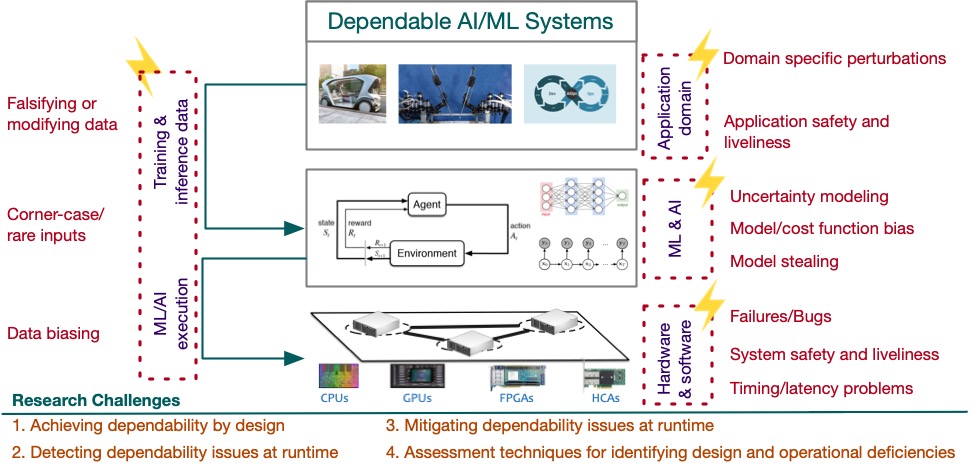

The emergence of AI systems and their ubiquitous adoption in automating tasks that involve humans in critical application domains (e.g., autonomous vehicles, medical assistants/devices, manufacturing, agriculture, and smart buildings) make it paramount that we be able to trust these technologies. In a broad sense, a trustworthy AI system must be dependable (i.e., ensure the safety, resilience, robustness, and security of both itself and its operational environment) and reasonable (i.e., provide the reasoning behind the decisions and actions it produces). Indeed, the absence of such features not only makes people reluctant to deploy the technology in the field despite successful demonstrations, but also leaves systems vulnerable to security attacks and crashes that ultimately affect human safety. As Schneier observes, computers have traditionally outperformed humans only in speed, scale, and scope, whereas humans excelled at thinking, reasoning, adapting, and understanding.

However, AI has changed this landscape: computers can now infer relationships, discover patterns, react, and adapt to change while retaining their strengths in speed, scale, and scope. Although AI-based applications were long held back by their high computational cost, recent advances in computing (high-speed networks, large-scale data storage, and faster computation) have ushered in a new era of smart systems. Consequently, designing dependable, trustworthy, and interpretable systems is an active area of research. In this course, we will discuss recent techniques for designing dependable and interpretable ML/AI, focusing especially on the safety, security, and reliability aspects of emerging applications. Through innovative projects and research paper presentations, students will learn the challenges and opportunities in designing and validating such ML-driven autonomous systems. The course will also feature several guest lectures from domain experts, who will present novel approaches being developed to make AI systems more reliable and trustworthy.

Prerequisites

Basic probability (ECE 313 or equivalent), machine learning (ECE 498DS, CS 446, or equivalent), and basic programming skills (e.g., Python, R, or Matlab) are essential.

Evaluation

We will compute the final grade using the following table:

| Activity | Weight | Details |
|---|---|---|
| Paper Reviews | 10% | |
| Paper Presentation | 20% | |
| Class Participation | 20% | Group Discussion (5%) + Attendance (5%) + Paper Discussion Lead (10%) |
| Homework Assignments | 10% | Review and critique the guest lectures |
| Course Project | 40% | Proposal (5%) + Mid-term Report (10%) + Final Presentation and Report (25%) |
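As an unofficial illustration of how the weights in the table combine, the sketch below computes a final percentage as a plain weighted average. The weights come from the table (with Class Participation and Course Project split into their listed parts). The key names, the 0-100 scale for each component, and the weighted-average assumption itself are hypothetical choices for this sketch, not the course's grading script.

```python
from typing import Mapping

# Percentage weights from the evaluation table, with Class Participation and
# Course Project split into their listed sub-components.
# The key names are hypothetical labels chosen for this sketch.
WEIGHTS = {
    "paper_reviews": 10,
    "paper_presentation": 20,
    "group_discussion": 5,
    "attendance": 5,
    "paper_discussion_lead": 10,
    "homework": 10,
    "project_proposal": 5,
    "midterm_report": 10,
    "final_presentation_and_report": 25,
}
assert sum(WEIGHTS.values()) == 100  # the weights cover the whole grade


def final_grade(scores: Mapping[str, float]) -> float:
    """Weighted average of component scores, each assumed to be on a 0-100 scale."""
    missing = set(WEIGHTS) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weight * scores[name] / 100 for name, weight in WEIGHTS.items())


if __name__ == "__main__":
    # Hypothetical student: 90 on every component except a 70 on the mid-term report.
    example = {name: 90.0 for name in WEIGHTS}
    example["midterm_report"] = 70.0
    print(final_grade(example))  # prints 88.0
```

Because the mid-term report carries 10% of the grade, losing 20 points on it lowers the final grade by 2 points (from 90 to 88).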

Credit Policy

- Attendance at all lectures is required. Class participation, which includes in-class activities and group discussions, will be evaluated.

- Late submission policy: late submissions are not accepted.

- While we encourage discussion, submitting identical material is not allowed and will incur appropriate penalties.

Paper Presentation & Reviews

Two papers will be presented during each session. Each paper will be presented by one student, and another student will lead the post-presentation discussion. Students need to sign up for presentations, while the instructors will assign the discussion leads.

Students who are not presenting in a given session are expected to write short reviews on Piazza of the papers being presented. Rather than summarizing the paper, try to engage in discussion with your classmates.

Paper Reviews

- Description: 2 pages max. One paragraph on the core idea of the paper, followed by a list of the pros and cons of the approach and any questions, criticisms, or thoughts about the paper.

- Grading criteria: argumentative critique (pros/cons) and creative comments on addressing issues or improving the paper.

- Due: 10 pm on the night before the presentation.

Paper Presentation

- Sign-up: By 27 Aug (Friday) (Sign-up sheet)

- Description: 10-12 slides max (20 min for the paper, 5 min for critique, 5 min for questions). 2-3 slides on motivation and background, 3-5 slides on the core ideas of the paper, 2-3 slides on experimental data, and 3-5 slides on your thoughts, criticisms, questions, and discussion points about the paper. Include slides summarizing the Piazza discussion about the paper.

- Due: 9 am on the day of the presentation (upload to Canvas).

Discussion Lead

- Description: Lead the discussion with 3-4 key points analyzing and critiquing the paper (5 min for critique, 5 min for questions).

Course Project

The final project is an open-ended research project targeting the design and development of dependable AI/ML systems, intended to deepen the class's understanding of the course material. Projects can include evaluating AI/ML systems using analytical models or measurements, applying existing techniques in reliable and trustworthy AI to new scenarios, or analyzing vulnerabilities using simulators and fault-injection methods. We will also provide a list of project topics for reference, but you are free to come up with your own ideas.

Students will form groups of two for the final project early in week 4. One member of each team should sign up the team on Piazza by 22 Sep. We will also start a Piazza post to help you find teammates. Students who have not joined a group by the deadline will be assigned to one by the TA.

- Proposal: An initial project proposal presentation (due in early October; date to be announced).

- Mid-term Report: A short presentation on your initial progress, including a critique of the literature.

- Final Presentation: Covers the initial goals, method/approach, results achieved, and major accomplishments.

- Final Report

Some of the project topics from last year:

- Modelling fault-tolerant behavior of the brain during neuronal injury in Alzheimer’s disease

- Network Traffic Management using Fair Decision-Making

- Program Assertions for Deep Neural Networks

- Fault-injection and performance assessment of UAVs

- Leveraging importance sampling for rare event simulation in autonomous driving

Relevant Venues

- International Conference on Dependable Systems and Networks (DSN)

- Neural Information Processing Systems (NeurIPS)

- International Conference on Machine Learning (ICML)

- International Conference on Computer Aided Verification (CAV)

- IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)

- USENIX Security Symposium

- IEEE Symposium on Security and Privacy