Lecture 05

Reading material: Sections 2.2 & 2.4 of the textbook.

Recap

Last time we discussed some common types of signals, the decibel scale, and the definitions of some common statistics related to signals (mean, variance, SNR, correlation, etc.). Today we continue with the same topics, with a bit more emphasis on implementation details, and try to close out Chapter 2.

Correlations & orthogonality

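Recall from last time the definition we had for Pearson correlation:

$$r_{xy} = \frac{\mathrm{cov}(x, y)}{\sigma_x \sigma_y} = \frac{\sum_{n=1}^{N} (x_n - \bar{x})(y_n - \bar{y})}{(N-1)\,\sigma_x \sigma_y}$$

The quantity in the numerator after the second equality is often written as

$$\langle x - \bar{x},\; y - \bar{y} \rangle, \qquad \text{where} \qquad \langle u, v \rangle = \sum_{n=1}^{N} u_n v_n,$$

and called the inner product between two vectors. We say two vectors are orthogonal if their inner product is zero.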

Orthogonality of vectors and signals is an important concept that will show up later. For now it suffices to understand why the term orthogonality is used. Let $\mathbf{v}$ be a vector on the plane. The animation below shows this vector along with a second vector constructed from it. Note that the two are perpendicular, i.e. their inner product is zero!
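As a concrete illustration (the particular construction here is an assumption chosen to make the idea tangible, not necessarily the one shown in the animation), take $\mathbf{v} = (a, b)$ and rotate it by 90 degrees to get $\mathbf{w} = (-b, a)$. Their inner product vanishes for any choice of $a$ and $b$:

$$\langle \mathbf{v}, \mathbf{w} \rangle = a \cdot (-b) + b \cdot a = 0$$

So "inner product equal to zero" coincides with the everyday geometric notion of two arrows meeting at a right angle.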

This concept extends to higher dimensions as well as to continuous time signals[1]. Note that the choice of inner product can change depending on the type of "vector" as long as it satisfies some mathematical properties. For example, in the below exercise, we make use of an inner product defined via integration for continuous time signals.

which evaluates to

The above result is the cornerstone of the Fourier series expansion that we will see in later chapters. For now we will leave the reader to work out the following.

Hint: Note that can be written as:

and something similar applies to as well.
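As a sketch of how such integral inner products are typically evaluated, assume the inner product $\langle f, g \rangle = \int_0^{2\pi} f(t)\, g(t)\, dt$ and integers $m$ and $n$ (the interval and the pair of functions are chosen here only for illustration). A product-to-sum identity turns the product into terms that each integrate to zero over a whole number of periods:

$$\langle \sin(mt), \cos(nt) \rangle = \int_0^{2\pi} \sin(mt)\cos(nt)\, dt = \frac{1}{2}\int_0^{2\pi} \big[\sin((m+n)t) + \sin((m-n)t)\big]\, dt = 0$$

The same strategy (rewrite the product as a sum, then integrate term by term) is what the hint above is pointing towards.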

The problem with correlation

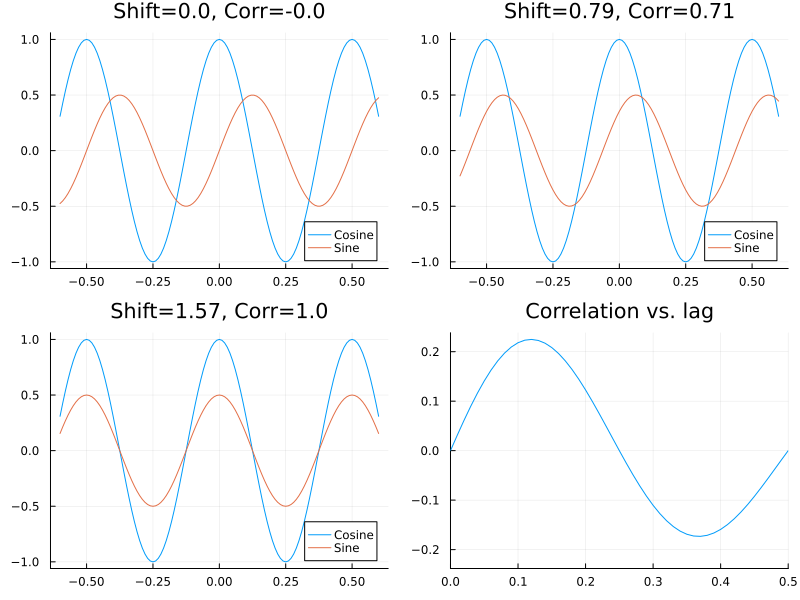

We will revisit the topic of orthogonality of sines & cosines later, but for now we are faced with a problem. Given an unknown signal, if we compute its correlation with a reference, the value of the correlation can be zero even if the waveforms are identical (consider a sine wave and its time shift by a quarter period, a cosine wave, which is orthogonal to it). Thus, the correlation between a signal and its own time shift might be zero, as illustrated in the below figure!

As we can see in the above figure we have a sine wave and a cosine wave of 2 Hz frequency with the panels showing the sine wave shifted by and units and yielding different levels of correlation with the reference cosine wave.
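The effect can be reproduced numerically with a few lines of MATLAB. This is a minimal sketch: the sampling rate and the three shift values are assumptions chosen for illustration and are not necessarily those used in the figure. For a sine advanced by a phase $\phi$, the un-normalized correlation with the cosine over whole periods is $\tfrac{1}{2}\sin\phi$, so it goes from 0 (no shift) to 0.5 (quarter-period shift).

```matlab
fs = 1000;                        % assumed sampling rate (Hz)
t = (0:fs-1)'/fs;                 % one second of samples (two full periods at 2 Hz)
f = 2;                            % frequency (Hz), as in the figure
ref = cos(2*pi*f*t);              % reference cosine wave
shifts = [0 0.0625 0.125];        % hypothetical time shifts (s)
r = zeros(size(shifts));
for i = 1:numel(shifts)
    x = sin(2*pi*f*(t + shifts(i)));   % sine wave advanced by shifts(i) seconds
    r(i) = mean(x.*ref);               % un-normalized correlation with the reference
end
disp(r)                           % approximately 0, 0.3536, 0.5
```

The waveform is the same in all three cases; only the shift changes, yet the correlation ranges from zero to its maximum.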

To get around this limitation, it is common to compute correlations between a signal and all possible shifts of the reference and then use the maximal correlation found. The shifts involved are often called lags, and the procedure of computing the correlation between a signal and lagged versions of the reference is commonly called cross-correlation. Mathematically, for each lag $k$ (or lag variable $\tau$ in continuous time) we have:
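One common way to write the un-normalized version is sketched below. The sign convention (here the first signal $x$ is the one being shifted, as in the MATLAB listing later in this lecture), the $1/N$ and $1/T$ scalings, and the symbols $N$ (number of samples) and $T$ (signal duration) are assumptions; conventions vary between texts.

$$r_{xy}[k] = \frac{1}{N} \sum_{n=1}^{N} x[n+k]\, y[n], \qquad r_{xy}(\tau) = \frac{1}{T} \int_0^T x(t+\tau)\, y(t)\, dt$$

Setting $k = 0$ (or $\tau = 0$) recovers the ordinary un-normalized correlation between the two signals.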

The very last plot above and the equations deserve a little bit of explanation. The bottom right figure shows correlation values (un-normalized) on the y-axis and lags on the x-axis. The last plot is thus generated by computing many correlation values at each possible lag.

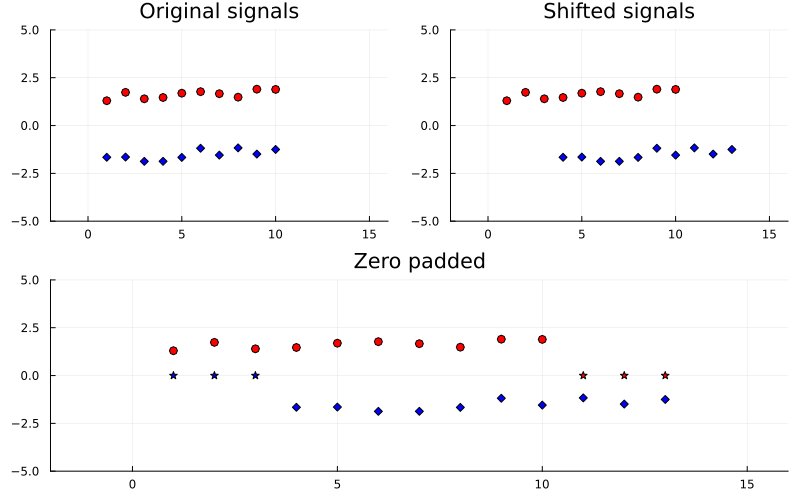

Given two signals, as in the figure below, it is not immediately clear how to implement the above formula, since shifting the signal leaves undefined which multiplications should be performed for certain indices. A common convention is to zero pad the signals after shifting so that the correlation is still computed between signals of the same length. Thus, in common implementations, the lag vector ranges from $-(N-1)$ to $N-1$ for signals of length $N$. The figure below indicates zero padding being performed for a lag of 3 units.

It is because of this implementation detail, i.e. zero padding, that the absolute value of the negative correlation at 0.375 seconds in the previous figure is less than the positive correlation at 0.125 seconds.
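The zero-padding step for a single lag can be sketched as follows. The two vectors are hypothetical, and the convention that a positive lag advances `x` is an assumption chosen to match the `crosscorr` listing in the MATLAB implementation section. Note how the padded zeros remove products from the sum, which is why correlations at larger lags tend to shrink in magnitude.

```matlab
x = [1 2 3 4 5]';                 % hypothetical signal
y = [1 2 3 4 5]';                 % hypothetical reference (same length)
k = 3;                            % lag in samples
xs = [x(k+1:end); zeros(k,1)];    % x advanced by k samples, zero padded to length 5
r0 = mean(x.*y);                  % correlation at lag 0: all 5 products contribute
r3 = mean(xs.*y);                 % correlation at lag 3: only 2 nonzero products remain
disp([r0 r3])                     % 11 and 2.8
```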

Note that while we visualized how to perform cross-correlation with zero padding for two equal-sized vectors, nothing prevents us from adopting the same procedure to find lagged cross-correlations between vectors of differing lengths. The next exercise illustrates this case.

Question: Find the lagged cross-correlation between

Answer: For simplicity we calculate the un-normalized correlation. Given vectors of lengths $N$ and $M$, we expect to perform $N + M - 1$ lagged correlations. These 7 correlation calculations are shown below along with their corresponding lags:
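The same procedure can be sketched in MATLAB. The vectors below are hypothetical (lengths 5 and 3, which also give 7 lags) and are not necessarily those of the exercise; the longer vector is zero padded and the shorter one slides across it.

```matlab
x = [1 2 3 4 5]';                 % hypothetical longer vector (length N = 5)
y = [1 0 -1]';                    % hypothetical shorter vector (length M = 3)
N = length(x);
M = length(y);
nlags = N + M - 1;                % number of lagged correlations (7 here)
xp = [zeros(M-1,1); x; zeros(M-1,1)];   % zero pad x on both sides
rxy = zeros(1, nlags);            % preallocate outputs
lags = zeros(1, nlags);
for k = 1:nlags
    rxy(k) = sum(xp(k:k+M-1).*y); % un-normalized correlation at this shift
    lags(k) = k - M;              % lags run from -(M-1) to N-1
end
disp([lags; rxy])                 % lags -2..4, rxy = -1 -2 -2 -2 -2 4 5
```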

MATLAB implementation

The following code is taken from CSSB and is an implementation of the lagged cross-correlation function for vectors of the same length with zero-padding.

```matlab
function [rxy, lags] = crosscorr(x, y)
% Function to perform cross correlation similar to MATLAB's xcorr
% This version assumes x and y are vectors of the same length
% Assumes maxlags = 2N - 1
% Inputs
%   x     signal for cross correlation
%   y     signal for cross correlation
% Outputs
%   rxy   cross correlation function
%   lags  shifts corresponding to rxy

lx = length(x);                            % Get length of one signal (assume both the same length)
maxlags = 2*lx - 1;                        % Compute maxlags from data length
x = [zeros(lx-1,1); x(:); zeros(lx-1,1)];  % Zero pad signal x
rxy = zeros(1, maxlags);                   % Preallocate outputs
lags = zeros(1, maxlags);
for k = 1:maxlags
    x1 = x(k:k+lx-1);                      % Shift signal
    rxy(k) = mean(x1.*y(:));               % Correlation (Eq. 2.30)
    lags(k) = k - lx;                      % Compute lags (useful for plotting)
end
```

Introduction to filtering

In this section we change gears a bit and discuss filtering, a rich topic in its own right. Recall that signal and noise are relative terms; i.e. what is pertinent to our analysis is our signal and everything else can be termed noise. It is no surprise then that we seek techniques to eliminate noise, or to reduce its level relative to the signal, in our observation or analysis of systems.

Averaging to reduce noise

One easy way to reduce variability in our observations is to take multiple measurements and then average them. This ties in well with our intuition that as we consider more and more samples/observations/measurements, the likelihood of approaching the true distribution of the data increases.

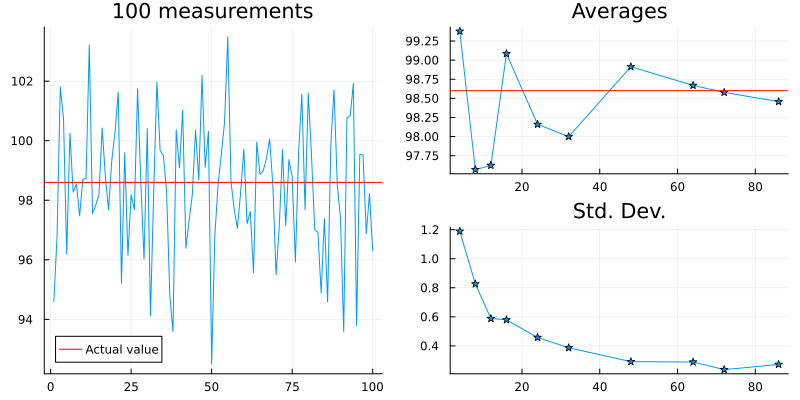

Consider the following plot which consists of multiple measurements of body temperature taken with a thermometer that is only accurate to F (not a very good thermometer). The true body temperature is marked with a blue line.

The right side panels show how increasing the number of samples and averaging them lets us approach the true value over repeated measurements. Further, it can be shown that the "error" scales as $1/\sqrt{N}$ when considering the mean of $N$ measurements. This observation can be verified in the above plot.
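The scaling can also be checked with a small simulation. This is a minimal sketch under stated assumptions: the true temperature, the noise level `sigma`, the Gaussian noise model, and the number of repeated experiments are all hypothetical choices, not values taken from the plot.

```matlab
rng(0);                           % fix the random seed for repeatability
trueTemp = 98.6;                  % assumed true body temperature (F)
sigma = 1;                        % assumed measurement noise standard deviation (F)
Ns = [1 10 100 1000];             % number of measurements averaged
trials = 2000;                    % repeated experiments for each N
err = zeros(size(Ns));
for i = 1:numel(Ns)
    meas = trueTemp + sigma*randn(Ns(i), trials);  % each column is one experiment
    err(i) = std(mean(meas, 1));                   % spread of the averaged estimate
end
disp([Ns' err' sigma./sqrt(Ns')]) % columns: N, observed spread, sigma/sqrt(N)
```

The second and third columns of the printed table should agree closely: every tenfold increase in the number of measurements shrinks the spread of the average by a factor of about $\sqrt{10}$.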

Ensemble averaging

CSSB (and therefore our course) refers to constructing a single timeseries/signal out of multiple ones by averaging them in time (technically pointwise) as ensemble averaging.

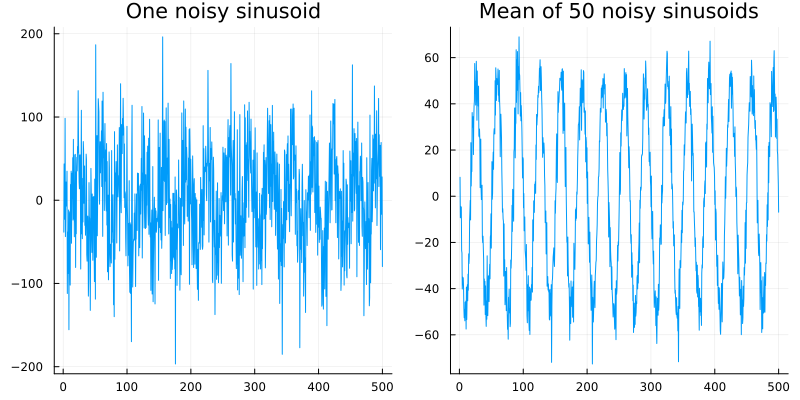

The idea here is the same: averaging reduces variability and thus might enable one to see systemic patterns not obvious by examining individual signals. Consider for example the very noisy sinusoid from Lecture 03 reproduced here in the left plot. Taking 50 such noisy sinusoids and averaging them pointwise reduces the noise artifact.
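A minimal sketch of ensemble averaging in MATLAB follows. The 2 Hz sinusoid, the sampling rate, and the noise level are assumptions made for illustration and are not the exact signal from Lecture 03; only the count of 50 signals follows the text.

```matlab
rng(1);                           % fix the random seed for repeatability
fs = 500;                         % assumed sampling rate (Hz)
t = (0:fs-1)/fs;                  % one second of samples (row vector)
nTrials = 50;                     % number of noisy signals to average
clean = sin(2*pi*2*t);            % hypothetical underlying 2 Hz sinusoid
noisy = repmat(clean, nTrials, 1) + 2*randn(nTrials, numel(t));  % one signal per row
ens = mean(noisy, 1);             % pointwise (ensemble) average across the rows
noiseSingle = std(noisy(1,:) - clean);   % residual noise in a single signal
noiseEns = std(ens - clean);             % residual noise after averaging
disp([noiseSingle noiseEns])      % the second value is several times smaller
```

Plotting `noisy(1,:)` and `ens` against `t` gives a picture along the lines of the two panels described above.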

See Section 2.4.4 of CSSB for further details.

[1] Actually, it applies to all vector spaces that can be equipped with an inner product, but we will not get to that level of abstraction in this course.