Lecture 03

Reading material: Sections 1.4, 2.1, and 2.3 of the textbook.

Recap

Recall that last lecture we talked about:

sources of noise and variability in biological systems, some common examples of them and how to model and address them.

Gaussian models for noise and the essence of the central limit theorem.

types of systems including deterministic, stochastic, causal, non-causal, stationary, non-stationary, linear and nonlinear systems.

properties of some deterministic signals.

In this lecture, we will wrap up the Chapter 1 material. Then we will move on to the processing of signals, including operations on signals and some common signals, their characterization, and their construction (both in theory and in code).

Modeling biological systems

The textbook discusses two approaches that engineers use to model biological systems. The first it calls the analog model and the second the systems model. We briefly discuss the key themes of each here.

Analog(ue) approach

In this approach, we try to model the properties of the system using mechanical or electrical devices. For example, an early model of musculo-skeletal systems utilized nonlinear springs, contractile elements, and other such mechanical elements in series and parallel connections to mimic the properties and behavior shown in actual animal systems.

See Figure 1.23 in the textbook.

When mechanical elements are used to simulate biological mechanics, the model's forces and velocities correspond to biological forces and velocities. We can do the same with electrical components, provided the same principle holds (that the defining dynamical equations are similar or identical). For example, Ohm's law tells us that a resistor provides a linear relationship between voltage and current. Using voltage as a stand-in for force (or pressure) and current as a stand-in for velocity (or flow), one can model features of the cardiovascular system, with the resistor's resistance mimicking the resistance that blood vessels offer to blood flow. Of course, blood vessels are not simply resistors: they are elastic and can expand. Capturing such intricacies therefore requires adding a capacitance or adopting a nonlinear resistor.
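The electrical analogy can be made concrete with a short numerical sketch in Python (standard library only, rather than the MATLAB used later in these notes). Voltage plays the role of pressure and current the role of blood flow. A plain resistor gives $P = RQ$; placing a capacitor $C$ in parallel to mimic vessel elasticity gives $C\,dP/dt = Q - P/R$ (this parallel RC arrangement is commonly known as a two-element Windkessel model). All parameter values below are hypothetical, and the differential equation is integrated with a simple forward-Euler step.

```python
# Hypothetical parameter values in arbitrary units, for illustration only.
R = 1.0      # vessel resistance to flow (resistor)
C = 1.5      # vessel compliance/elasticity (capacitor)
Q_IN = 5.0   # constant inflow (current source)


def resistor_pressure(flow, resistance):
    """Rigid vessel: Ohm's-law analog, pressure = resistance * flow."""
    return resistance * flow


def rc_pressure(flow, resistance, compliance, t_end=20.0, dt=0.001):
    """Elastic vessel: integrate C dP/dt = Q - P/R by forward Euler from P(0) = 0."""
    p = 0.0
    for _ in range(int(round(t_end / dt))):
        dp_dt = (flow - p / resistance) / compliance
        p += dt * dp_dt
    return p


print(resistor_pressure(Q_IN, R))          # → 5.0
print(round(rc_pressure(Q_IN, R, C), 3))   # → 5.0  (settles at Q_IN * R)
```

With the capacitor present, the pressure rises gradually toward $QR$ (with time constant $RC$) instead of jumping there instantly, which is the kind of behavior a plain resistor cannot capture.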

The "analog" in the title of this section thus is also rightly interpreted as an "analogous" approach and not just in the sense of analog vs. digital modeling.

Systems approach

In this approach, one does not seek to create models that mimic the exact behavior of a biological system with mechanical, electrical, or pneumatic elements. Instead, a more abstract approach is taken. Arguably, this is the more modern of the two approaches. In this conceptual framework, one wants to model the input-output behavior of different systems or system components. Rather than concentrating on the specifics of how each operation is implemented, the focus is on accurately describing what the system does.

An important innovation in this approach is the separation of the process into a plant[1] and a controller, together with the introduction of feedback. Consider a simple system that must regulate some variable of interest, keeping it close to an acceptable reference value. This variable could be the velocity of your car in cruise-control mode, the temperature in your home when the thermostat is set to auto, or your body temperature. In this setting, we can consider the ECU in the car, the thermostat in your home, or the autonomic nervous system to be the controller, and the engine of the car, the A/C unit in the home, or the body itself to be the plant. It is the job of the controller to regulate the output of the plant.

In each of these systems, the reference (a speed in mph, or a temperature in °F or °C) can be considered the input to the controller. In the systems model, this reference is compared with the current value, i.e., the output of the plant, to generate an error signal, and the controller uses this error signal to send commands to the plant that modify its output. Since this output is measured again and fed back into the system via the error signal, we say that we have a feedback system (in fact, a negative feedback system, but that is for later lectures). The figure below illustrates this abstraction.
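The loop can be sketched in a few lines of Python (standard library only), assuming a thermostat-like setting with hypothetical numbers. The controller here is proportional (its command is a gain times the error), and the plant is a deliberately simple, hypothetical model whose output changes at a rate equal to the control signal; neither model is taken from the textbook.

```python
def simulate_feedback(reference, x0=0.0, gain=1.0, dt=0.1, steps=200):
    """Discrete-time negative feedback loop with a proportional controller.

    Hypothetical plant: the output changes at a rate equal to the control
    signal, x[k+1] = x[k] + dt * u[k].
    """
    x = x0
    history = [x]
    for _ in range(steps):
        error = reference - x   # compare the reference with the measured output
        u = gain * error        # controller: command proportional to the error
        x = x + dt * u          # plant responds to the control signal
        history.append(x)       # the new output is measured and fed back
    return history


trace = simulate_feedback(reference=21.0, x0=15.0)   # e.g. 15 °C room, 21 °C setpoint
print(round(trace[-1], 4))   # → 21.0
```

In this model the error shrinks by a factor of $1 - \text{gain}\cdot dt = 0.9$ at every step, so the output settles at the reference.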

Elementary operations on signals

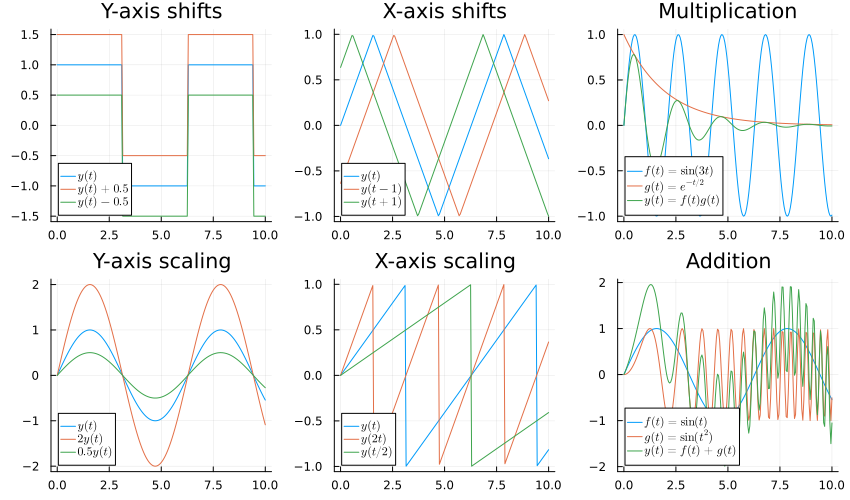

First we discuss some elementary operations on signals. All the operations we discuss are visualized in the figure below.

Y-axis shifts (vertical shift)

The first operation is the $y$-axis shift, or vertical shift.

Given a function $x(t)$, its vertical shift is a new function $y(t) = x(t) + c$, where $c$ is a constant. In other words, all the output values change by $c$ units. If $c$ is positive, the graph shifts up; if $c$ is negative, the graph shifts down.

The figure shows a vertically shifted version of the original signal.

X-axis shifts (horizontal/time shift)

The next operation is $x$-axis shifting, or time shifting, of a signal. This means that the signal is either delayed or advanced along the time axis.

Given a function $x(t)$, the new function $y(t) = x(t - t_0)$, where $t_0$ is a constant, is a horizontal shift of $x(t)$. If $t_0$ is positive, the graph shifts right (the signal is delayed); if $t_0$ is negative, the graph shifts left (the signal is advanced).

The figure shows a time-shifted version of the original signal.
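To see both shifts numerically, here is a small Python sketch that represents a signal as a function of time (a representation chosen for illustration). The helper names vertical_shift and time_shift are hypothetical, and the original signal is taken to be $\sin t$.

```python
import math


def vertical_shift(x, c):
    """Return the signal t -> x(t) + c (moves the graph up when c > 0)."""
    return lambda t: x(t) + c


def time_shift(x, t0):
    """Return the signal t -> x(t - t0) (moves the graph right, a delay, when t0 > 0)."""
    return lambda t: x(t - t0)


x = math.sin                   # original signal x(t) = sin(t)
up = vertical_shift(x, 2.0)    # y(t) = sin(t) + 2
delayed = time_shift(x, 1.0)   # y(t) = sin(t - 1)

print(up(0.0))        # → 2.0
print(delayed(1.0))   # → 0.0  (the value x had at t = 0 now occurs at t = 1)
```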

Multiplication

The next basic signal operation is multiplication. In this case, the amplitudes (output values) of two signals are multiplied at each instant to obtain a new signal. Mathematically,

$$y(t) = x_1(t)\,x_2(t)$$

The figure shows the product of two signals.

Y-axis scaling (amplitude scaling)

The process of rescaling the amplitude of a signal, i.e., amplifying or attenuating it, is known as amplitude scaling. The amplitude scaling of a continuous-time signal $x(t)$ is defined as

$$y(t) = A\,x(t)$$

where $A$ is a constant. As the figure shows, if $|A| > 1$ the signal is amplified, and if $|A| < 1$ it is attenuated.
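Multiplication and amplitude scaling can be sketched in Python (standard library only) by treating signals as functions of time. The helper names are hypothetical, and the decaying envelope and 1 Hz cosine below are example signals chosen for illustration, not the ones in the figure.

```python
import math


def multiply(x1, x2):
    """Pointwise product: y(t) = x1(t) * x2(t)."""
    return lambda t: x1(t) * x2(t)


def amplitude_scale(x, A):
    """y(t) = A * x(t): amplifies when |A| > 1, attenuates when |A| < 1."""
    return lambda t: A * x(t)


envelope = lambda t: math.exp(-t)               # hypothetical decaying envelope
carrier = lambda t: math.cos(2 * math.pi * t)   # hypothetical 1 Hz cosine
product = multiply(envelope, carrier)           # decaying oscillation

louder = amplitude_scale(carrier, 3.0)    # amplified
quieter = amplitude_scale(carrier, 0.5)   # attenuated

print(product(0.0))                # → 1.0
print(louder(0.0), quieter(0.0))   # → 3.0 0.5
```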

X-axis scaling (time scaling)

Time-axis scaling of a signal is also called reparametrization[2] of $t$. Mathematically, we write $y(t) = x(at)$, where $a$ is a scalar constant. In essence, this amounts to speeding up or slowing down the signal: if $a > 1$ the signal is sped up (compressed in time), and if $0 < a < 1$ the signal is slowed down (stretched in time).
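A short Python sketch of time scaling, assuming a 1 Hz sine as the original signal (a hypothetical choice). Scaling by $a = 2$ halves the period and scaling by $a = 0.5$ doubles it, so each version reaches its first peak at a different time.

```python
import math


def time_scale(x, a):
    """y(t) = x(a t): a > 1 speeds the signal up, 0 < a < 1 slows it down."""
    return lambda t: x(a * t)


x = lambda t: math.sin(2 * math.pi * t)   # 1 Hz sinusoid, period 1 s
fast = time_scale(x, 2.0)                 # behaves like 2 Hz (period 0.5 s)
slow = time_scale(x, 0.5)                 # behaves like 0.5 Hz (period 2 s)

# x peaks at t = 0.25 s; fast peaks at 0.125 s and slow at 0.5 s.
print(round(x(0.25), 6), round(fast(0.125), 6), round(slow(0.5), 6))   # → 1.0 1.0 1.0
```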

Addition

The addition of two signals is simply the addition of their corresponding amplitudes at each instant. That is, if $x_1(t)$ and $x_2(t)$ are two continuous-time signals, their sum is the signal

$$y(t) = x_1(t) + x_2(t)$$

The figure shows an example of the sum of two signals.
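For sampled (discrete) signals, addition is an element-by-element sum. A minimal Python sketch, assuming both signals are stored as equally long lists of samples taken at the same instants; the helper name add_signals and the ramp and constant values are hypothetical.

```python
def add_signals(x1, x2):
    """Sample-by-sample sum y[n] = x1[n] + x2[n] of two equally long signals."""
    if len(x1) != len(x2):
        raise ValueError("signals must have the same number of samples")
    return [a + b for a, b in zip(x1, x2)]


x1 = [0.0, 1.0, 2.0, 3.0]   # a ramp
x2 = [1.0, 1.0, 1.0, 1.0]   # a constant
print(add_signals(x1, x2))  # → [1.0, 2.0, 3.0, 4.0]
```

Adding a constant signal, as in this example, is the same as a vertical shift.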

Constructing signals

Next, we will be discussing how to construct signals. The first signal we will discuss is the sinusoid. In later lectures, we will discuss some other common signals.

Sinusoids

Sinusoidal signals are periodic functions based on the sine or cosine function. Note that the term sinusoid is a generic term for various curves or traces constructed using sines and cosines (which are, of course, related to each other: $\cos\theta = \sin(\theta + \pi/2)$).

The general form of a sinusoidal signal is

$$x(t) = A\sin(\omega t + \phi)$$

Here $A$, $\omega$, and $\phi$ are parameters that characterize the sinusoidal signal. When $A = 1$ and $\phi = 0$ we get what we call a simple sinusoid.

The amplitude $A$ of the signal determines how large the signal is along the dependent axis: it is half the peak-to-valley height of the signal. Specifically, the sinusoid oscillates between $-A$ and $A$ absent any $y$-axis shifts. Thus, $A$ acts like a $y$-axis scaling factor on a simple sinusoid.

The frequency of the signal measures how fast the signal oscillates along the time (independent) axis. When it is measured in radians/second (recall that the sine function takes an angle as input), it is represented by $\omega$ (known as the angular frequency). The frequency $f$, in Hertz (Hz), gives the number of complete cycles that the sinusoid repeats in one second. The duration of each cycle is called the period ($T$) of the sinusoid.

Mathematically, the frequency (in Hertz) of a sinusoid is simply the inverse of its period, and it is related to the angular frequency as follows:

$$f = \frac{1}{T} = \frac{\omega}{2\pi}$$

The phase ($\phi$) of a sinusoid causes it to be time-shifted (shifted along the $x$-axis): since $A\sin(\omega t + \phi) = A\sin\left(\omega(t + \phi/\omega)\right)$, a positive phase shifts the sinusoid left by $\phi/\omega$ seconds.

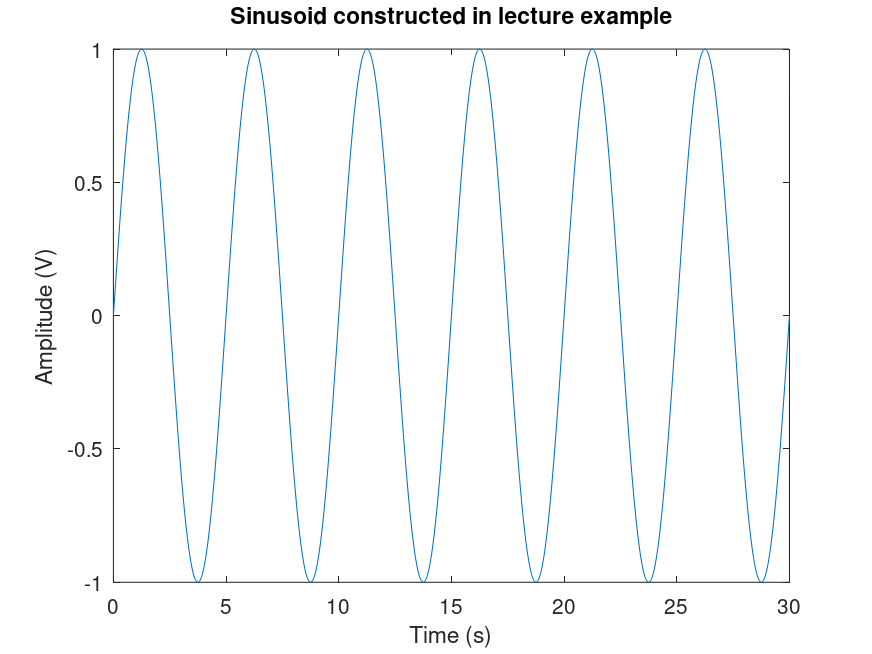
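The relations $f = 1/T = \omega/(2\pi)$ are easy to check numerically. This Python sketch (the helper name sinusoid_params is hypothetical) converts a rate given as a number of cycles per time interval into frequency, period, and angular frequency, using the rate of 12 cycles per minute from the construction example.

```python
import math


def sinusoid_params(cycles, seconds):
    """Return (f in Hz, T in s, omega in rad/s) for `cycles` cycles every `seconds` s."""
    f = cycles / seconds        # cycles per second (Hz)
    T = 1.0 / f                 # period: duration of one cycle
    omega = 2.0 * math.pi * f   # angular frequency
    return f, T, omega


f, T, omega = sinusoid_params(12, 60)         # 12 cycles per minute
print(f, T)                                   # → 0.2 5.0
print(math.isclose(omega, 2 * math.pi / 5))   # → True
```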

Constructing and plotting sinusoids

Mathematically, constructing a sinusoid of a given amplitude and frequency is easy. We just plug in the right numbers.

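Clearly we need $A = 3$ to have an amplitude of 3 units. Thus our starting guess for the form of the signal is $y(t) = 3\sin(2\pi f t)$. Now, 12 cycles every minute implies $f = 12/60 = 0.2$ Hz. Thus our signal becomes $y(t) = 3\sin(2\pi \cdot 0.2\,t)$, i.e., $y(t) = 3\sin(2\pi t/5)$. Since we need the signal to start at 1.5 V, we can try a $y$-axis shift: $y(t) = 1.5 + 3\sin(2\pi t/5)$. However, this signal violates the given constraint (why?).

Thus we should try a different strategy. Let's introduce a phase shift: $y(t) = 3\sin(2\pi t/5 + \phi)$. Then solve $y(0) = 3\sin\phi = 1.5$ for $\phi$, which gives $\sin\phi = 1/2$. Its principal solution is $\phi = \pi/6$ ($\phi = 5\pi/6$ also gives $y(0) = 1.5$, but that signal starts out decreasing). Taking $\phi = \pi/6$, the required signal is:

$$y(t) = 3\sin\left(\frac{2\pi t}{5} + \frac{\pi}{6}\right)$$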
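A quick numerical check of the constructed signal, written in Python (standard library only). It assumes the principal solution $\phi = \sin^{-1}(1.5/3) = \pi/6$ and confirms the starting value, the phase, and the 5 s period.

```python
import math

A = 3.0                    # amplitude (V)
OMEGA = 2 * math.pi / 5    # 12 cycles/min = 0.2 Hz, i.e. 2*pi*0.2 rad/s
phi = math.asin(1.5 / A)   # principal solution of 3 sin(phi) = 1.5, i.e. pi/6


def y(t):
    """The constructed signal y(t) = 3 sin(2 pi t / 5 + phi)."""
    return A * math.sin(OMEGA * t + phi)


print(round(y(0.0), 6))                 # → 1.5  (starts at 1.5 V)
print(math.isclose(phi, math.pi / 6))   # → True
print(math.isclose(y(0.0), y(5.0)))     # → True  (repeats after one 5 s period)
```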

While constructing a sinusoid mathematically is straightforward, as we have seen above, visualizing one on a computer involves a bit more work. First, recall that digital signals are not continuous-time signals; rather, they are discretized using some sampling scheme. Therefore, our first task is to choose the sampling frequency for our signal. Suppose we want to plot 30 seconds of the above example signal (how many cycles would you expect to see?) and we make 50 measurements every second. Thus we have

```matlab
fsample = 50;       % sampling frequency (samples per second)
t0 = 0;             % start of the time interval (s)
tf = 30;            % end of the time interval (s)
fsignal = 2*pi/5;   % angular frequency of the signal (rad/s), i.e. 0.2 Hz
```

where fsample is the sampling frequency, fsignal is the angular frequency (in rad/s) of the desired signal, and t0, tf denote the interval over which we wish to visualize the signal. Next we generate the time vector t = t0:1/fsample:tf and then plot the signal. The complete code is shown below.

```matlab
fsample = 50;       % sampling frequency (samples per second)
t0 = 0;             % start time (s)
tf = 30;            % end time (s)
fsignal = 2*pi/5;   % angular frequency (rad/s)

t = t0:1/fsample:tf;           % sample times, spaced 1/fsample apart
y = 3*sin(fsignal*t + pi/6);   % the example signal constructed above

plot(t, y)
xlabel("Time (s)")
ylabel("Amplitude (V)")
title("Sinusoid constructed in lecture example")
```

Note that the axes are labeled using the xlabel and ylabel commands.

Manipulating sinusoids

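It is often convenient to manipulate sine and cosine waves analytically. To add two sinusoids of the same frequency and the same phase, just add their amplitudes together. More generally, a cosine and a sine of the same frequency combine into a single sinusoid of that frequency:

$$a\cos(\omega t) + b\sin(\omega t) = C\cos(\omega t - \theta), \qquad C = \sqrt{a^2 + b^2}, \quad \theta = \operatorname{atan2}(b, a)$$

where $\operatorname{atan2}(b, a)$ equals $\tan^{-1}(b/a)$ when $a > 0$.

A similar relation converts a sinusoid written in terms of the sine function into a sum of a pure sine and a pure cosine:

$$C\sin(\omega t + \theta) = C\cos\theta\,\sin(\omega t) + C\sin\theta\,\cos(\omega t)$$

Therefore, we now have a procedure to add sinusoids of the same frequency analytically: expand each one into its sine and cosine components, add the corresponding coefficients, and recombine the result into a single sinusoid.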
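The expand, add, and recombine procedure can be sketched in Python (standard library only). The helper names to_components and add_sinusoids are hypothetical; both inputs are assumed to share the same angular frequency $\omega$, which therefore never appears in the calculation.

```python
import math


def to_components(amplitude, phase):
    """C sin(wt + theta) = (C cos theta) sin(wt) + (C sin theta) cos(wt).

    Returns the (sine coefficient, cosine coefficient) pair.
    """
    return amplitude * math.cos(phase), amplitude * math.sin(phase)


def add_sinusoids(a1, p1, a2, p2):
    """Add a1 sin(wt + p1) and a2 sin(wt + p2); return (amplitude, phase) of the sum."""
    s1, c1 = to_components(a1, p1)
    s2, c2 = to_components(a2, p2)
    s, c = s1 + s2, c1 + c2                     # add sine and cosine parts separately
    return math.hypot(s, c), math.atan2(c, s)   # recombine into C sin(wt + theta)


# sin(wt) + sin(wt + pi/2) = sin(wt) + cos(wt) = sqrt(2) sin(wt + pi/4)
amp, phase = add_sinusoids(1.0, 0.0, 1.0, math.pi / 2)
print(round(amp, 6), round(phase, 6))   # → 1.414214 0.785398
```

The amplitude of the sum ($\sqrt{2}$ here) is not the sum of the amplitudes (2), because the two inputs are out of phase.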

Review of some basics regarding exponents and logarithms

We will have the opportunity to work with Euler's formula, complex numbers, and complex exponentials in this course. Therefore, at this stage it is instructive to review the basic laws of exponents and logarithms. This should be very familiar material.

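For a real number $a$ and a positive integer $n$ we define $a^n = a \cdot a \cdots a$ ($n$ factors), with $a^0 = 1$ and $a^{-n} = 1/a^n$ for $a \neq 0$. Then, for two nonzero real numbers $a, b$ and two integers $m, n$, the following laws hold:

$$a^m a^n = a^{m+n}, \qquad \frac{a^m}{a^n} = a^{m-n}, \qquad (a^m)^n = a^{mn}, \qquad (ab)^n = a^n b^n$$

The logarithm is defined as the inverse operation: for a base $b > 0$ with $b \neq 1$ and a number $x > 0$,

$$\log_b x = u \quad \Longleftrightarrow \quad b^u = x$$

One should read $\log_b x$ as "the logarithm of $x$ to the base $b$". When the base is $b = e$, we replace the notation $\log_e x$ with $\ln x$ (natural logarithm)[3].

From the above definition it follows that:

$$b^{\log_b x} = x, \qquad \log_b\left(b^u\right) = u$$

The following properties hold as a result (for $x, y > 0$ and real $p$):

$$\log_b(xy) = \log_b x + \log_b y, \qquad \log_b\frac{x}{y} = \log_b x - \log_b y, \qquad \log_b\left(x^p\right) = p\log_b x$$

To prove the first, write

$$b^{\log_b(xy)} = xy = b^{\log_b x}\, b^{\log_b y} = b^{\log_b x + \log_b y}$$

Since the first and last expressions in this chain are powers of the same base $b$, the two exponents must be equal. Thus follows the identity $\log_b(xy) = \log_b x + \log_b y$.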

The other identities are left as an exercise.
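These laws can be spot-checked numerically in Python (standard library only). The integer exponent laws are checked exactly; the logarithm laws use floating point, so they are compared with math.isclose. The values of a, b, m, n, BASE, x, and y are hypothetical, and the helper log_b relies on math.log(value, base), which computes the logarithm of value to the given base.

```python
import math

# Laws of exponents with integer exponents (exact integer arithmetic).
a, b, m, n = 3, 5, 4, 2
assert a**m * a**n == a**(m + n)     # 81 * 9 == 729
assert a**m // a**n == a**(m - n)    # 81 // 9 == 9 (exact, since a**n divides a**m)
assert (a**m)**n == a**(m * n)
assert (a * b)**n == a**n * b**n

# Laws of logarithms (floating point, so compare with a tolerance).
BASE = 2.0          # base > 0 and base != 1
x, y = 8.0, 32.0    # positive arguments


def log_b(v):
    """Logarithm of v to the base BASE."""
    return math.log(v, BASE)


print(math.isclose(BASE ** log_b(x), x))                 # → True  (b^(log_b x) = x)
print(math.isclose(log_b(x * y), log_b(x) + log_b(y)))   # → True  (product rule)
print(math.isclose(log_b(y / x), log_b(y) - log_b(x)))   # → True  (quotient rule)
print(math.isclose(log_b(x ** 3), 3 * log_b(x)))         # → True  (power rule)
print(math.isclose(math.log(math.e ** 2.5), 2.5))        # → True  (ln is log base e)
```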

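We assume the class is familiar with complex numbers and operations on them. Recall that while the real numbers are one-dimensional, the complex numbers are two-dimensional, having both a real coordinate and an imaginary coordinate. Every complex number can therefore be written both in Cartesian form, $z = a + jb$ with $j = \sqrt{-1}$ (often written with $i$ in place of $j$), and in polar form, $z = r(\cos\theta + j\sin\theta)$, where $r = \sqrt{a^2 + b^2}$ and $\theta$ is the angle $z$ makes with the positive real axis.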

Euler's formula

Euler's formula reveals an essential and deeply profound relationship between the polar and Cartesian forms via Euler's number $e$. It led the 19th-century Harvard mathematician Benjamin Peirce to remark (after proving a particular version of it):

Gentlemen, that is surely true, it is absolutely paradoxical; we cannot understand it, and we don't know what it means. But we have proved it, and therefore we know it is the truth.

Let us therefore prove it, starting only from the supposition that raising $e$ to a complex exponent should return a complex number[4], and examining what calculus can tell us.

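Let $e^{jx}$, being a complex number, have some polar representation:

$$e^{jx} = r(\cos\theta + j\sin\theta) \tag{3}$$

where $r$ and $\theta$ may depend on $x$.

Differentiate both sides with respect to $x$ (without assuming anything about $r$ or $\theta$) and apply the product rule:

$$je^{jx} = \frac{dr}{dx}(\cos\theta + j\sin\theta) + r\frac{d\theta}{dx}(-\sin\theta + j\cos\theta) \tag{4}$$

Put definition (3) back into the left-hand side of (4). Then we have

$$jr(\cos\theta + j\sin\theta) = \frac{dr}{dx}(\cos\theta + j\sin\theta) + r\frac{d\theta}{dx}(-\sin\theta + j\cos\theta)$$

Expanding out and collecting the real and imaginary parts, we get

$$-r\sin\theta + jr\cos\theta = \left(\frac{dr}{dx}\cos\theta - r\frac{d\theta}{dx}\sin\theta\right) + j\left(\frac{dr}{dx}\sin\theta + r\frac{d\theta}{dx}\cos\theta\right)$$

For equality to hold, the real parts and the imaginary parts must match separately. Solving this pair of equations for $dr/dx$ and $d\theta/dx$ (with $r \neq 0$), we must have:

$$\frac{dr}{dx} = 0, \qquad \frac{d\theta}{dx} = 1$$

Therefore $r$ is a constant and $\theta = x + C$, where $C$ is another constant, and we have:

$$e^{jx} = r\left(\cos(x + C) + j\sin(x + C)\right)$$

Setting $x = 0$ gives $1 = r(\cos C + j\sin C)$, so $r = 1$ and $C = 0$ (up to a multiple of $2\pi$). Thus we get Euler's formula:

$$e^{jx} = \cos x + j\sin x$$

Note that $e^{jx}$ is indeed periodic in $x$, with period $2\pi$.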
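Euler's formula and the conclusions of the derivation ($r = 1$, $\theta = x$) can be spot-checked in Python with the standard cmath module, which writes the imaginary unit as 1j, matching the engineering $j$. The helper name euler_rhs and the sample points are hypothetical choices for illustration.

```python
import cmath
import math


def euler_rhs(x):
    """Right-hand side of Euler's formula: cos(x) + j sin(x)."""
    return complex(math.cos(x), math.sin(x))


for x in [0.0, 0.5, math.pi / 3, math.pi, 2.0]:
    lhs = cmath.exp(1j * x)                    # e^{jx} computed directly
    assert cmath.isclose(lhs, euler_rhs(x))    # equals cos x + j sin x
    assert math.isclose(abs(lhs), 1.0)         # r = 1: on the unit circle
    assert math.isclose(cmath.phase(lhs), x)   # theta = x (for x in (-pi, pi])

# Periodicity with period 2*pi: e^{j(x + 2 pi)} = e^{jx}
print(cmath.isclose(cmath.exp(1j * (1.0 + 2 * math.pi)), cmath.exp(1j * 1.0)))   # → True
```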

Now let us use it a couple of times.

(a) We can simply use with . Thus,

| [1] | The term plant is a relic from the old times when these concepts were first introduced in the setting of large industrial production plants (often for chemicals). |

| [2] | Technically this encompasses arbitrary changes to the timeline but we don't discuss that here. |

| [3] | It is natural in many senses that are beyond the scope of the course; it suffices to note that $e$ is a very important mathematical constant, on par with $\pi$. |

| [4] | A much more natural assumption than the one in Appendix A of CSSB, which admittedly seems to start out of nowhere. |