| Name | NetID | Section |
|---|---|---|
| Larry Du | larryrd2 | ECE 120 |
| Evan Schmitz | evanls3 | ECE 110 |

Statement of Purpose

The goal of our project is to build and program a self-balancing inverted pendulum robot. This robot will be able to detect which way it is leaning using a gyroscope in an IMU. Then, it will adjust its wheels accordingly to stay balanced. In addition, it will be able to solve a simple maze, navigating with an ultrasonic sensor. Our project will be unique in that it will have to simultaneously balance and move.

Background Research

We chose this project after seeing a YouTube video of a similar inverted pendulum. The robot in the video was able to balance, but we wanted to stretch our technical limits: not only redesign and build a balancing robot, but also make one that can navigate autonomously. This project has a good balance of hardware (Evan in ECE 110) and software (Larry in ECE 120), so we can both contribute the strengths and skills developed in our classes. When beginning the project, we also considered a maze-navigating car and a line-following robot. We considered both of these too simple for the time we have to design and build our project, so we decided to integrate the maze-navigating aspect into the inverted pendulum. All three ideas are autonomous robots, each with a different task.

Block Diagram / Flow Chart

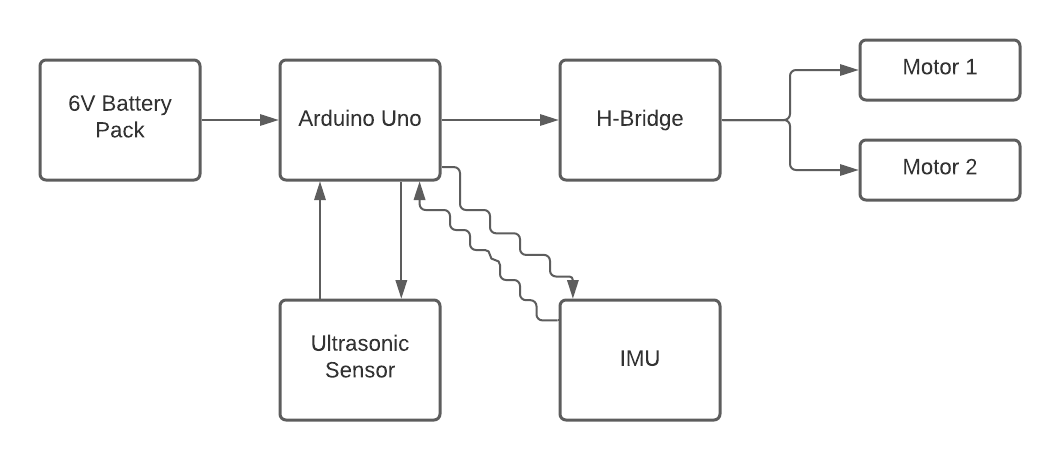

Hardware:

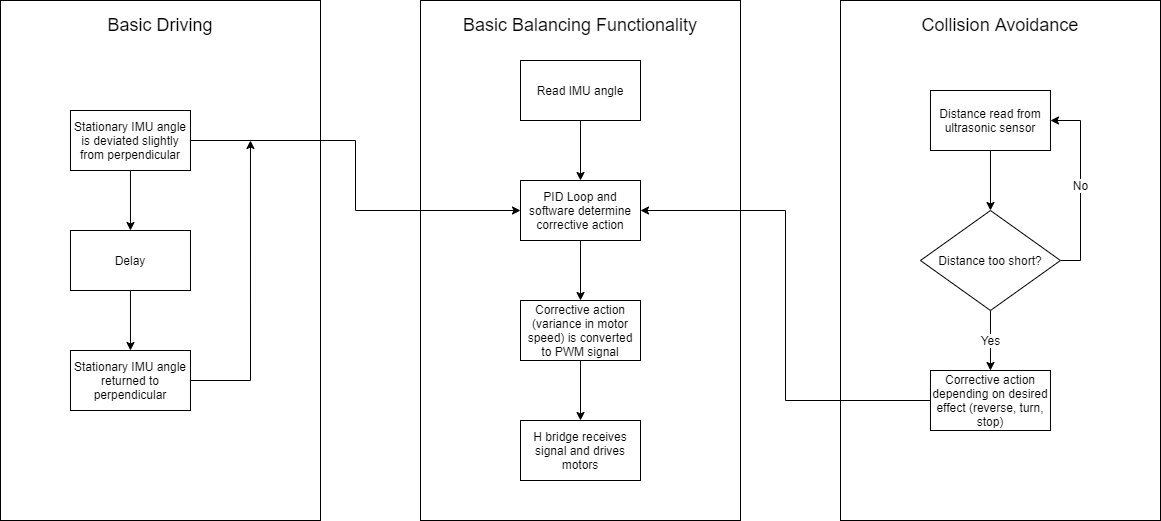

Software:

System Overview

Hardware:

This simplified block diagram represents the planned hardware. I drew a far more detailed version, but simplified it to a block diagram for the page. The entire circuit is powered by a 6V battery pack. The Arduino Uno will supply the IMU with 5V and receive gyroscope data in return. Similarly, it will supply the ultrasonic sensor with 5V and receive information about potential obstacles. Finally, the Arduino will power and command the H-bridge, which will in turn power and drive the motors.

Software:

Software development will be separated into three primary goals: balancing, driving, and collision avoidance, in that order. To achieve basic balancing, the software will continuously read the angle from the IMU, determine the necessary action with a PID loop, and send a PWM signal corresponding to that action to the H-bridge, which will then drive the motors. Basic driving (forwards and backwards) will involve shifting the target IMU angle slightly away from the perpendicular, inducing a forwards or backwards lean that should produce useful movement. The current plan is to drive autonomously, so the target angle will be held off the perpendicular for a fixed delay and then returned to it, ending the driving sequence. Collision avoidance will require more complex algorithms, but the basic idea is to read distance information from the ultrasonic sensor and take corrective action if an obstacle is too close. The corrective action will depend on the desired effect. For example, if we want the robot to traverse a simple maze, we could have the robot turn 90 degrees to the right (as calculated by the software) and continue driving forwards.
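The balancing step described above can be sketched in plain C++ (no Arduino headers, so it runs on a desktop). This is only a minimal illustration under stated assumptions: the `TiltPid` name, the gains, and the sign convention are hypothetical, and the output range of -255 to 255 assumes the 8-bit PWM range of an Arduino Uno with the sign selecting the motor direction.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical PID controller for the tilt angle. The gains passed in are
// placeholders for illustration, not tuned values from this project.
struct TiltPid {
    double kp, ki, kd;       // proportional, integral, derivative gains
    double integral;         // accumulated error (degree-seconds)
    double prevError;        // error from the previous step (degrees)

    TiltPid(double p, double i, double d)
        : kp(p), ki(i), kd(d), integral(0.0), prevError(0.0) {}

    // Returns a signed motor command limited to [-255, 255]. The sign picks
    // the direction; the magnitude would become the PWM duty cycle.
    // Whether a positive command means "forwards" depends on the wiring.
    double step(double setpointDeg, double angleDeg, double dtSec) {
        assert(dtSec > 0.0);  // the derivative term divides by dt
        const double error = setpointDeg - angleDeg;
        integral += error * dtSec;
        const double derivative = (error - prevError) / dtSec;
        prevError = error;
        const double output = kp * error + ki * integral + kd * derivative;
        return std::max(-255.0, std::min(255.0, output));
    }
};
```

With a setpoint of 0 degrees the controller only balances. Driving, as planned above, would correspond to calling `step` with a small nonzero setpoint (for example 3 degrees) for the duration of the drive and then returning the setpoint to 0.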

Parts

| Part | Source | Cost |
|---|---|---|
| Motors (×2) | | $49.90 total |
| Breadboard | ECE 110 Inventory | Free |
| Redboard | ECE 110 Inventory | Free |
| MPU 6050 | ECE 110 Inventory | Free |
| H-bridge | | |
| Ultrasonic sensor | ECE 110 Inventory | Free |
| Battery holder | ECE Supply Center | $1.78 |
| Standard AA batteries (×8) | ECE Supply Center | $3.40 total |
| Duct tape | ECE Supply Center | $4.92 |
| Acrylic sheet | | |
| Screws | ECE Supply Center | $1.12 total |
| Hot glue sticks | | |

Possible Challenges

Some challenges that I foresee include learning how to use and program the Arduino and working with new sensors, such as the gyroscope. Possibly the biggest challenge, however, will be deciding how to program the robot to simultaneously move forwards, backwards, and even turn while keeping itself balanced. The motors' response delay will also cause some challenges as we try to quickly compensate for any tipping.

References

Inverted Pendulum Robot: https://www.youtube.com/watch?v=nxRWKAauAMo

Final Update/Results

Overall, our robot balances adequately. It balances perfectly when leaning in one direction, but as soon as it leans in the other direction, it begins oscillating quite chaotically and eventually falls over. If we support it in that problematic direction, it is able to balance for significant periods of time. We're still not sure why it's different in the two directions, considering the code was identical (until we changed it to mitigate the issue).

Regarding future plans, we didn't get around to adding the ultrasonic collision detection or basic driving functionality, as this problem plagued us for the rest of the semester.

ECE 110/120 Honors Lab Final Report

This semester in honors lab, our group decided to build a self-balancing inverted pendulum. The idea of this two-wheeled robot is that it can detect its angle from upright and move its wheels in such a way that it corrects itself and is able to keep “standing” on its own. Our project will be unique in that to make it work, we will not only have to consider the hardware and software, but also the physical appearance and aspects such as wheel size, height, and center of gravity. This focus on the physicality of the robot is a key feature, along with the challenge of staying balanced.

One of the main benefits of a self-balancing inverted pendulum robot is its use as a benchmark for testing software control strategies. This became apparent as soon as we began designing the control software. At first, we used only proportional control, which proved slow to react and not responsive enough to sudden jerks. Next, we tested PID control, which proved much more effective, but required a lot of fine-tuning and ultimately proved only moderately successful. It is no surprise that adding integral and derivative terms outperforms proportional control alone, but the comparison illustrates the principle. One could use this robot to examine the effectiveness of neural networks, differential equation control, etc.

At its core, our inverted pendulum is not all that complex. After initializing all variables and beginning MPU communication in setup, the robot is ready to start its main loop (Figure 1). Using the Arduino's built-in Wire library, the Arduino board first reads the gyroscope and accelerometer values provided by the MPU. From these values, it determines the robot's angle from upright. This angle is the input to a PID (Proportional, Integral, Derivative) loop, which computes a motor command from how far the robot is from upright. The math for these calculations is somewhat complicated and outside of our experience, so we used sample code from Instructables.com [2].

Next, we had to move the motors to correct for the deviation from upright. We couldn't just turn the motors on at full speed in whichever direction the robot was tilting, as it would be far too aggressive, so this needed some thought too. Our original program (the one that we wrote) used Arduino's map function to run the motors faster the more the robot was leaning. This, however, also proved too aggressive: as soon as the robot started to lean too much, it would throw itself in the opposite direction and did not have the reaction time to catch itself. This is the other reason we turned to the prewritten PID code, which also provided good math to convert the angular reading to motor speed. Below is an excerpt of the code we used to calculate the speed based on the gyroscope and accelerometer. After uploading this code, the robot worked a lot better.

Finally, the motors execute the movement based on the value provided by the PID code. As this code repeats over and over, the robot is able to keep balancing itself.
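For comparison, the proportional-only approach of our original program can be sketched as follows. This is neither the original program nor the excerpt mentioned above; it is a hedged reconstruction in plain C++. `mapRange` mirrors the integer formula given in the Arduino `map()` reference, while `leanToPwm` and the 30 degree saturation angle are hypothetical names and values chosen for illustration.

```cpp
#include <cassert>
#include <cstdlib>

// Linear re-mapping with the same integer arithmetic as the formula in the
// Arduino map() reference (the division truncates toward zero).
long mapRange(long x, long inMin, long inMax, long outMin, long outMax) {
    assert(inMax != inMin);  // avoid division by zero
    return (x - inMin) * (outMax - outMin) / (inMax - inMin) + outMin;
}

// Hypothetical proportional-only mapping from tilt (whole degrees) to a
// signed PWM value. maxLeanDeg is an assumed angle at which the motors
// reach full speed.
int leanToPwm(int leanDeg, int maxLeanDeg = 30) {
    assert(maxLeanDeg > 0);
    long magnitude = std::labs(leanDeg);
    if (magnitude > maxLeanDeg) {
        magnitude = maxLeanDeg;  // saturate beyond the assumed maximum lean
    }
    const long pwm = mapRange(magnitude, 0, maxLeanDeg, 0, 255);
    return static_cast<int>(leanDeg < 0 ? -pwm : pwm);
}
```

The motor speed here depends only on the current lean. Nothing in the mapping reacts to how fast the robot is already swinging back, which is consistent with the overshoot we observed and is what the derivative term of a PID loop is meant to address.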

The primary sensor we used was the MPU-6050, which features a triple-axis accelerometer and gyroscope. We used the values obtained from these sensors to determine the voltage to send to the motors, in turn producing a reaction force that kept the robot upright. Before finishing the project, we tested the MPU by displaying its readings on the serial monitor and verified that they were close to the expected values.

To verify that our system worked, we performed the very simple test of visually seeing if the robot could balance. The result was that the robot could balance when leaning in one direction, but in the other direction the robot usually fell over after 5-10 seconds of leaning. Overall, however, the system exhibited moderate success, generally balancing for around five seconds without help. When given assistance in the problematic direction, the robot could balance for much longer. Additionally, the system showed characteristics of adaptive response from its PID control (the integral portion), allowing it to balance at greater efficiency over longer periods of time.

Regarding hardware challenges, there were a few minor setbacks: due to budget constraints, we had to 3D-print our own wheels; due to slow parts orders, we had to be a little inventive in what materials we used; and due to poor tolerances on the printed wheels, we had to wrap duct tape around the motor axles to get a snug fit. These hardware problems were generally easy to solve, either by waiting for parts or by being slightly creative, such as using duct tape to widen the motor axles or rubber bands for wheel traction. Once fixed, they did not pose a challenge in the later stages.

The real challenge we faced was in software development. Modifying the algorithm was simple, but problems arose in translating our program into tangible results. Our first main issue was an inconsistent gyroscope reading. It regularly varied by ±10 units between ticks, which was far too inconsistent for our purposes. To fix this, we switched to accelerometer readings, but this presented its own problem: an unusually slow reaction time from the program to the motors, which caused the robot to fall over before it even recognized that it needed to correct itself. We mitigated this problem by reducing the delay between ticks to almost 0, but it still persisted. Eventually, we switched to PID control and used a program to convert the gyroscope and other values into angular units, which proved much more effective.
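One common way to turn gyroscope and accelerometer readings into a single angle is a complementary filter, sketched below in plain C++. We have not confirmed that the code from [2] uses exactly this filter, so treat it as an illustration of the idea rather than a description of our program. The function names, the axis choice, and the blend factor `alpha = 0.98` are assumptions.

```cpp
#include <cassert>
#include <cmath>

// Tilt angle in degrees implied by gravity on two accelerometer axes.
// Which two axes to use depends on how the sensor is mounted (assumed here).
double accelAngleDeg(double accelForward, double accelVertical) {
    const double kRadToDeg = 180.0 / 3.14159265358979323846;
    return std::atan2(accelForward, accelVertical) * kRadToDeg;
}

// One complementary-filter update: integrate the gyroscope rate over the
// time step, then blend in a small share of the accelerometer angle.
// alpha is an assumed blend factor; higher values trust the gyroscope more.
double fuseAngle(double prevAngleDeg, double gyroRateDegPerSec,
                 double accelDeg, double dtSec, double alpha = 0.98) {
    assert(dtSec > 0.0 && alpha >= 0.0 && alpha <= 1.0);
    const double gyroAngle = prevAngleDeg + gyroRateDegPerSec * dtSec;
    return alpha * gyroAngle + (1.0 - alpha) * accelDeg;
}
```

Called once per loop tick, `fuseAngle` takes the previous estimate, the current gyroscope rate, and the current accelerometer angle, and returns the new estimate to feed into the controller.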

The other main challenge we faced was a very strange bug that made the two directions of lean behave differently. When the robot leaned in one direction, it caught itself nearly perfectly and maintained its balance. However, when it leaned in the other direction, the motors ran at roughly half the speed, and the robot failed to correct itself. We were very puzzled by this issue and scoured the internet in search of answers, but found nothing adequate. In the end, we decided to mitigate the problem by artificially increasing the motor speed in the problematic direction, as seen in the figure below, but this prevented the robot from using the full potential of the PID control and ended up causing it to throw itself in unpredictable ways.
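The kind of one-sided compensation described above can be illustrated with the following plain C++ sketch. It is not the code from our figure: the function name, the choice of the negative direction as the problematic one, and the boost factor are all hypothetical. The limit of 255 assumes the 8-bit PWM range of an Arduino Uno.

```cpp
#include <cassert>

// Scales the controller output in one direction only, then limits it to the
// 8-bit PWM range. Negative commands stand in for the slower direction
// (an assumption), and boost is a hypothetical factor such as 2.0.
int compensateDirection(int command, double boost) {
    assert(boost > 0.0);
    double adjusted = command < 0 ? command * boost : command;
    if (adjusted > 255.0) adjusted = 255.0;
    if (adjusted < -255.0) adjusted = -255.0;
    return static_cast<int>(adjusted);
}
```

With a boost of 2.0, every command below about -128 comes out as -255. In that range the output no longer changes with the size of the error, which may be one reason a fix of this kind limits what the PID loop can do.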

Looking back, our time in honors lab was much shorter than we expected, so there are many things we wish we had had the time to do this semester. For example, we want to keep studying and trying different programs to learn more strategies for approaching this problem. As mentioned, we started by writing our own program, but a prewritten PID program proved much more accurate and effective. There are still problems with the program we ended up using, such as the inability to balance in one direction, despite the code being perfectly mirrored for both directions. I think looking at more programs like this will allow us to improve the robot and learn more about Arduino programming and the different strategies we can use to tackle a new problem. At the beginning of the semester, we also planned to make our robot able to navigate a simple maze using an ultrasonic sensor. Unfortunately, we didn't have the time to get around to this, so it would be another way to extend the project.

Appendix

Figure 1. Basic software flowchart.

References

[1] HowToMechatronics. 2021. Arduino and MPU6050 Accelerometer and Gyroscope Tutorial. [online] Available at: <https://howtomechatronics.com/tutorials/arduino/arduino-and-mpu6050-accelerometer-and-gyroscope-tutorial/> [Accessed 8 December 2021].

[2] Instructables.com. 2021. Self Balancing Bot Using PID Control System. [online] Available at: <https://www.instructables.com/Self-Balancing-Bot-Using-PID-Control-System/> [Accessed 8 December 2021].

Video

https://drive.google.com/file/d/1d4cdlgiKePLxF0iLfJXqNR7u0OVW8kba/view?usp=sharing