Meet the Team

Logan Power, lmpower2, ECE 110

William Walters, wcw5, ECE 110

Juhnze (Joseph) Zhu, junzhez2, ECE 120

Meets Tuesday, 6-7PM

Project Proposal

- Introduction

Everyone drums on a table with their fingertips at least once in a while, from budding musicians to tone-deaf ECE majors. It always sounds great in your head, but to everyone else it sounds at best mundane and at worst spectacularly annoying. To us, this problem is solvable in a way that doesn't require putting everyone's hands in casts.

Our solution is a MIDI device that recognizes vibrations in a table (from someone drumming on it with their fingers, a pen, etc.) and outputs a MIDI track with drum beats. Ideally, given enough time, that output would be configurable. The MIDI track could be saved to a device or sent directly to a computer running a MIDI synthesizer application for immediate, amazing-sounding playback. With enough time, the device could also be configured so that different frequency ranges picked up by the microphone correspond to different MIDI tracks, each associated with a different kind of percussion instrument; e.g., your fist could be a bass kick and your pen could be a hi-hat.

- Design Details

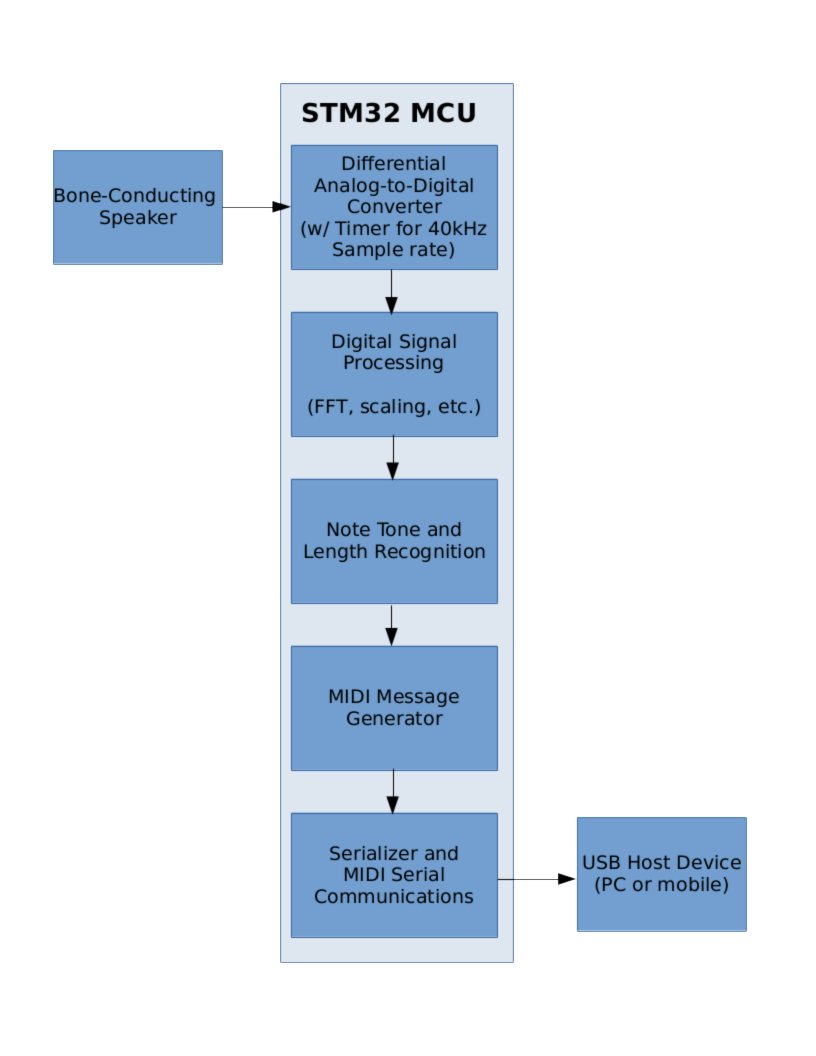

- Block Diagram

- System Overview

A button with a toggle flip-flop enables the device to listen to vibrations picked up by the bone-conduction speaker, which we use as a microphone. When a user taps their finger on a table, the vibrations in the table are picked up by this microphone. The signal from the microphone is passed to the differential ADC on the STM32 microcontroller. The ADC samples at 40 kHz and converts the analog signal from the speaker into digital values. These values are stored in memory until the signal processing software, in its main loop, runs a Fast Fourier Transform and other signal processing operations on the data. (Data acquisition happens asynchronously to the software loop.) The software then attempts to recognize a tone in the received data and decides whether the tone is part of a previously begun note or a brand new one. Information about new or finished drum beats goes to the MIDI message generator, which puts it into the format recognized by MIDI synthesizers. The MIDI message is then serialized and sent over USB to a host device, such as a PC or mobile device with a MIDI synthesizer installed.
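The last two stages above (deciding whether a beat is new or finished, then formatting a MIDI message) can be sketched roughly as follows. This is only an illustration under stated assumptions, not the project's firmware: `beat_tracker`, `beat_tracker_update`, `midi_note_message`, and the threshold values are hypothetical names and numbers, and the per-frame `level` is assumed to come from the signal processing step. The three-byte Note On/Note Off layout is standard MIDI.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* MIDI channel voice status bytes (upper nibble); the lower nibble is the channel. */
#define MIDI_NOTE_OFF 0x80u
#define MIDI_NOTE_ON  0x90u

/* Hypothetical tracker state: remembers whether a beat is currently sounding. */
typedef struct {
    int   active;        /* 1 while a beat is in progress */
    float on_threshold;  /* level that starts a beat (assumed value) */
    float off_threshold; /* lower level that ends it (hysteresis) */
} beat_tracker;

typedef enum { BEAT_NONE, BEAT_STARTED, BEAT_ENDED } beat_event;

/* Feed one per-frame signal level; report whether a beat began or finished. */
beat_event beat_tracker_update(beat_tracker *t, float level)
{
    assert(t != NULL);
    if (!t->active && level >= t->on_threshold) {
        t->active = 1;
        return BEAT_STARTED;
    }
    if (t->active && level < t->off_threshold) {
        t->active = 0;
        return BEAT_ENDED;
    }
    return BEAT_NONE; /* still part of the previous beat, or silence */
}

/* Write a 3-byte Note On (on != 0) or Note Off (on == 0) message into out[]. */
void midi_note_message(uint8_t out[3], int on, uint8_t channel,
                       uint8_t note, uint8_t velocity)
{
    assert(channel < 16 && note < 128 && velocity < 128);
    out[0] = (uint8_t)((on ? MIDI_NOTE_ON : MIDI_NOTE_OFF) | channel);
    out[1] = note;
    out[2] = velocity;
}
```

Using two thresholds means a level that hovers near the trigger point does not produce a burst of repeated beats. When the message goes out over USB, the USB MIDI device class wraps these bytes in its own event packets; that step is left out of the sketch.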

- Parts

A draft of parts includes:

- Bone conduction speaker (model pending)

- STM32L4 Nucleo evaluation board: NUCLEO-L452RE-P

- Nucleo boards are inexpensive Arduino pin-compatible evaluation boards for STM32 microcontrollers (ARM Cortex-M).

See more information here: https://www.st.com/en/evaluation-tools/stm32-nucleo-boards.html

- Debounced pushbutton switch

- T flip-flop IC

- Possible Challenges

The most likely and most daunting challenge is processing the audio well enough to differentiate the types of hits (thumb vs. fingertip, for example) so that they can be mapped to different MIDI voice channels. We hope to overcome this by picking up the upper harmonics of the various hits and using them to tell hits apart when the fundamental alone is not enough. In addition, we may be able to use more audio pickup devices to supplement the vibration speaker we plan to use.
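As a rough illustration of how spectral content might separate two kinds of hits, the sketch below compares the energy below and above a split frequency in an FFT magnitude spectrum and picks a General MIDI percussion key accordingly. It is a deliberately crude two-way classifier, not a worked-out solution to the challenge above: `classify_hit` and `split_hz` are hypothetical, the spectrum is assumed to have been computed already, and real hits would likely need more bands and some tuning. The key numbers 36 (bass drum) and 42 (closed hi-hat) are from the General MIDI percussion map.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* General MIDI percussion key numbers (played on MIDI channel 10). */
#define GM_BASS_DRUM     36u
#define GM_CLOSED_HI_HAT 42u

/*
 * Classify one hit from an FFT magnitude spectrum.
 * mag[k] is the magnitude of bin k, whose center frequency is
 * k * sample_rate_hz / fft_size. n_bins is the number of entries in mag[]
 * (at most fft_size / 2 + 1 for a real-valued input signal).
 */
uint8_t classify_hit(const float *mag, size_t n_bins,
                     float sample_rate_hz, size_t fft_size, float split_hz)
{
    assert(mag != NULL && fft_size > 0 && sample_rate_hz > 0.0f);

    float low = 0.0f;
    float high = 0.0f;
    for (size_t k = 1; k < n_bins; ++k) { /* skip bin 0 (DC offset) */
        float freq = (float)k * sample_rate_hz / (float)fft_size;
        float energy = mag[k] * mag[k];
        if (freq < split_hz) {
            low += energy;
        } else {
            high += energy;
        }
    }
    /* More energy in the upper band suggests a sharper, brighter hit. */
    return (high > low) ? GM_CLOSED_HI_HAT : GM_BASS_DRUM;
}
```

At a 40 kHz sample rate the spectrum covers frequencies up to 20 kHz, so a 1024-point FFT would give bins about 39 Hz apart; whether that resolution is enough to tell a thumb from a fingertip is exactly the open question.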

Source Code

The source code for this project is available on the university GitLab server here: https://gitlab.engr.illinois.edu/lmpower2/beatboi

Please note that you must be logged in to GitLab with your university AD credentials in order to access the project.