Meet the Team Members

| Members | NetID | Class |
|---|---|---|
| Ryan Libiano | libiano2 | ECE110 |
| Amudhini Pandian | ramyaap2 | ECE120 |
| Omar Camarema | ocamar2 | ECE110 |
| Muthu Ganesh Arunachalam | muthuga2 | ECE110 |

Purpose

The purpose of this project is to create a relatively cheap, small-form-factor NDVI camera that can be deployed on high-altitude photographic platforms. It will not only process images locally but also geotag them for future reference. We want the system to have the same ease of use and ergonomics as a GoPro and other point-and-shoot cameras on the market. We ultimately plan to make this system open source so that farmers in relatively poor and isolated areas can create their own NDVI heatmaps of their crops and plants. The system could also be applied to other near-IR uses, such as low-light agricultural spectroscopy, medical uses such as portable cerebral NIRS, and low-budget astronomical spectroscopy. (Wikipedia)

Background

This system is rooted in using modified cameras to detect specific wavelengths of near-IR light (780 nm to 2500 nm). This is especially useful in applications where you need to deduce certain chemical components or extract chemical information, since different compounds reflect different amounts of near-IR light. In plants, chlorophyll absorbs visible light while the cell structures of leaves reflect near-IR light, which means near-IR imaging can be used to check the health of plants from a distance. This suits our application because it lets you probe a bulk of material at a distance without first having to prepare samples (e.g. a large cornfield or a grove of trees). (Wikipedia)

Things become problematic, however, because raw near-IR imaging is not a sensitive technique. (Wikipedia) The images need to be processed to extract useful information, which requires a specific algorithm such as NDVI. NDVI, or Normalized Difference Vegetation Index, takes the difference between the near-IR and visible red reflectance and normalizes it by their sum: NDVI = (NIR - Red) / (NIR + Red), which gives a value between -1 and 1. Healthy vegetation reflects strongly in the near-IR and absorbs red light, so it scores high, and the index can be used to graphically indicate which plants are healthy relative to the plants around them. Through this process, measurements taken with a modified NIR camera can be used to assess whether the subject contains live green vegetation. This type of image processing is well documented online and has been tried and tested by researchers and enthusiasts alike. Rather than using an existing NDVI calculation library, we will use NumPy and python-OpenCV to write a program that computes NDVI from the near-IR and visible channels of an image and creates a colormap of the relative health of plants.
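A minimal NumPy sketch of the NDVI step, for illustration only. It assumes a blue-filtered NoIR camera (the Public Lab "Infragram"-style setup), where the red channel records mostly near-IR and the blue channel records visible light; with a different filter the channel mapping would change. The function names `ndvi`, `ndvi_from_bgr` and `to_uint8` are our own, not part of any library.

```python
import numpy as np


def ndvi(nir: np.ndarray, vis: np.ndarray) -> np.ndarray:
    """Per-pixel NDVI = (NIR - VIS) / (NIR + VIS), in the range [-1, 1]."""
    # Promote to float first so uint8 subtraction cannot wrap around.
    nir = nir.astype(np.float32)
    vis = vis.astype(np.float32)
    total = nir + vis
    out = np.zeros_like(total)
    # Pixels where both channels are 0 stay at NDVI 0 instead of dividing by zero.
    np.divide(nir - vis, total, out=out, where=total > 0)
    return out


def ndvi_from_bgr(frame: np.ndarray) -> np.ndarray:
    """ASSUMPTION: blue-filtered NoIR camera, so the red channel is mostly NIR
    and the blue channel is visible light. OpenCV frames are in BGR order."""
    vis = frame[..., 0]  # blue channel
    nir = frame[..., 2]  # red channel
    return ndvi(nir, vis)


def to_uint8(index: np.ndarray, lo: float = -1.0, hi: float = 1.0) -> np.ndarray:
    """Stretch [lo, hi] linearly onto 0..255; values outside are clipped."""
    stretched = (np.clip(index, lo, hi) - lo) / (hi - lo) * 255.0
    return stretched.astype(np.uint8)
```

The 8-bit result can then be turned into a heatmap with OpenCV, e.g. `cv2.applyColorMap(to_uint8(ndvi_from_bgr(frame)), cv2.COLORMAP_JET)`. Narrowing `lo` and `hi` is one possible knob for the per-deployment calibration mentioned under Challenges.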

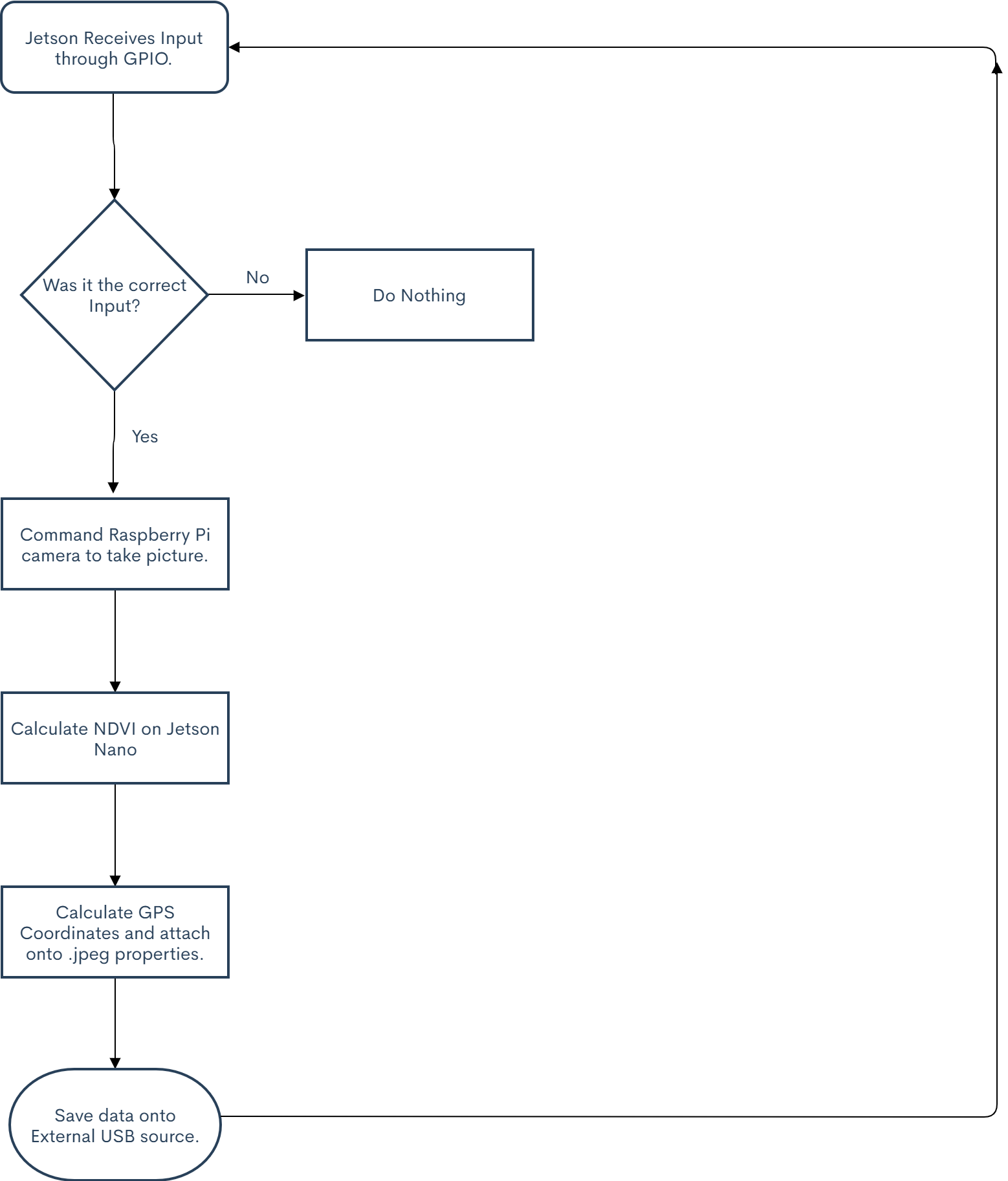

Flow Charts

Systems

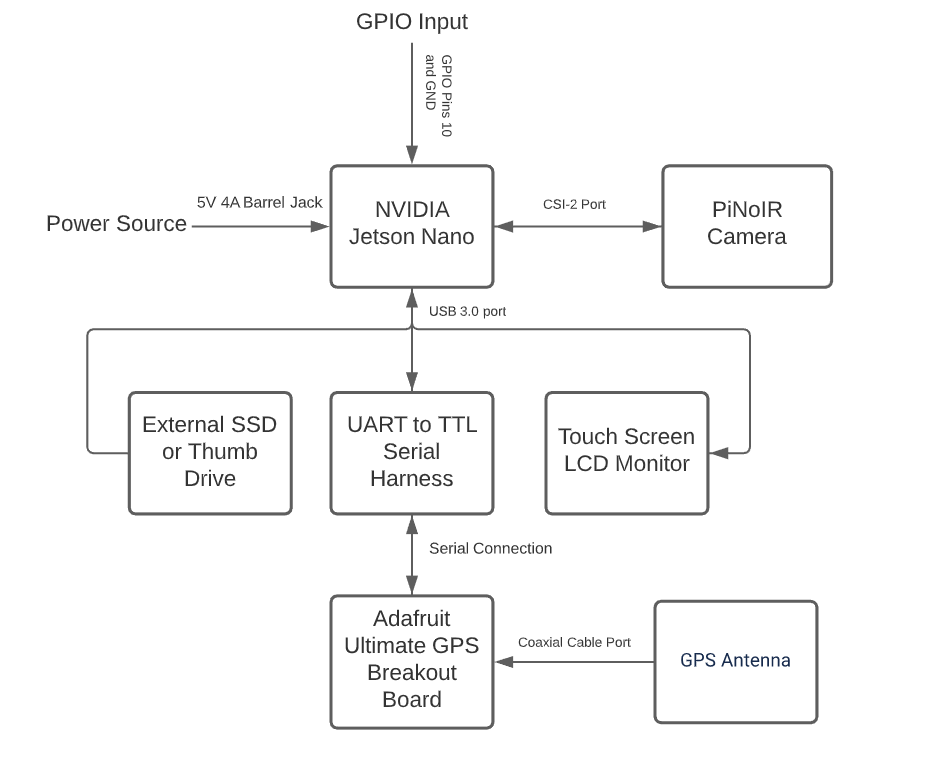

All NDVI processing will be done locally on an NVIDIA Jetson Nano Developer Kit. It will be connected to a single Pi NoIR camera (a Raspberry Pi Camera Module v2 with the IR filter omitted) fitted with a red/blue filter to capture the specific near-IR wavelengths. Using OpenCV, the images will be processed with the NDVI algorithm and cross-referenced against a database in order to overlay a heatmap of plant health on the captured image. While this happens, every image will be geotagged using the Adafruit Ultimate GPS Breakout board and, once analyzed, saved to an external SSD or flash drive over USB. After capture is complete, the data can be transferred to a computer, where the images can be stitched into an orthoimage (this applies when the camera is mounted on a drone or other high-altitude platform). The whole process will be triggered either by a button or by a shutter-control input on the Jetson Nano's GPIO. Ultimately, we want this sensor to be compatible with most commercial drone systems currently on the market.
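To illustrate what "geotagging" amounts to, here is a sketch of converting a GPS position in decimal degrees into the form EXIF uses: three unsigned rationals (degrees, minutes, seconds) plus a separate N/S or E/W reference letter. The helper `to_exif_dms` is hypothetical, and writing the values into the image file is left to whichever EXIF library the project ends up using.

```python
from fractions import Fraction
from typing import Tuple

DMS = Tuple[Fraction, Fraction, Fraction]


def to_exif_dms(decimal_deg: float, is_latitude: bool) -> Tuple[str, DMS]:
    """Convert signed decimal degrees to an EXIF-style (ref, (deg, min, sec)).

    The sign is carried by the reference letter; the rationals are unsigned.
    Seconds are kept to 1/100 of a second.
    """
    if is_latitude:
        ref = "N" if decimal_deg >= 0 else "S"
    else:
        ref = "E" if decimal_deg >= 0 else "W"
    # Work in integer hundredths of a second so rounding can never yield 60 s.
    hundredths = round(abs(decimal_deg) * 3600 * 100)
    degrees, rest = divmod(hundredths, 3600 * 100)
    minutes, sec_hundredths = divmod(rest, 60 * 100)
    return ref, (Fraction(degrees), Fraction(minutes), Fraction(sec_hundredths, 100))
```

For example, a longitude of -88.5 becomes `("W", (88, 30, 0))`. Working in integer hundredths of a second avoids the classic bug where rounding produces "59' 60.00"".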

Parts List

Challenges

We expect to face several challenges during our design and prototyping process. To start, the Adafruit GPS Breakout Board needs to be adapted for use with an NVIDIA Jetson Nano, since its drivers are not natively supported on Ubuntu 18.04 LTS; however, a quick fix is available online. Another problem we may face is calibrating the OpenCV code for the specific ambient IR conditions in Urbana-Champaign, which means that certain values within the NDVI calculation have to be changed every time the system is deployed in order to produce a readable heatmap. Finally, we need to write code that wraps all the processes together, combining the different sensor processes, such as image processing and geotagging, into one command triggered by a physical input. We expect these challenges to slow us down, but we should be able to diagnose them with the help of guides and forums that have solutions.
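One way the "single command" wrapper could be structured is sketched below. Everything here is hypothetical: `CapturePipeline` and its stage names are illustrative, and each stage is injected as a plain callable so the same glue can drive the real camera and GPS on the Jetson or simple fakes on a laptop.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional, Tuple

Fix = Tuple[float, float]  # (latitude, longitude) in decimal degrees


@dataclass
class CapturePipeline:
    """HYPOTHETICAL glue code tying capture, geotagging and processing together."""

    capture: Callable[[], Any]                 # grab one frame
    read_fix: Callable[[], Optional[Fix]]      # latest GPS fix, or None
    process: Callable[[Any], Any]              # e.g. NDVI + colormap
    save: Callable[[Any, Optional[Fix]], str]  # write file, return its path

    def on_trigger(self) -> str:
        """Run one shot end to end and return where the result was saved."""
        frame = self.capture()
        # Read the fix right after capture so the tag matches the shot,
        # before the slower image processing runs.
        fix = self.read_fix()
        result = self.process(frame)
        return self.save(result, fix)
```

On the Jetson, `on_trigger` could be hooked to the button or shutter input, for example through the Jetson.GPIO library's `add_event_detect(pin, GPIO.FALLING, callback=..., bouncetime=...)`. That callback receives the channel number as an argument, so it would need a small wrapper; the pin choice depends on how the hardware is wired.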

Updates

November 23rd 2020

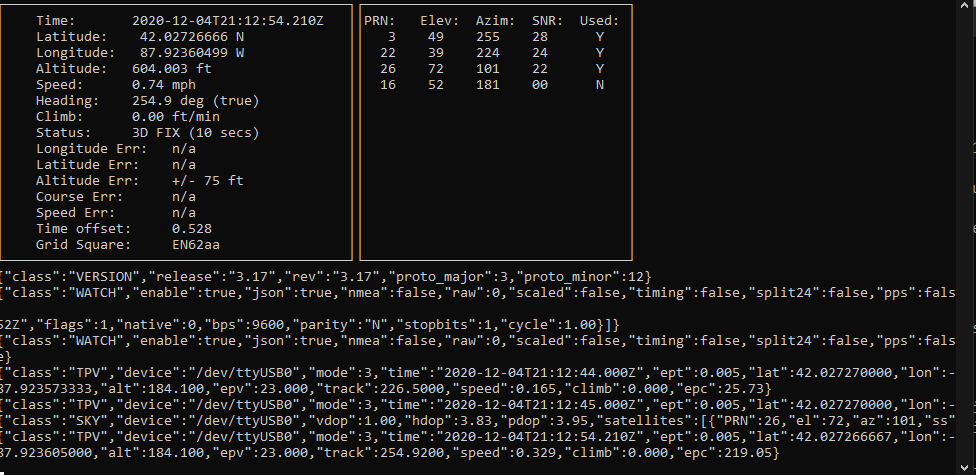

Update: We have now received the parts for the camera and are preparing code to be deployed on a laptop first. In the meantime, some of us on the sensor side of the project are helping the drone side with assembling and automating the drone itself. Our first task is to get the GPS communicating with the Jetson. We connected the TXD and RXD pins on the J50 header to the RX and TX pins (respectively) of the Adafruit Ultimate GPS Breakout Board. After much trial and error, we finally got the GPS to pick up a fix, which is great, although it required a USB-to-TTL converter because the Jetson's UART is a little dodgy. The NDVI image-processing script works and runs on a Raspberry Pi Zero W (at a whopping one 1920x1080 still frame per 120 seconds!). That is encouraging: since the Jetson is far more powerful, it should be able to process images in real time at reasonable resolutions (640x480 for the viewfinder, 1920x1080 for video capture, and hopefully 3280x2464 for still frames). For now, our time will go into porting the script to the Jetson.
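Once the GPS is talking over serial, a fix can be read from its NMEA output. The sketch below validates the NMEA checksum and pulls latitude and longitude out of a GGA sentence, treating fix quality 0 as "no fix". The function names are hypothetical, and it assumes the module is emitting standard GGA sentences (talker `GP` or `GN`).

```python
from typing import Optional, Tuple


def nmea_checksum_ok(sentence: str) -> bool:
    """Check the XOR checksum of the characters between '$' and '*'."""
    sentence = sentence.strip()
    if not sentence.startswith("$") or "*" not in sentence:
        return False
    body, _, given = sentence[1:].partition("*")
    calc = 0
    for ch in body:
        calc ^= ord(ch)
    return given[:2].upper() == f"{calc:02X}"


def _to_decimal(value: str, hemisphere: str) -> float:
    """Convert NMEA ddmm.mmmm / dddmm.mmmm to signed decimal degrees."""
    raw = float(value)
    degrees = int(raw // 100)
    minutes = raw - degrees * 100
    decimal = degrees + minutes / 60
    return -decimal if hemisphere in ("S", "W") else decimal


def parse_gga_fix(sentence: str) -> Optional[Tuple[float, float]]:
    """Return (lat, lon) from a GGA sentence, or None if invalid or no fix."""
    if not nmea_checksum_ok(sentence):
        return None
    fields = sentence.strip()[1:].split("*")[0].split(",")
    # fields[0] is talker + type, e.g. 'GPGGA'; fields[6] is fix quality.
    if not fields[0].endswith("GGA") or len(fields) < 7:
        return None
    if fields[6] in ("", "0") or not fields[2] or not fields[4]:
        return None
    return _to_decimal(fields[2], fields[3]), _to_decimal(fields[4], fields[5])
```

Feeding it would look roughly like reading lines with pyserial, e.g. `serial.Serial("/dev/ttyUSB0", 9600, timeout=1)` followed by `readline().decode("ascii", errors="replace")`. The device path is an assumption for a USB-to-TTL converter, and 9600 baud is the module's default rate.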

November 25th 2020

The GitHub repo is now up and live, though the code still needs more debugging. We considered adding noise reduction via the Python Imaging Library (PIL); however, on the Raspberry Pi 4B+ (4GB model) this is often taxing on performance and hinders the live video view. We have done away with the LANCZOS algorithm and instead accepted the noise coming from background NIR light. It does not ruin the images and gives the plants a nice aura and glow. I have built a new version of OpenCV with CUDA and GStreamer support, since the build that ships with the SDK was faulty. Ultimately, we hope the camera will be working before reading week.
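After rebuilding OpenCV, a quick way to confirm that the build actually picked up GStreamer and CUDA is to inspect the text returned by `cv2.getBuildInformation()`. The parser below is a hypothetical helper and assumes the OpenCV 4.x layout of that report, where the relevant lines read like `GStreamer: YES (1.14.5)` and `NVIDIA CUDA: YES (ver 10.2, ...)`; older layouts may simply report False.

```python
import re
from typing import Dict


def opencv_build_flags(info: str) -> Dict[str, bool]:
    """Report GStreamer/CUDA support from cv2.getBuildInformation() text.

    ASSUMPTION: OpenCV 4.x report layout. A missing line, or any value other
    than YES, is reported as False.
    """
    flags: Dict[str, bool] = {}
    for key in ("GStreamer", "NVIDIA CUDA"):
        # [ \t]* rather than \s* so the match cannot run onto the next line.
        pattern = rf"^[ \t]*{re.escape(key)}:[ \t]*(\w+)"
        match = re.search(pattern, info, re.MULTILINE)
        flags[key] = match is not None and match.group(1).upper() == "YES"
    return flags
```

On the Jetson this would be called as `opencv_build_flags(cv2.getBuildInformation())`. As a second check, `cv2.cuda.getCudaEnabledDeviceCount()` returns 0 when the build has no usable CUDA support.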

Link to GitHub Repo: https://github.com/ryanlibiano2/multispectralcamera

December 3rd 2020

We have just assembled the actual unit, and it is not looking too shabby. Once the code runs end to end, the next stage of development is to build a camera-style enclosure. Since we are off campus and access to 3D printers is scarce, we are going to make the enclosure out of a cardboard box instead. In other news, there seems to be a recurring bug with OpenCV when we try to hand off frames from GStreamer to OpenCV. The next things I may try are (A) reinstalling the JetPack SDK or (B) rebuilding all of the code's dependencies. Hopefully we can also add CUDA support for accelerated processing. For the time being, however, the code is giving us trouble: it may need to be rewritten to run on the Raspberry Pi (which we hope to avoid, since it would undercut the scope of the project), or a rebuild of the OS or its dependencies may get it past its bugs.
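For reference, the GStreamer-to-OpenCV handoff for a CSI camera on a Jetson is commonly done with a pipeline string like the one built below (the pattern popularized by JetsonHacks). The helper name and defaults are our own assumptions; the key point is that frames must leave NVMM memory via `nvvidconv` and be converted from BGRx to 3-channel BGR before reaching `appsink`, or OpenCV may fail to read them.

```python
def csi_camera_pipeline(
    capture_width: int = 1920,
    capture_height: int = 1080,
    framerate: int = 30,
    output_width: int = 640,
    output_height: int = 480,
    flip_method: int = 0,
) -> str:
    """Build a GStreamer pipeline string for a CSI camera on a Jetson Nano.

    nvarguscamerasrc captures into NVMM (GPU) memory, nvvidconv scales and
    copies frames out as BGRx, and videoconvert drops the padding byte so
    the appsink delivers the 3-channel BGR frames OpenCV expects.
    """
    return (
        "nvarguscamerasrc ! "
        f"video/x-raw(memory:NVMM), width=(int){capture_width}, "
        f"height=(int){capture_height}, format=(string)NV12, "
        f"framerate=(fraction){framerate}/1 ! "
        f"nvvidconv flip-method={flip_method} ! "
        f"video/x-raw, width=(int){output_width}, height=(int){output_height}, "
        "format=(string)BGRx ! "
        "videoconvert ! "
        "video/x-raw, format=(string)BGR ! appsink"
    )
```

It would be opened with `cv2.VideoCapture(csi_camera_pipeline(), cv2.CAP_GSTREAMER)`. If `isOpened()` returns False, a likely cause is an OpenCV build without GStreamer support.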

References

https://publiclab.org/wiki/ndvi

https://hackaday.com/tag/geotagging/

https://www.instructables.com/id/DIY-Plant-Inspection-Gardening-Drone-Folding-Trico/

https://publiclab.org/notes/it13/11-15-2019/how-to-calculate-ndvi-index-in-python

http://ceholden.github.io/open-geo-tutorial/python/chapter_2_indices.html