Group:

Alice Getmanchuk, NetID: aliceg3, ECE120

Jerry Balan, NetID: agbalan2, ECE120

Project Proposal for the Small Autonomous Vehicle with Accelerated Graphical Evaluation (SAVAGE)

Introduction

Statement of Purpose

We are making a small autonomous car that uses computer vision and various sensors to detect its surroundings and adapt its driving to avoid obstacles. We will make the car remote-controlled at first, then develop and extend its autonomous capabilities as time allows.

Background Research

Our project idea stems from our interest in interfacing hardware with software. Autonomous vehicles are gaining more of a public spotlight as companies like Tesla and Waymo (the self-driving technology company owned by Google's parent company, Alphabet) grow the industry. These companies and the news surrounding them inspired us to pursue a project involving AI or autonomy. We also considered building a quadcopter or remote-controlled drone, but chose the car because it is less risky to test and lets us explore neural networks. We also aim to keep the car in as small a form factor as possible without sacrificing performance.

Design Details

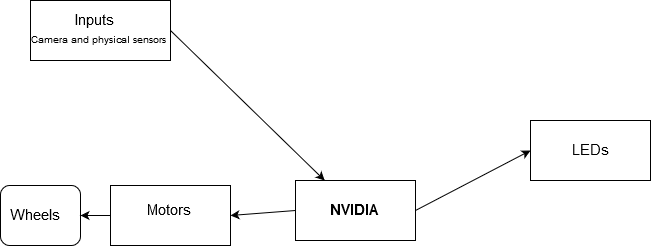

Block Diagram / Flow Chart

System Overview

We are using the Nvidia Jetson Nano Dev Kit to interface between the software and hardware. The Jetson Nano runs Linux for Tegra (L4T), a customized version of Ubuntu Linux, which we can use to collect data from the camera and any other sensors we add, analyze it, and send the appropriate signals to the wheel motors so the car avoids obstacles or stays on a path. We will analyze the data using computer vision. Our current plan is to implement the computer vision in Python, processing the images with the OpenCV and TensorFlow libraries. The car will also carry onboard power from a battery pack; however, we do not expect it to last long, because we are keeping the physical size of the project as small as possible.
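To make the "analyze the image, then signal the wheel motors" step concrete, here is a minimal sketch of one possible control step. It is not our final approach: it swaps in a simple hand-written line-following heuristic in place of the planned OpenCV/TensorFlow model. It assumes a differential-drive car (one motor per side) with motor speeds normalized to [-1, 1], and a grayscale frame given as a list of pixel rows (on the Jetson this would be a NumPy array from OpenCV). The function names, threshold, and gain values are hypothetical.

```python
"""Hypothetical sketch: locate a dark guide line in a grayscale frame and
turn its horizontal offset into left/right motor commands."""

from typing import List, Optional, Tuple

Frame = List[List[int]]  # rows of 0-255 grayscale pixel values (assumed format)


def line_offset(frame: Frame, threshold: int = 60, rows: int = 3) -> Optional[float]:
    """Return the line's horizontal offset in [-1, 1] (0 = centered, positive =
    right of center), using only the bottom `rows` rows; None if no line is seen."""
    width = len(frame[0])
    if width < 2:
        raise ValueError("frame must be at least 2 pixels wide")
    # The bottom rows are closest to the car, so they matter most for steering.
    dark_xs = [x for row in frame[-rows:] for x, value in enumerate(row) if value < threshold]
    if not dark_xs:
        return None  # line not visible in this frame
    centroid = sum(dark_xs) / len(dark_xs)
    center = (width - 1) / 2
    return (centroid - center) / center


def motor_commands(offset: Optional[float], base_speed: float = 0.5,
                   gain: float = 0.4) -> Tuple[float, float]:
    """Proportional steering: speed up the outer wheel and slow the inner one.
    Stops the car when the line is lost. Returns (left, right) in [-1, 1]."""
    if offset is None:
        return 0.0, 0.0
    turn = gain * offset
    left = max(-1.0, min(1.0, base_speed + turn))
    right = max(-1.0, min(1.0, base_speed - turn))
    return left, right
```

For example, a 9-pixel-wide frame with the line in column 6 gives an offset of (6 - 4) / 4 = 0.5, so the left wheel runs faster than the right and the car turns right, toward the line. A trained network would replace `line_offset` with a learned steering estimate, but the step from "estimate" to "wheel speeds" could stay the same.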

Parts

Nvidia Jetson Nano Dev Kit

USB Type-A to Type-B cable

USB Wi-Fi adapter

MicroSD card

Small chassis (GoPiGo frame potentially)

Motors

Wheels

Battery pack

Raspberry Pi Camera Module V2
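On the Jetson Nano, a CSI camera such as the Raspberry Pi Camera Module V2 is typically read through NVIDIA's `nvarguscamerasrc` GStreamer element rather than as a plain USB webcam. The sketch below builds such a pipeline string and opens it with OpenCV's GStreamer backend. It assumes the camera is on CSI port 0 and that OpenCV was built with GStreamer support (as the OpenCV bundled with JetPack usually is); the function names and default resolutions are our own choices, and element property names may vary between L4T releases.

```python
"""Hypothetical sketch: read the Raspberry Pi Camera Module V2 on a Jetson Nano
through a GStreamer pipeline that OpenCV can open."""


def csi_pipeline(sensor_id=0, capture_width=1280, capture_height=720,
                 display_width=640, display_height=360, framerate=30,
                 flip_method=0):
    """Build a pipeline: capture from the CSI sensor, scale and convert on the
    GPU with nvvidconv, then hand BGR frames (OpenCV's format) to an appsink."""
    return (
        f"nvarguscamerasrc sensor-id={sensor_id} ! "
        f"video/x-raw(memory:NVMM), width=(int){capture_width}, "
        f"height=(int){capture_height}, framerate=(fraction){framerate}/1 ! "
        f"nvvidconv flip-method={flip_method} ! "
        f"video/x-raw, width=(int){display_width}, height=(int){display_height}, "
        "format=(string)BGRx ! "
        "videoconvert ! video/x-raw, format=(string)BGR ! appsink"
    )


def read_one_frame(pipeline):
    """Open the pipeline with OpenCV's GStreamer backend and grab one BGR frame."""
    # Imported here so the pipeline builder above works without OpenCV installed.
    import cv2

    cap = cv2.VideoCapture(pipeline, cv2.CAP_GSTREAMER)
    try:
        if not cap.isOpened():
            raise RuntimeError("could not open the CSI camera pipeline")
        ok, frame = cap.read()
        if not ok:
            raise RuntimeError("camera opened but returned no frame")
        return frame
    finally:
        cap.release()
```

On the Nano itself, `read_one_frame(csi_pipeline())` should return a 360 x 640 x 3 array with the defaults above, which could then be converted to grayscale and passed to the vision code. Scaling down in `nvvidconv` keeps the per-frame work on the CPU small, which matters on a board this size.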

Possible Challenges

Programming the neural network to make this car autonomous will be very difficult unless we significantly narrow the scope and variability of the environment. If time permits, expanding the scope of the car's autonomy is a high priority.

Creating a unique project may also be a challenge. Given the ECE110 car challenge and the many existing autonomous car projects, it may be difficult to come up with something that is both attainable with our current knowledge and resources and innovative. We distinguish our project from the ECE110 final car challenge by using computer vision and AI, rather than proximity sensors, to automate the driving. This also gives us more flexibility in the car's behavior, since software is easier to change than hardware.

References

[1] "DeepPiCar — Part 1: How to Build a Deep Learning, Self Driving Robotic Car on a Shoestring Budget", Medium, 2019. [Online]. Available: https://towardsdatascience.com/deeppicar-part-1-102e03c83f2c. [Accessed: 20-Sep-2019].

[2] "feicccccccc/donkeycar", GitHub, 2019. [Online]. Available: https://github.com/feicccccccc/donkeycar. [Accessed: 21-Sep-2019].

[3] "hamuchiwa/AutoRCCar", GitHub, 2019. [Online]. Available: https://github.com/hamuchiwa/AutoRCCar. [Accessed: 20-Sep-2019].

[4] "Python Programming Tutorials", Pythonprogramming.net, 2019. [Online]. Available: https://pythonprogramming.net/robotics-raspberry-pi-tutorial-gopigo-introduction/. [Accessed: 20-Sep-2019].

[5] "Self-driving RC car: build using Raspberry Pi with TensorFlow & OpenCV open-source software", The MagPi Magazine, 2019. [Online]. Available: https://www.raspberrypi.org/magpi/self-driving-rc-car/. [Accessed: 20-Sep-2019].