Programming Project #2: Image Alignment

Images of the Russian Empire: Colorizing the Prokudin-Gorskii

photo collection

CS498: Computational Photography

Due Date: 11:59pm on Monday, Sept. 20, 2010

Background

Sergei Mikhailovich

Prokudin-Gorskii (1863-1944) [Сергей

Михайлович

Прокудин-Горский,

to his Russian friends] was a man well ahead of his time. Convinced, as early

as 1907, that color photography was the wave of the future, he won Tzar's

special permission to travel across the vast Russian Empire and take color

photographs of everything he saw. And he really photographed everything:

people, buildings, landscapes, railroads, bridges... thousands of color

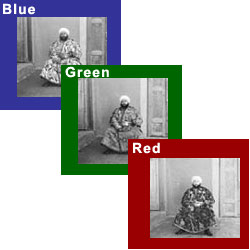

pictures! His idea was simple: record three exposures of every scene onto a

glass plate using a red, a green, and a blue filter. Never mind that there was

no way to print color photographs until much later -- he envisioned special

projectors to be installed in "multimedia" classrooms all across

Russia where the children would be able to learn about their vast country.

Alas, his plans never materialized: he left Russia in 1918, right after the

revolution, never to return again. Luckily, his RGB glass plate negatives,

capturing the last years of the Russian Empire, survived and were purchased in

1948 by the Library of Congress. The LoC has recently digitized the negatives

and made them available on-line.

Overview

In this project, you will apply the concepts of template matching and

coarse-to-fine search, which are key techniques for object recognition, tracking,

optical flow, and a variety of other useful processes and applications. Here, your

goal is to take the digitized Prokudin-Gorskii glass

plate images and, using image processing techniques, automatically produce a

color image with as few visual artifacts as possible. In order to do this, you

will need to extract the three color channel images, place them on top of each

other, and align them so that they form a single RGB color image. Some starter

code is available here;

do not feel compelled to use it. We will assume that a simple x,y translation

model is sufficient for proper alignment. However, the full-size glass plate

images are very large, so your alignment procedure will need to be relatively

fast and efficient.

Details

A few of the digitized glass plate images (both hi-res and low-res versions)

will be placed in the following zip file (note that the filter order from top

to bottom is BGR, not RGB!): data.

Your program will take a glass plate image as input and produce a single color

image as output. The program should divide the image into three equal parts and

align the second and the third parts (G and R) to the first (B). For each

image, you will need to print the (x,y) displacement vector that was used to

align the parts.

A few of the digitized glass plate images (both hi-res and low-res versions)

will be placed in the following zip file (note that the filter order from top

to bottom is BGR, not RGB!): data.

Your program will take a glass plate image as input and produce a single color

image as output. The program should divide the image into three equal parts and

align the second and the third parts (G and R) to the first (B). For each

image, you will need to print the (x,y) displacement vector that was used to

align the parts.

The easiest way to align the parts is to exhaustively search over a window

of possible displacements (say [-15,15] pixels), score each one using some

image matching metric, and take the displacement with the best score. There is

a number of possible metrics that one could use to score how well the images

match. The simplest one is just the L2 norm also known as the Sum of Squared

Differences (SSD) distance which is simply sum(sum((image1-image2).^2)).

Another is normalized cross-correlation (NCC), the dot product of the two vectors after normalizing them to be zero-mean, unit-deviation (see slides for Sept 2). Note

that in this particular case, the images to be matched do not actually have the

same brightness values (they are different color channels), so a cleverer

metric might work better.

Exhaustive search will become prohibitively expensive if the pixel

displacement is too large (which will be the case for high-resolution glass

plate scans). In this case, you will need to implement a faster search

procedure such as an image pyramid. An image pyramid represents the image at

multiple scales (usually scaled by a factor of 2) and the processing is done

sequentially starting from the coarsest scale (smallest image) and going down

the pyramid, updating your estimate as you go. It is very easy to implement by

adding recursive calls to your original single-scale implementation.

Your job will be to implement an algorithm that, given a 3-channel image,

produces a color image as output. Implement a simple single-scale version

first, using for loops, searching over a user-specified window of

displacements. The above-linked skeleton Matlab code will help you

get started. Next, add a coarse-to-fine pyramid speedup to handle large images.

Bells & Whistles (Extra Points)

Although the color images resulting from this automatic procedure will often

look strikingly real, they are still a far cry from the manually restored

versions available on the LoC website and from other professional

photographers. Of course, each such photograph takes days of painstaking

Photoshop work, adjusting the color levels, removing the blemishes, adding contrast,

etc. Can we make some of these adjustments automatically, without the human in

the loop? Feel free to come up with your own approaches or talk to me about

your ideas. There is no right answer here -- just try out things and see what

works.

Idea 1 (10 pts): The borders of the photograph will have strange colors

since the three channels won't exactly align. See if you can devise an

automatic way of cropping the border to get rid of the bad stuff. See an example

on the bulletin board.

One possible

idea is that the information in the good parts of the image generally agrees

across the color channels, whereas at borders it does not.

Idea 2 (10 pts): Try to automatically adjust the colors to make the pictures more vivid or true.

Try out some of the color spaces and correction methods discussed in the "Color and Lighting" lectures.

Align with FFT (20 pts): Remember that SSD can be performed with filtering operations (expand the quadratic). Thus, for large templates, you may be able to compute SSD more efficiently using the Fourier transform (discrete fft is nlogn). Write code to find the minimum alignment under SSD using the fast Fourier transform (FFT). For one large example, compare the run-time to your coarse-to-fine search runtime. Note that you should take care to provide sufficient padding (as an argument to fft2 in Matlab) and note that if you filter an image (im) with a filter (fil) using fft2/ifft2 with padding, the output will be shifted by half of the filter width.

Deliverables

To turn in your assignment, place your index.html file and any supporting media in your project directory: netid.projects.cs.illinois.edu/cs498dwh/proj2/index.html, where "netid" is your netid (e.g., dhoiem). Also, e-mail me the zipped code in "netid_proj2.zip" and include a link to the project page in the text. See project instructions for details. In the e-mail, tell me how many points you think you should get for the core project and any bells and whistles.

The web page should contain:

- A brief description of the problem and how you aligned the images using each method (this could be just a few sentences). Be sure to mention any special processing (what kind of distance is used, any cropping, filtering, etc.).

-

The result of your algorithm on all of our example

images, as well as one or two of your own choosing. If your algorithm

failed to align any image, provide a brief explanation of why. Display the alignments with the images (e.g., "g=[20 -5] r=[32 7]").

- For one image, show a close-up view of one part of the image (e.g., a part with an edge) so that we can see how good the alignment is.

- Any bells & whistles you choose to add.

Scoring

The basic assignment is worth 100 points, as follows:

- 50 points

for a single-scale implementation with successful results on low-res

images.

- 40 points for a multiscale pyramid version that works on the

large images.

- 10 points for discussion of method and difficulties and clarity.

Additionally, you could earn up to 40 points for the bells and whistles explicitly mentioned above and up to 10 points for extensions that you come up with on your own (check with prof first).

Final Advice

- Useful Matlab functions: circshift, imresize or imfilter

- Try to find one set of parameters that works well overall. Don't worry about tweaking to get each individual example perfect.

- The borders can be pesky, so you might want to ignore them when evaluating the alignment.

- Save the results as jpegs with limited resolution to save space.

Acknowledgements

Many thanks to Alyosha Efros for designing this project, used here with permission.

A few of the digitized glass plate images (both hi-res and low-res versions)

will be placed in the following zip file (note that the filter order from top

to bottom is BGR, not RGB!): data.

Your program will take a glass plate image as input and produce a single color

image as output. The program should divide the image into three equal parts and

align the second and the third parts (G and R) to the first (B). For each

image, you will need to print the (x,y) displacement vector that was used to

align the parts.

A few of the digitized glass plate images (both hi-res and low-res versions)

will be placed in the following zip file (note that the filter order from top

to bottom is BGR, not RGB!): data.

Your program will take a glass plate image as input and produce a single color

image as output. The program should divide the image into three equal parts and

align the second and the third parts (G and R) to the first (B). For each

image, you will need to print the (x,y) displacement vector that was used to

align the parts.