|

CS440/ECE448 Fall 2025MP 8: HMM Spelling Correction 2Due date: Friday November 7th, 11:59pm |

For this MP, you will improve the spelling correction HMM from MP7 using bigram (2-character) context.

Basic instructions are the same as in previous MPs:

In this MP, you will improve your HMM-based spelling corrector from MP7.

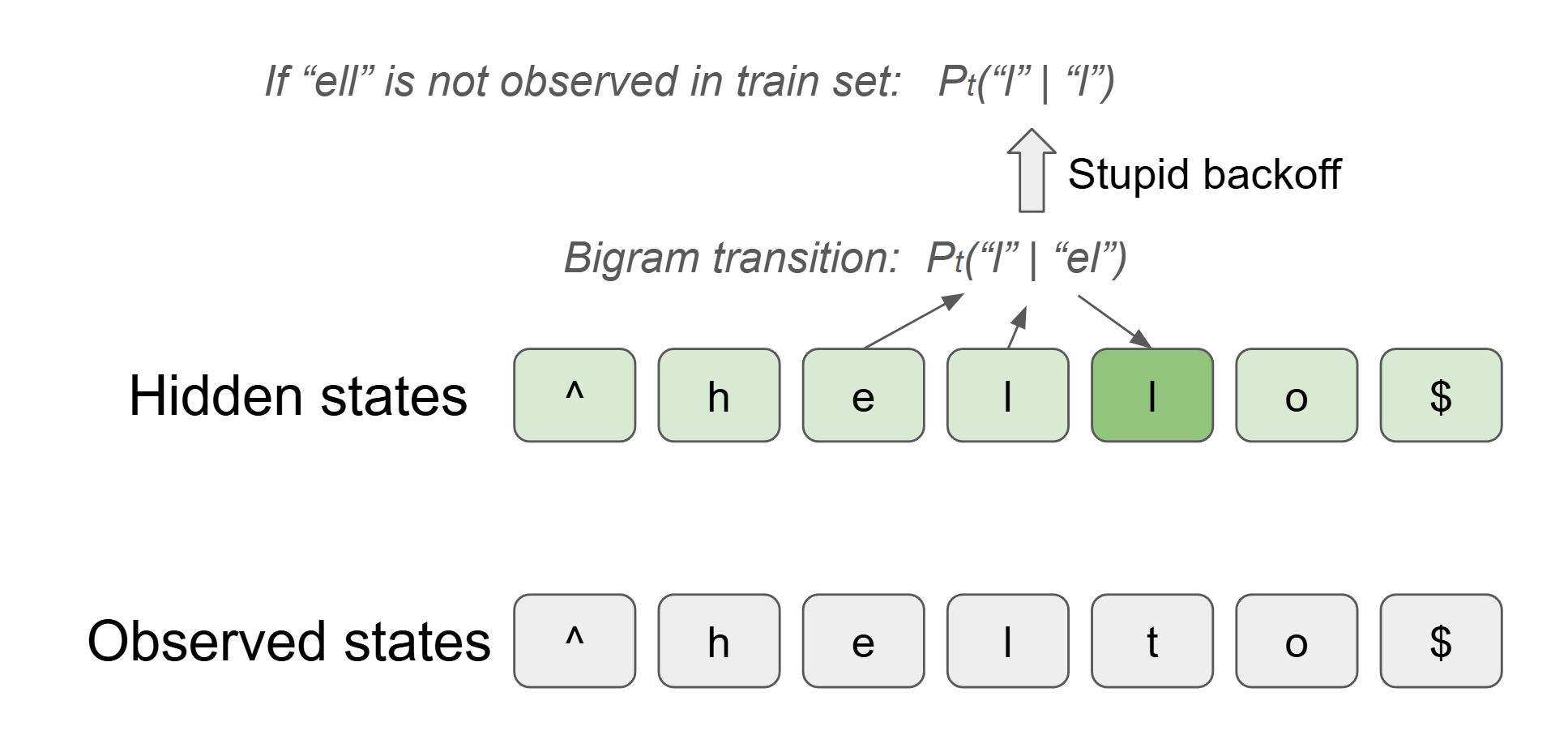

The observed states and hidden state remain the same as in MP7: each state is a single character in the possibly incorrect / ground-truth word, respectively.

However, you will now use bigram context to compute transition probabilities. That is, the probability of the next hidden character will depend on the previous two hidden characters, rather than just the previous one. This means that your transition probabilities will be of the form: P(next hidden char | current hidden bigram). (Note: this is also called `second order HMM` in literature.)

One important functionality you need to implement is Stupid Backoff, which handles the sparsity of bigrams. When a bigram is not observed enough in the training data, you should fall back to the unigram probability of the last character in the bigram. For example, suppose that the bigram "el" is only observed a few times before the target char "l" in the training set, i.e. P( "l" | "el" ) is less than bigram_count_threshold). Then you should use the unigram transition probability of P( "l" | "l" ), with a backoff penalty. You should also use the unigram transition probabilities for the first character of the word ("^hello$" -> "h") where you only have one character of context ("^"). See the section on Viterbi_2 below for more details.

The provided script for MP8 (mp8.py) reads data from two files (--train and --test). Your viterbi_2() function (in viterbi_2.py) will be given these data, where it calls the training_bigram() function to compute the parameters of your HMM from the training data. It will run the Viterbi algorithm on the test data and return the predicted correct spellings. Note: You are REQUIRED to implement the `viterbi_stepforward_bigram()` function, which computes a single column of the Viterbi trellis. This function will be called by your `viterbi_2()` function. We will test the `viterbi_stepforward_bigram()` function separately.

The provided script will compute the character error rate (CER) against the ground-truth answer and your HMM's predictions. CER is the sum of inserted, deleted, and substituted characters there are in a word divided by the number of characters in the word, where the lower is better. The autograder will show your CER and the passing threshold for each test case.

You will need to fill in three functions in viterbi_2.py. Most of them share the core structure with viterbi_1.py, so you can refer to your MP7 code as needed.

The code package (and data) for the MP (template.zip) contains these code files:

The code package contains the following training and development data:

python3 mp8.py --train data/tiny_typos_test.txt --test data/tiny_typos_train.txt

The program will run the algorithm and report one metric:

You will be submitting one file to Gradescope: viterbi_2.py. Each of these submit points times out after 10 minutes. Bear in mind that your laptop may be faster than the processor on Gradescope.

The Gradescope autograder will run your code on the development dataset from the supplied template and also a separate (unseen) set of data from the same corpus. In addition, your code will be tested on one or more hidden datasets that are not available to you, which may have different set of characters from the ones provided to you.

Do NOT hardcode any of your important computations, such as transition probabilities and emission probabilities, character set, etc.

This section is identical to the corresponding section for MP 7.

The following is an example of the set of possible hidden states in your HMM, corresponding to the intended (correct) characters in a word. Remember: you should not hardcode anything about this character set, because we will test your code on other datasets with different alphabets or symbols.

The provided code converts all words to lowercase when it reads in each sentence. It also adds two dummy words at the ends of the sentnce: ^ (START) and $ (END). These tags are just for standardization; they will not be considered in accuracy computations.

Since every word begins with ^, the initial probabilities for your HMM can have a very simple form, but you should not hardcode them into your program. Your code also needs to learn the transition probabilities (between correct characters) and the emission probabilities (mapping each correct character to a possibly mistyped observed character). The transitions from ^ encode the likelihood of starting a word with each possible first letter.

Most of the structure of Viterbi_2 is the same as Viterbi_1 from MP7. However, we have a different formulation for the transition probabilities.

MP7 (unigram transition):

MP8 (bigram transition + stupid backoff).