In this assignment you will explore some fundamental concepts in machine learning. Namely, you will fit functions to data by minimizing a loss function. You will see a few different ways to compute such functions: analytically, with gradient descent, and with stochastic gradient descent. Using the same very simple implementation of gradient descent, you will do linear regression, polynomial regression, inverse kinematics for a planar robot arm, and classification via logistic regression.

This assignment is structured more like a tutorial than an assignment. To get full credit you just need to pass all tests in tests.py, and we highly recommend you work incrementally by following the notebook provided in mp9.ipynb. All instructions are in this notebook as well as in comments in mp9.py.

To get started on this assignment, download the template code. The template contains the following files and directories:

- `mp9.py`: your main code goes here; this is the ONLY FILE you need to submit.
- `tests.py`: the tests in here (which are also called in the notebook) are identical to the ones in the autograder. You SHOULD NOT need to submit to Gradescope to test your code.
- `mp9.ipynb`: you should complete the assignment by working through the instructions in this Python notebook. There is lots of code in this notebook to help you along.
- `data/`: this folder contains some data for the last part of the assignment.
- `planar_arm.py`: some utilities for creating and drawing a planar robot arm for use in the inverse kinematics portion of the assignment.
- `main.py`: you can ignore this code if you work with the notebook, but it provides some utilities for running your code and generating nice plots (once your code works). There are some examples of how to run `main.py` in the notebook; otherwise, you can find all relevant parameters by typing `python3 main.py --help`.

Notes:

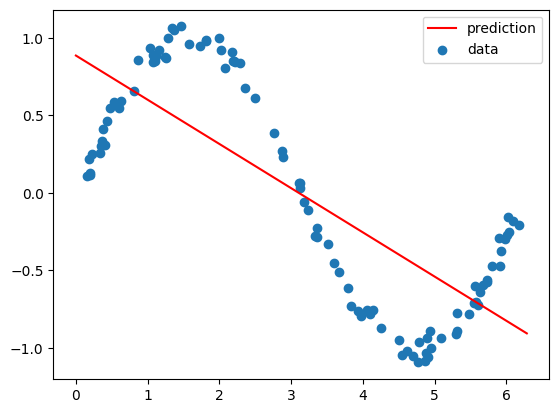

In this part of the assignment you will set up the necessary code for doing linear regression. Namely, you will implement:

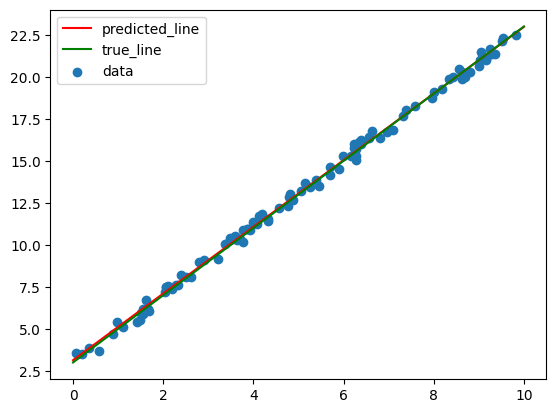

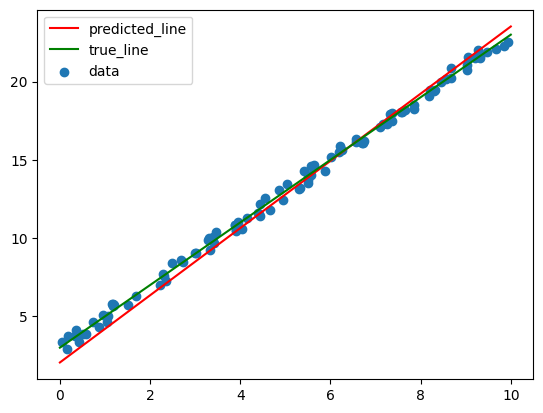
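A minimal sketch of the pieces a linear regression setup typically needs, assuming NumPy and a design matrix whose last column is all ones so the model can learn an intercept. The names `add_bias`, `linear_predict`, and `mse_loss` are hypothetical and need not match the functions or signatures that `mp9.py` asks for.

```python
import numpy as np

def add_bias(x):
    """Hypothetical helper: append a column of ones for the intercept.
    x has shape (n,) or (n, d); the result has shape (n, d + 1)."""
    x = np.asarray(x, dtype=float)
    if x.ndim == 1:
        x = x[:, None]
    return np.hstack([x, np.ones((x.shape[0], 1))])

def linear_predict(X, w):
    """Linear model: one prediction per row of the feature matrix X."""
    return X @ w

def mse_loss(X, y, w):
    """Mean squared error between the model's predictions and the targets y."""
    residual = linear_predict(X, w) - y
    return np.mean(residual ** 2)

# Toy data lying exactly on the line y = 2x + 1 (made up for illustration).
x = np.linspace(0.0, 1.0, 5)
y = 2.0 * x + 1.0
X = add_bias(x)
print(mse_loss(X, y, np.array([2.0, 1.0])))  # prints 0.0 (exact parameters)
print(mse_loss(X, y, np.array([0.0, 0.0])))  # prints 4.5 (all-zero parameters)
```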

Now you will implement analytical linear regression, which computes the parameters that minimize the mean squared error (MSE) loss function:

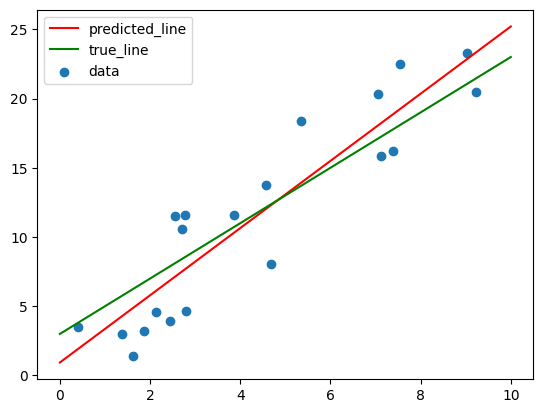
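For the MSE loss, the minimizing parameters have a closed form given by the normal equations `X^T X w = X^T y`, where `X` is the design matrix and `y` the vector of targets. A minimal NumPy sketch, assuming `X^T X` is invertible; `fit_analytic` and `add_bias` are hypothetical names, and `np.linalg.lstsq` is a numerically safer alternative that solves the same least-squares problem.

```python
import numpy as np

def add_bias(x):
    # Hypothetical helper: feature columns followed by a column of ones.
    x = np.asarray(x, dtype=float).reshape(len(x), -1)
    return np.hstack([x, np.ones((x.shape[0], 1))])

def fit_analytic(X, y):
    """Minimize the MSE in closed form by solving X^T X w = X^T y.
    Assumes X^T X is invertible (linearly independent columns)."""
    return np.linalg.solve(X.T @ X, X.T @ y)

# Synthetic noisy line y = 3x - 0.5 (made up for illustration).
rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=100)
y = 3.0 * x - 0.5 + rng.normal(scale=0.1, size=100)
w = fit_analytic(add_bias(x), y)
print(w)  # slope and intercept close to 3.0 and -0.5
```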

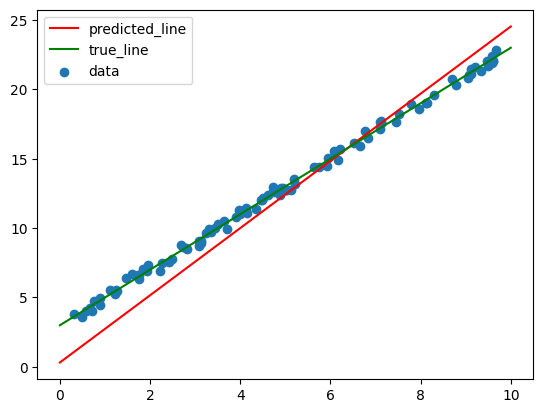

Now you will implement a very simple version of the gradient descent algorithm which, given a function that computes the gradient of the loss with respect to the parameters, repeatedly updates the parameters in the direction opposite to that gradient.
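A minimal sketch of such a loop, assuming NumPy, a fixed learning rate, and a fixed number of iterations with no convergence check. The names `gradient_descent` and `mse_gradient` and their default values are illustrative assumptions; the gradient used here, `(2/n) X^T (Xw - y)`, is the derivative of the mean squared error for a linear model.

```python
import numpy as np

def gradient_descent(grad_fn, w0, learning_rate=0.1, num_iters=1000):
    """Repeatedly step against the gradient: w <- w - lr * grad_fn(w)."""
    w = np.array(w0, dtype=float)
    for _ in range(num_iters):
        w = w - learning_rate * grad_fn(w)
    return w

def mse_gradient(X, y, w):
    # Gradient of mean((X w - y)^2) with respect to w.
    return 2.0 / X.shape[0] * X.T @ (X @ w - y)

# Noise-free data on y = 2x + 1; the design matrix has a bias column of ones.
x = np.linspace(-1.0, 1.0, 50)
X = np.column_stack([x, np.ones_like(x)])
y = 2.0 * x + 1.0
w = gradient_descent(lambda params: mse_gradient(X, y, params), w0=np.zeros(2))
print(w)  # approaches [2.0, 1.0]
```

Because the loop only needs a callable that returns a gradient, the same function can later be reused with other losses (for example a logistic loss) without modification.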

Next you will implement stochastic gradient descent, a variation of the previous algorithm that goes through random subsets (batches) of the data in a random order and updates the parameters after each one, instead of using the entire dataset at once to estimate the gradient.
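A minimal mini-batch sketch, assuming NumPy: every epoch draws a fresh random permutation of the data and takes one gradient step per batch, estimating the gradient from that batch alone. The function name, batch size, learning rate, and epoch count are illustrative assumptions, not the values the tests expect.

```python
import numpy as np

def stochastic_gradient_descent(grad_fn, X, y, w0, learning_rate=0.05,
                                batch_size=10, num_epochs=200, seed=0):
    """Mini-batch SGD: shuffle the data each epoch, then update w once per
    batch using only that batch's rows to estimate the gradient."""
    rng = np.random.default_rng(seed)
    w = np.array(w0, dtype=float)
    n = X.shape[0]
    for _ in range(num_epochs):
        order = rng.permutation(n)  # new random ordering of the rows
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]
            w = w - learning_rate * grad_fn(X[idx], y[idx], w)
    return w

def mse_gradient(X, y, w):
    # Gradient of mean((X w - y)^2) over the rows that were passed in.
    return 2.0 / X.shape[0] * X.T @ (X @ w - y)

x = np.linspace(-1.0, 1.0, 100)
X = np.column_stack([x, np.ones_like(x)])
y = 2.0 * x + 1.0
w = stochastic_gradient_descent(mse_gradient, X, y, w0=np.zeros(2))
print(w)  # close to [2.0, 1.0]
```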

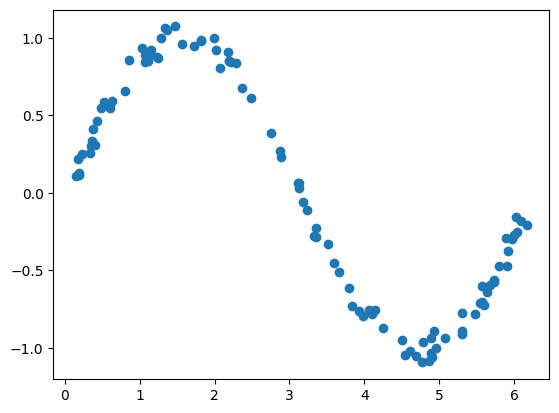

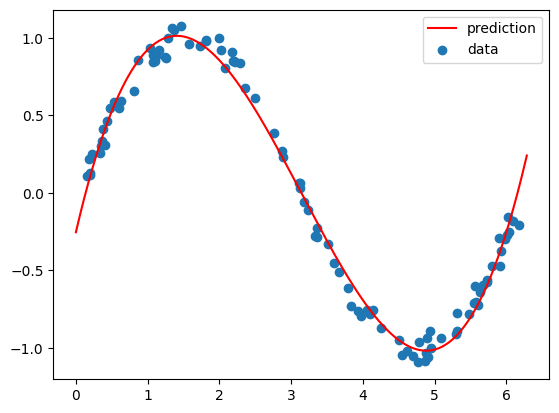

Now you will see how, by transforming the input features into a new feature space (a polynomial function of the input), you can reuse your previous linear regression code to fit a function to sine data.
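The sketch below, assuming NumPy, maps each scalar input to its powers `x^degree, ..., x, 1` and then solves an ordinary least-squares problem on those features. `polynomial_features` and `fit_analytic` are hypothetical names, and the degrees tried are arbitrary; the model stays linear in its parameters even though it is nonlinear in `x`, which is why the linear regression code still applies.

```python
import numpy as np

def polynomial_features(x, degree):
    """Map scalar inputs to [x^degree, ..., x^1, x^0]; x^0 acts as the bias."""
    x = np.asarray(x, dtype=float)
    return np.column_stack([x ** p for p in range(degree, -1, -1)])

def fit_analytic(X, y):
    # Least-squares solution of X w ~= y (the same MSE objective as before).
    return np.linalg.lstsq(X, y, rcond=None)[0]

x = np.linspace(0.0, 2.0 * np.pi, 200)
y = np.sin(x)
mse_by_degree = {}
for degree in (1, 3, 5):
    X = polynomial_features(x, degree)
    w = fit_analytic(X, y)
    mse_by_degree[degree] = np.mean((X @ w - y) ** 2)
    print(degree, mse_by_degree[degree])
```

A straight line cannot follow a full period of the sine wave, while the higher-degree features reduce the training error substantially.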

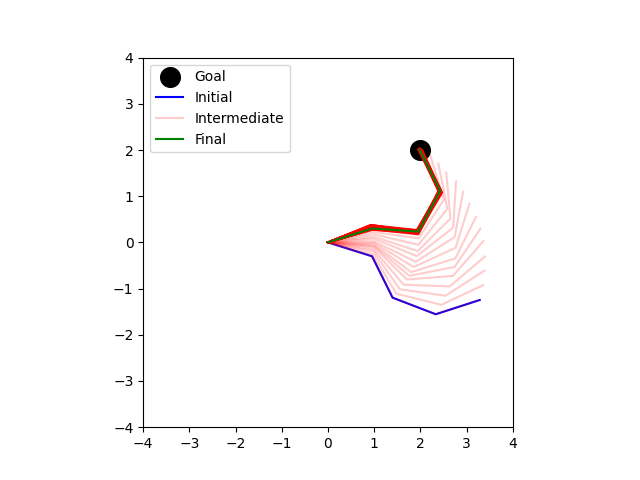

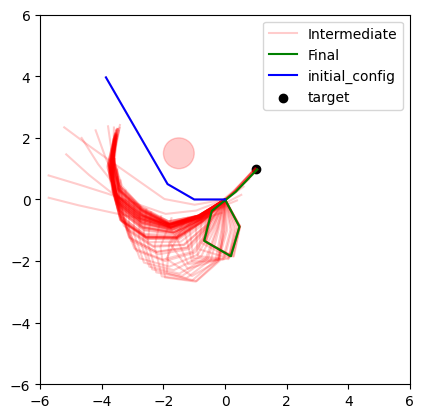

Now you will use gradient descent to iteratively update the configuration of a planar arm (meaning its joint angles) until the tip of the arm reaches a desired position. You will estimate the update direction empirically, by sampling nearby configurations and using them to determine the local gradient direction.
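One way to estimate the gradient empirically, sketched under several assumptions: a hypothetical two-link arm with unit link lengths and relative joint angles (not the utilities in `planar_arm.py`), a squared-distance loss between the tip and the goal, and a least-squares fit of the gradient to central differences measured at randomly sampled nearby configurations. All function names and constants here are illustrative.

```python
import numpy as np

LINK_LENGTHS = np.array([1.0, 1.0])  # hypothetical two-link arm

def forward_kinematics(q):
    """Tip position of a planar arm; each joint angle in q (radians) is
    measured relative to the previous link."""
    angles = np.cumsum(q)
    return np.array([np.sum(LINK_LENGTHS * np.cos(angles)),
                     np.sum(LINK_LENGTHS * np.sin(angles))])

def tip_loss(q, goal):
    # Squared distance from the arm tip to the goal position.
    return np.sum((forward_kinematics(q) - goal) ** 2)

def estimate_gradient(loss_fn, q, num_samples=20, eps=1e-3, rng=None):
    """Sample small perturbations d around q and fit g by least squares so
    that (loss(q + d) - loss(q - d)) / 2 ~= d . g for every sample."""
    rng = np.random.default_rng() if rng is None else rng
    D = rng.normal(scale=eps, size=(num_samples, q.size))
    diffs = np.array([(loss_fn(q + d) - loss_fn(q - d)) / 2.0 for d in D])
    return np.linalg.lstsq(D, diffs, rcond=None)[0]

def solve_ik(q0, goal, learning_rate=0.1, num_iters=500, seed=0):
    """Gradient descent on the joint angles using the sampled gradient."""
    rng = np.random.default_rng(seed)
    q = np.array(q0, dtype=float)

    def loss_fn(candidate):
        return tip_loss(candidate, goal)

    for _ in range(num_iters):
        q = q - learning_rate * estimate_gradient(loss_fn, q, rng=rng)
    return q

goal = np.array([1.0, 1.0])  # reachable: closer than the total arm length 2
q = solve_ik([0.3, 0.3], goal)
print(q, forward_kinematics(q))
```

Evaluating both `q + d` and `q - d` cancels the second-order term of the Taylor expansion, so the estimate stays accurate near the goal, where the true gradient becomes small.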

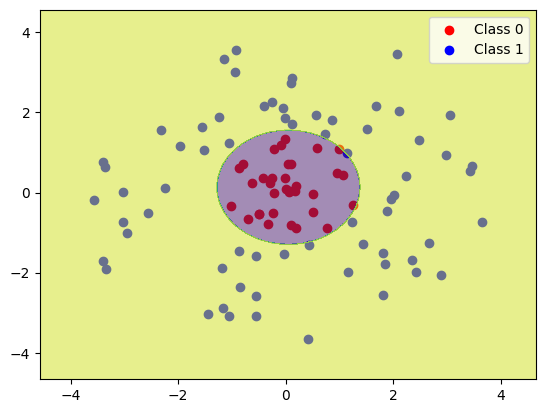

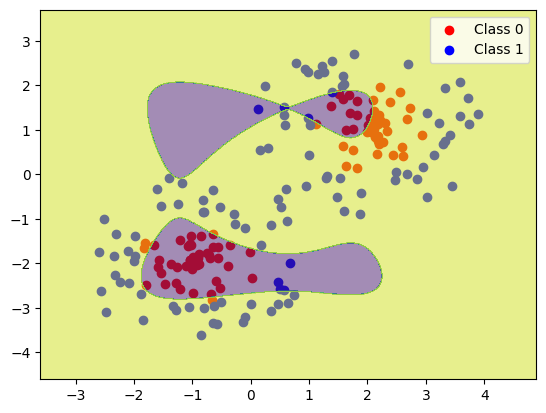

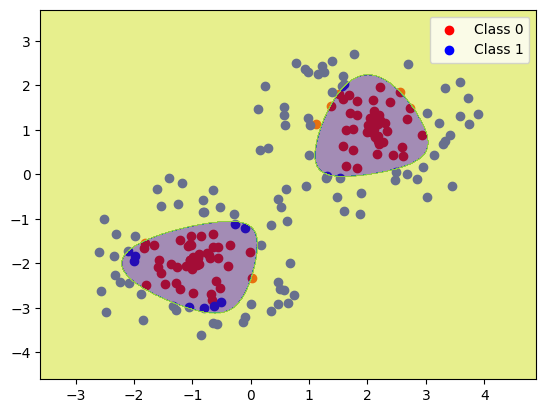

Finally, you will do classification using gradient descent. This means implementing a new gradient for a new kind of model: logistic instead of linear. You will fit this model to data we provide (instead of data you create yourself). Your current feature transforms (linear and polynomial) will be insufficient for fitting the third dataset we provide, so you will have to come up with your own feature transform.
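A minimal logistic regression sketch, assuming NumPy, labels in {0, 1}, and the mean binary cross-entropy loss, whose gradient is `(1/n) X^T (sigmoid(Xw) - y)`. It trains on synthetic, linearly separable points rather than the provided `data/` files, and it does not show the custom feature transform the third dataset requires; all names and hyperparameters are illustrative.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def logistic_gradient(X, y, w):
    """Gradient of the mean binary cross-entropy for labels y in {0, 1}."""
    return X.T @ (sigmoid(X @ w) - y) / X.shape[0]

def gradient_descent(grad_fn, w0, learning_rate=0.5, num_iters=2000):
    w = np.array(w0, dtype=float)
    for _ in range(num_iters):
        w = w - learning_rate * grad_fn(w)
    return w

# Synthetic 2-D points (not the provided data): label 1 when x0 + x1 > 0.
rng = np.random.default_rng(0)
points = rng.normal(size=(200, 2))
labels = (points[:, 0] + points[:, 1] > 0).astype(float)
X = np.column_stack([points, np.ones(len(points))])  # linear features + bias
w = gradient_descent(lambda params: logistic_gradient(X, labels, params),
                     np.zeros(3))
accuracy = np.mean((sigmoid(X @ w) > 0.5) == labels)
print(accuracy)
```

Only the gradient function changes compared with linear regression; the gradient descent loop itself is the same.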