
CS440/ECE448 Fall 2023

Assignment 10: Neural Nets and PyTorch

Due date: Monday November 13th, 11:59pm

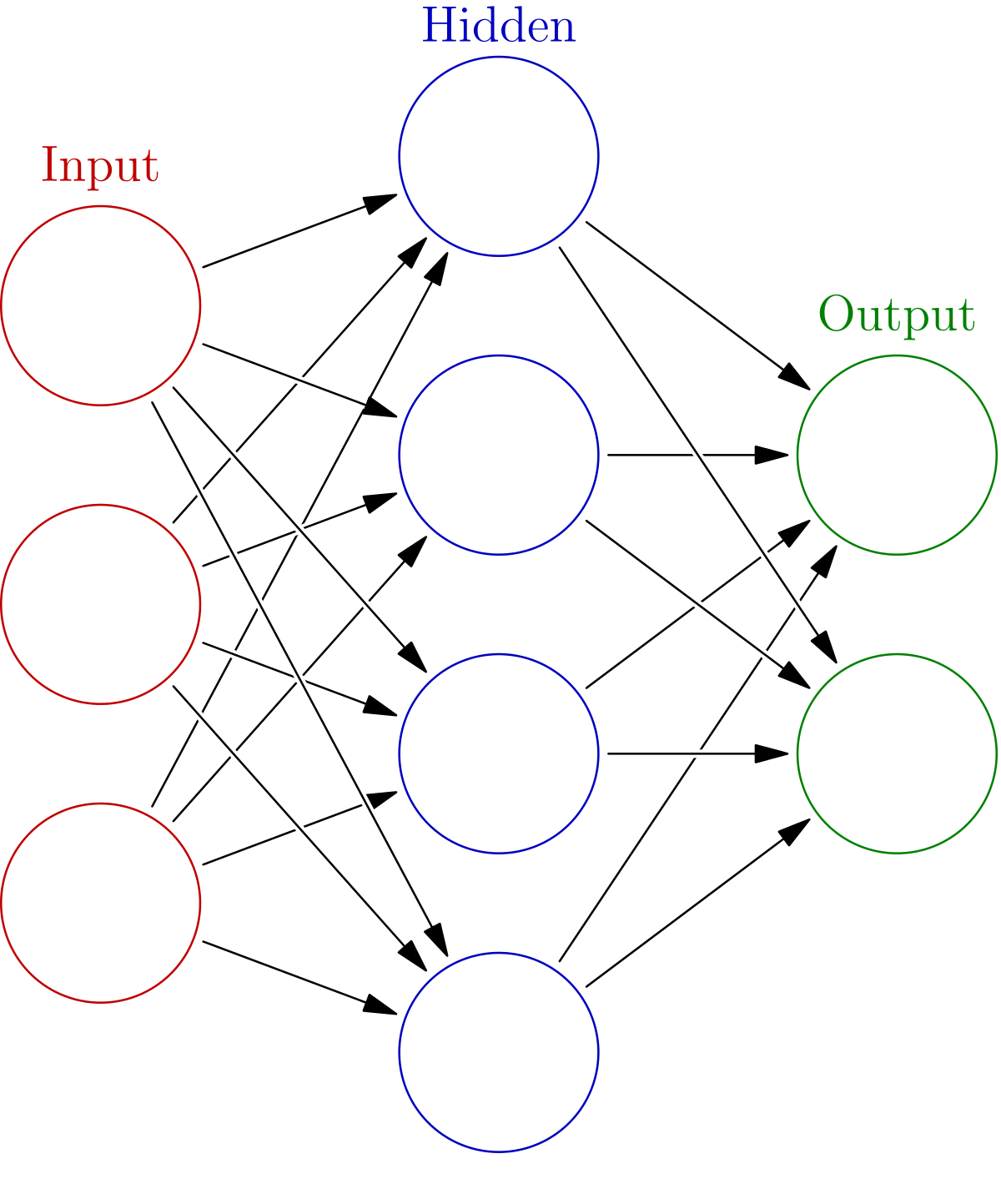

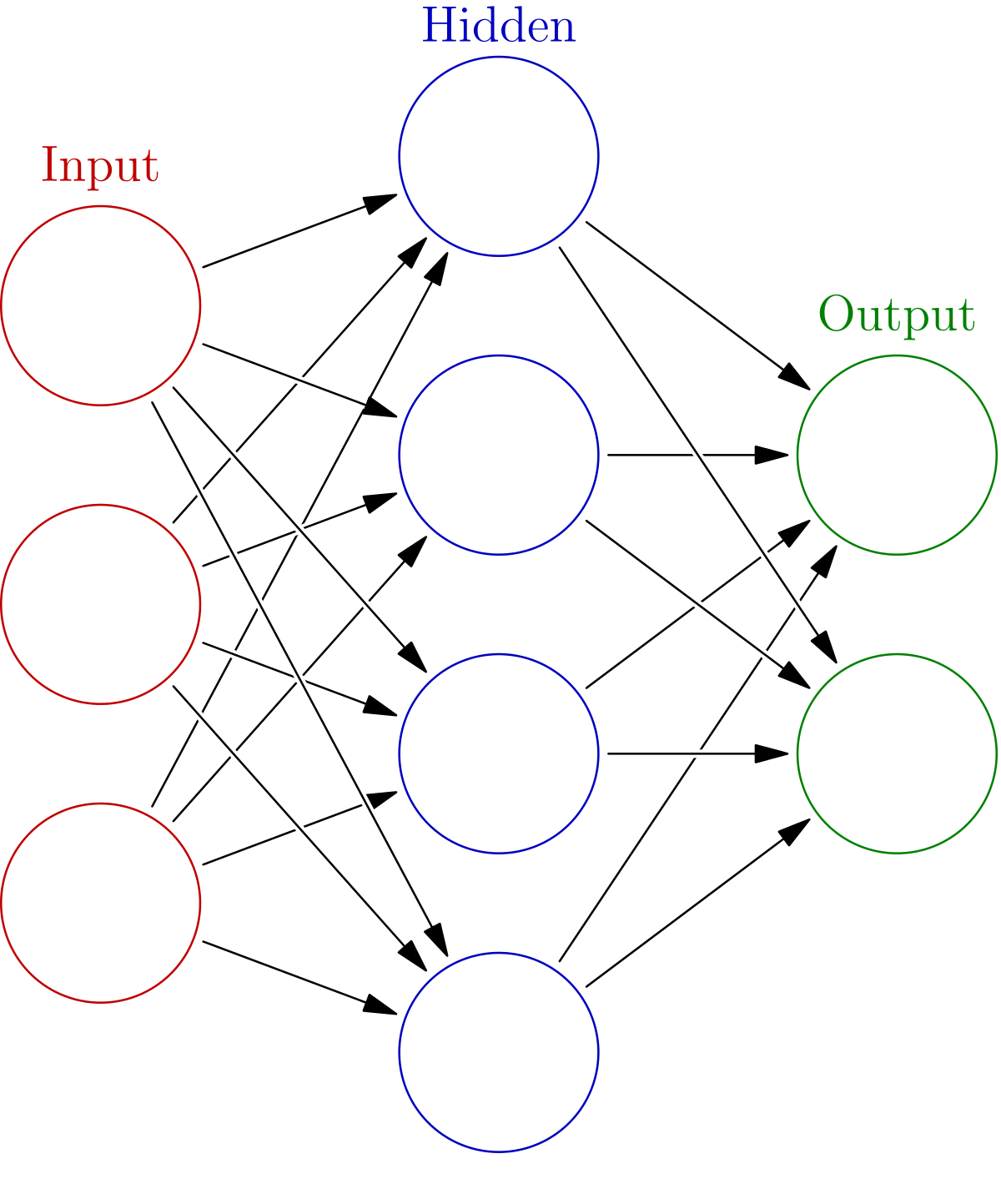

The goal of this assignment is to employ neural networks, nonlinear and multi-layer extensions of the linear perceptron, to classify images into four categories: ship, automobile, dog, or frog. That is, your ultimate goal is to create a classifier that can tell what each picture depicts.

You will improve your network from MP9 using modern techniques such as changing the activation function, changing the network architecture, or changing other initialization details.

You will be using the PyTorch and NumPy libraries to implement these models. The PyTorch library will do most of the heavy lifting for you, but it is still up to you to implement the right high-level instructions to train the model.
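The high-level training instructions usually follow one pattern: zero the gradients, run a forward pass, compute the loss, backpropagate, and step the optimizer. The sketch below is a minimal mini-batch loop under assumed choices (cross-entropy loss, SGD with momentum, and a hypothetical helper named `train` with made-up defaults). It is not the interface the starter code expects, so adapt it to whatever training function the template defines.

```python
import torch
import torch.nn as nn


def train(model: nn.Module, x: torch.Tensor, y: torch.Tensor,
          epochs: int = 10, batch_size: int = 100, lr: float = 0.01) -> list:
    """Hypothetical helper: mini-batch SGD with cross-entropy loss.

    Returns the mean training loss of each epoch.
    """
    loss_fn = nn.CrossEntropyLoss()
    optimizer = torch.optim.SGD(model.parameters(), lr=lr, momentum=0.9)
    losses = []
    for _ in range(epochs):
        perm = torch.randperm(x.shape[0])  # reshuffle the examples every epoch
        total = 0.0
        for start in range(0, x.shape[0], batch_size):
            idx = perm[start:start + batch_size]
            optimizer.zero_grad()                  # clear gradients from the last step
            loss = loss_fn(model(x[idx]), y[idx])  # forward pass + loss
            loss.backward()                        # backpropagate
            optimizer.step()                       # update the weights
            total += loss.item() * idx.shape[0]
        losses.append(total / x.shape[0])
    return losses
```

For example, `train(nn.Linear(2883, 4), train_x, train_y)` would fit a purely linear classifier, assuming `train_x` is a float tensor of shape (N, 2883) and `train_y` holds integer class labels 0 to 3.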

You will need to consult the PyTorch documentation for implementation details. We strongly recommend the PyTorch tutorials, specifically the "Training a Classifier" section, since it walks you through building a classifier very similar to the one in this assignment. Other guides are also available online.

The template package contains your starter code and also your training and development datasets (packed into a single file).

In the code package, you should see these files:

Run `python3 mp10.py -h` in your terminal to find out more about how to run the program.

There is one optional (ungraded) submission part in which you can compete on a leaderboard with your best network, using neuralnet_leaderboard.py. The binary files net.model and param_dict.state are generated when you run `mp10.py --part 3` and are used to load your best model submission, whose architecture must be defined in neuralnet_leaderboard.py. This is the model used to compute your leaderboard score. The leaderboard is sorted by performance on the hidden test set, which correlates well with dev set performance. The leaderboard is only for bragging rights and is worth no points.

You will need to import torch and numpy. Otherwise, use only modules from the Python standard library. Do not use torchvision.

The autograder doesn't have a GPU, so it will fail if you attempt to use CUDA.

The dataset consists of 3000 31x31 colored (RGB) images (a modified subset of the CIFAR-10 dataset, provided by Alex Krizhevsky). This set is split for you into 2250 training examples (which are a mostly balanced sample of cars, boats, frogs and dogs) and 750 development examples.
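Flattening an image into a single row gives one value per pixel per color channel, which is where the input width of 2883 used by the autograder comes from; the training and development splits together make up the full dataset:

$$
31 \times 31 \times 3 = 2883, \qquad 2250 + 750 = 3000
$$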

The function load_dataset() in reader.py will unpack the dataset file, returning images and labels for the training and development sets. Each of these items is a Tensor.

In this part, you will try to improve your performance by employing modern machine learning techniques. These include, but are not limited to, the following:

Try to make your classification accuracy as high as possible, subject to the constraint that your model uses at most 500,000 total parameters. That is, if you count every floating-point value in all of your weights, including bias terms (i.e., as returned by this PyTorch utility function), the total must not exceed 500,000.
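To check the budget, you can add up the number of scalar values in every weight and bias tensor of your model. A minimal sketch, assuming the limit is counted over `model.parameters()`; the helper name `count_parameters` is hypothetical:

```python
import torch.nn as nn


def count_parameters(model: nn.Module) -> int:
    # numel() is the number of scalar values in each weight or bias tensor
    return sum(p.numel() for p in model.parameters())


# Hypothetical two-layer network, used only to illustrate the count
example = nn.Sequential(nn.Linear(2883, 128), nn.ReLU(), nn.Linear(128, 4))
print(count_parameters(example))
```

For this example network the count is 2883 × 128 + 128 + 128 × 4 + 4 = 369,668, comfortably under the limit.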

You should be able to get an accuracy of at least 0.79 on the development set.

If you're using a convolutional net, you need to reshape your data in the forward() method, not the fit() method. The autograder will call your forward function on data with shape (N, 2883).

That's probably not what your CNN is expecting. It's very helpful to print out the shape of key objects before and after each layer when debugging dimension issues (the .view() method of tensors is very useful here).
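Below is a sketch of a small CNN that does the reshaping inside `forward()`, with the shape after each layer noted in comments. It assumes each flat row of 2883 values is laid out channel-first as (3, 31, 31); check that against how the data is actually stored before relying on it. The class name `SmallCNN` and the layer sizes are illustrative, not a recommended architecture.

```python
import torch
import torch.nn as nn


class SmallCNN(nn.Module):
    """Hypothetical CNN; assumes each flat row is laid out as (3, 31, 31)."""

    def __init__(self, num_classes: int = 4):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 32, kernel_size=3, padding=1),   # -> (N, 32, 31, 31)
            nn.LeakyReLU(),
            nn.MaxPool2d(2),                              # -> (N, 32, 15, 15)
            nn.Conv2d(32, 64, kernel_size=3, padding=1),  # -> (N, 64, 15, 15)
            nn.LeakyReLU(),
            nn.MaxPool2d(2),                              # -> (N, 64, 7, 7)
        )
        self.classifier = nn.Linear(64 * 7 * 7, num_classes)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = x.view(-1, 3, 31, 31)   # (N, 2883) -> (N, 3, 31, 31)
        x = self.features(x)
        x = torch.flatten(x, 1)     # (N, 64, 7, 7) -> (N, 3136)
        return self.classifier(x)   # -> (N, num_classes)
```

This particular network has 31,940 parameters, leaving plenty of room under the 500,000 limit for wider or deeper layers.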

Some of you may still be using a 32-bit environment. This may be fine; however, be aware that recent versions of PyTorch are optimized for 64-bit environments, which is what Gradescope uses.
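If you are unsure which kind of environment you have, the Python standard library can tell you whether the running interpreter is a 32-bit or 64-bit build. A small check:

```python
import struct
import sys

# Size of a C pointer in bits: 64 on a 64-bit Python build, 32 on a 32-bit one
pointer_bits = struct.calcsize("P") * 8
print(f"{pointer_bits}-bit Python (sys.maxsize > 2**32: {sys.maxsize > 2**32})")
```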

Make sure to use data standardization, as in MP9.
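A minimal standardization sketch, assuming per-feature statistics (the mean and standard deviation of each of the 2883 input values) computed on the training set only and then reused for the development data. MP9's exact scheme may differ, and the function name `standardize` is hypothetical.

```python
import torch


def standardize(train_x: torch.Tensor, dev_x: torch.Tensor, eps: float = 1e-8):
    # Statistics come from the training set only, so no dev data leaks into them
    mean = train_x.mean(dim=0, keepdim=True)
    std = train_x.std(dim=0, keepdim=True)
    # eps guards against division by zero for constant features
    return (train_x - mean) / (std + eps), (dev_x - mean) / (std + eps)
```

Whatever transform you apply during training must also be applied to any data you later classify; otherwise the network sees inputs on a different scale than it was trained on.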

For your own enjoyment, we have also provided an anonymous leaderboard for this MP. Even after you have full points on the MP, you may wish to keep improving your performance on the hidden test set by tuning your network further, training it for longer, using dropout, data augmentation, etc. For the leaderboard, you can submit the net.model and state_dict.state files created with your best trained model (after running `mp10.py --part 3`) alongside neuralnet_leaderboard.py (note that these need not implement the same thing as your graded code, since you may wish to do fancy things that would be too slow for the autograder). We will not train this specific network on the autograder, so if you wish to go wild with augmentations, costly transformations, or more complex architectures, you're welcome to do so. Just do not exceed the 500k parameter limit, or your entry will be invalid. Also, for the sake of fairness, please do not use additional external data (e.g., using a ResNet backbone trained on ImageNet would be very unfair and counterproductive).
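Since torchvision is off-limits, simple augmentations can be written directly with tensor operations. The sketch below randomly mirrors images left to right, a common augmentation for photos of objects like ships, cars, dogs and frogs. It assumes each flat row is laid out channel-first as (3, 31, 31), and `random_hflip` is a hypothetical helper, not part of the starter code.

```python
import torch


def random_hflip(x: torch.Tensor, p: float = 0.5) -> torch.Tensor:
    """Hypothetical helper: mirror each image left-right with probability p.

    Assumes flat rows of shape (N, 2883) laid out as (3, 31, 31).
    """
    imgs = x.view(-1, 3, 31, 31)
    flip = torch.rand(imgs.shape[0]) < p                # choose images to flip
    out = imgs.clone()
    out[flip] = torch.flip(imgs[flip], dims=[3])        # reverse the width axis
    return out.view(x.shape[0], -1)                     # back to (N, 2883)
```

You would typically apply it to each training mini-batch on the fly, and never to the development or test data.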