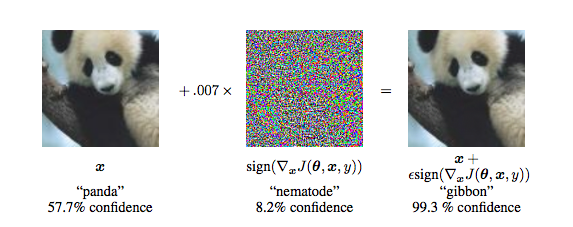

Current training procedures for neural nets still leave them excessively sensitive to small changes in the input data. It is therefore possible to construct patterns that look much like random noise but push the network's output values towards or away from a particular classification. Adding such a pattern to an input image creates an "adversarial example" that looks almost identical to the original to a human but gets a radically different classification from the network. For example, the following shows the creation of an image that looks like a panda but will be misrecognized as a gibbon.

from Goodfellow et al
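The panda example is usually associated with the fast gradient sign method: nudge every input value by a small amount in whichever direction increases the classifier's loss. The following is a minimal sketch of that idea, assuming a toy logistic-regression classifier with made-up random weights in place of a real image network. The dimension `d`, the step size `epsilon`, and all variable names are illustrative choices, not taken from the paper.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm(x, y, w, b, epsilon):
    """Move every input coordinate by epsilon in the direction that raises the loss."""
    p = sigmoid(w @ x + b)          # model's probability for class 1
    grad_x = (p - y) * w            # gradient of the cross-entropy loss w.r.t. x
    return x + epsilon * np.sign(grad_x)

rng = np.random.default_rng(0)
d = 1000                            # number of "pixels" (hypothetical)
w = rng.normal(size=d)              # made-up weights standing in for a trained model
b = 0.0
x0 = rng.normal(size=d)
# Shift x0 along w so the clean input has logit 3 (confidently class 1).
x = x0 + ((3.0 - w @ x0) / (w @ w)) * w
y = 1.0                             # true label of the clean input

epsilon = 0.01                      # maximum change allowed per coordinate
x_adv = fgsm(x, y, w, b, epsilon)

p_clean = sigmoid(w @ x + b)
p_adv = sigmoid(w @ x_adv + b)
print(f"clean P(class 1)       = {p_clean:.3f}")
print(f"adversarial P(class 1) = {p_adv:.3f}")
print(f"largest per-coordinate change = {np.max(np.abs(x_adv - x)):.3f}")
```

Each coordinate moves by at most `epsilon`, but the logit shifts by `epsilon` times the sum of the absolute weights, so the total effect grows with the number of input dimensions. This is one common explanation for why high-dimensional inputs such as images are so easy to attack.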

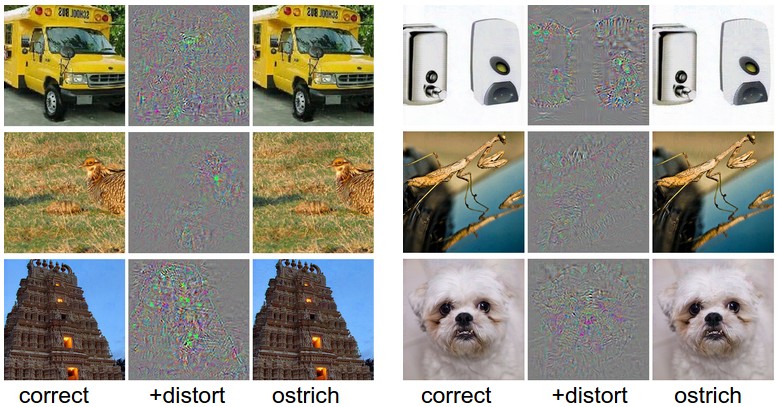

The pictures below show patterns of small distortions being used to persuade the network that images of six different types of objects are all ostriches.

from Szegedy et al.

These pictures come from Andrej Karpathy's blog, which has more detailed discussion.

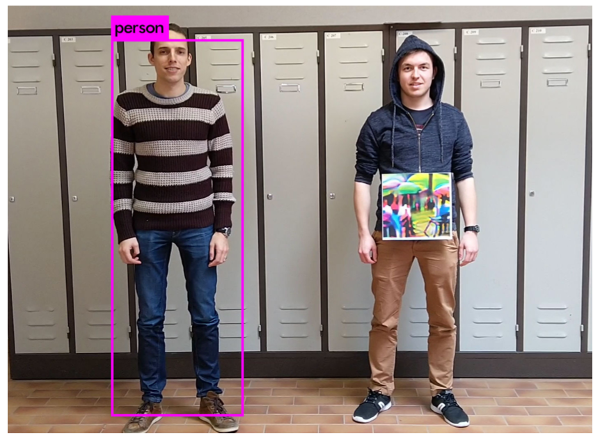

Clever patterns placed on an object can cause it to disappear from the recognizer's output, e.g. only the left-hand person is recognized in the picture below.

from Thys, Van Ranst, Goedeme 2019

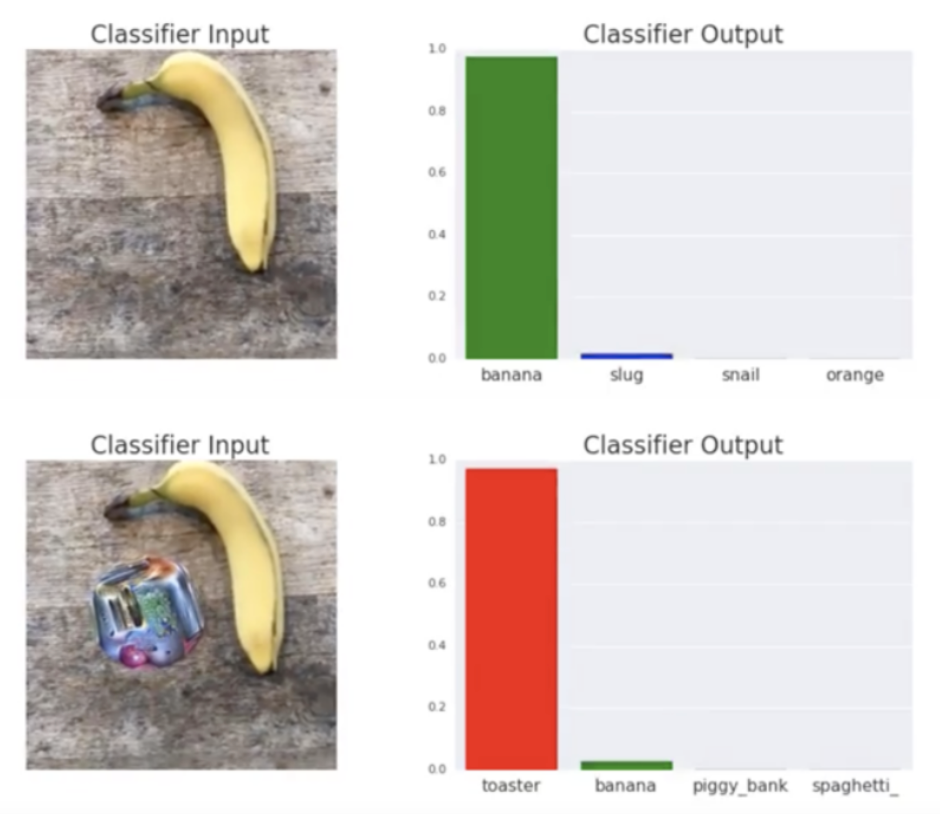

Disturbingly, the classifier output can be changed by adding a disruptive pattern near the target object. In the example below, a banana is recognized as a toaster.

from Brown, Mane, Roy, Abadi, Gilmer, 2018

And here are a couple more examples of fooling neural net recognizers:

In the words of one researcher (David Forsyth), we need to figure out how to "make this nonsense stop" without sacrificing accuracy or speed. This is currently an active area of research.

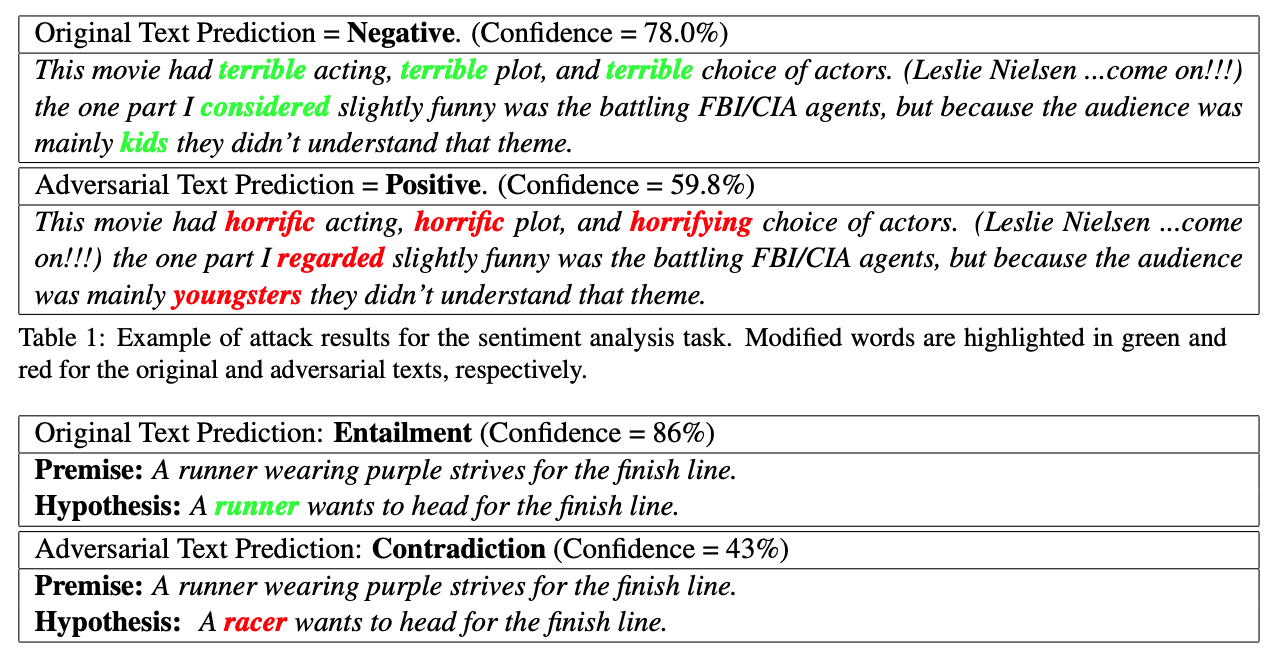

Similar adversarial examples can be created purely with text data. In the examples below, the output of a natural language classifier can be changed by replacing words with synonyms. The top example is from a sentiment analysis task, i.e. was this review positive or negative? The bottom example is from a textual entailment task, in which the algorithm is asked to decide how the two sentences are logically related. That is, does one imply the other? Does one contradict the other?

from Alzantot et al 2018
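To make the synonym-replacement idea concrete, here is a toy sketch. It assumes a bag-of-words sentiment scorer with invented weights and a hand-written synonym table, and it searches greedily for the single swap that moves the score furthest towards the opposite label. This greedy search is a simplification chosen for brevity, not the search procedure used by Alzantot et al., and every name and number in it is hypothetical.

```python
# Made-up word weights for a toy sentiment scorer (positive > 0, negative < 0).
WEIGHTS = {"good": 1.0, "decent": 0.3, "slow": -0.8, "sluggish": -1.2}
# Hand-written synonym table (hypothetical).
SYNONYMS = {"good": ["decent"], "slow": ["sluggish"]}

def score(words):
    return sum(WEIGHTS.get(w, 0.0) for w in words)

def classify(words):
    return "positive" if score(words) > 0 else "negative"

def synonym_attack(words, max_swaps=3):
    """Greedily swap words for synonyms until the predicted label changes."""
    words = list(words)
    original_label = classify(words)
    # Push the score down if the input is positive, up if it is negative.
    direction = -1.0 if original_label == "positive" else 1.0
    for _ in range(max_swaps):
        if classify(words) != original_label:
            break
        best_gain, best_candidate = 0.0, None
        for i, w in enumerate(words):
            for s in SYNONYMS.get(w, []):
                candidate = words[:i] + [s] + words[i + 1:]
                gain = direction * (score(candidate) - score(words))
                if gain > best_gain:
                    best_gain, best_candidate = gain, candidate
        if best_candidate is None:   # no swap helps any more
            break
        words = best_candidate
    return words

original = "the film was good but slow".split()
adversarial = synonym_attack(original)
print(" ".join(original), "->", classify(original))
print(" ".join(adversarial), "->", classify(adversarial))
```

A human reads the two sentences as near-paraphrases, but the toy scorer flips its label after a single word is replaced.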

A generative adversarial network (GAN) consists of two neural nets that jointly learn a model of input data. The classifier tries to distinguish real training images from similar fake images. The adversary tries to produce convincing fake images. These networks can produce photorealistic pictures that can be stunningly good (e.g. the dog pictures below) but fail in strange ways (e.g. some of the frogs below).

pictures from a New Scientist article on the Andrew Brock et al research paper
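The two-network setup described above is commonly written as a minimax game. In the standard formulation, the classifier (usually called the discriminator, $D$) outputs the probability that its input is a real training image, and the adversary (usually called the generator, $G$) maps a random noise vector $z$ to a fake image. The symbols $D$, $G$, $p_{\text{data}}$ and $p_z$ are notation introduced here and do not appear elsewhere in these notes.

$$
\min_G \max_D \;\; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] \;+\; \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The first term rewards $D$ for assigning high probability to real images. The second term rewards $D$ for assigning low probability to fakes, while $G$ is trained to make that second term as small as possible, i.e. to produce fakes that $D$ accepts as real.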

Good outputs are common. However, large enough collections contain some catastrophically bad outputs, such as the frankencat below right. The neural nets seem to be very good at reproducing the texture and local features (e.g. eyes). But they are missing some type of high-level knowledge that tells people that, for example, dogs have four legs.

from medium.com, generated using the tool https://thiscatdoesnotexist.com/

A recent paper exploited this lack of anatomical understanding to detect GAN-generated faces using the fact that the pupils in the eyes had irregular shapes.

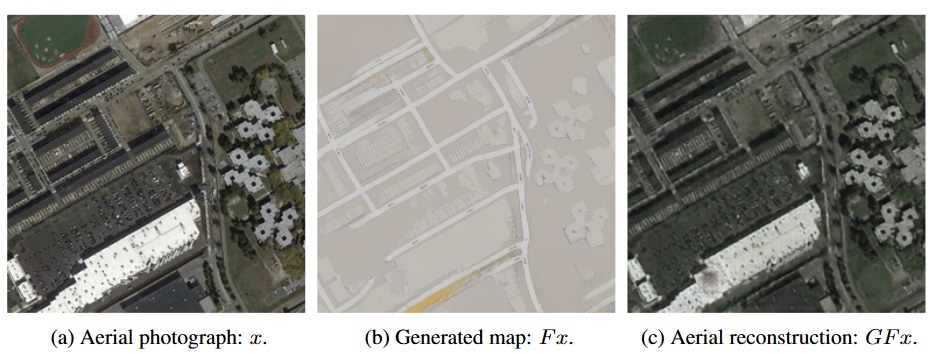

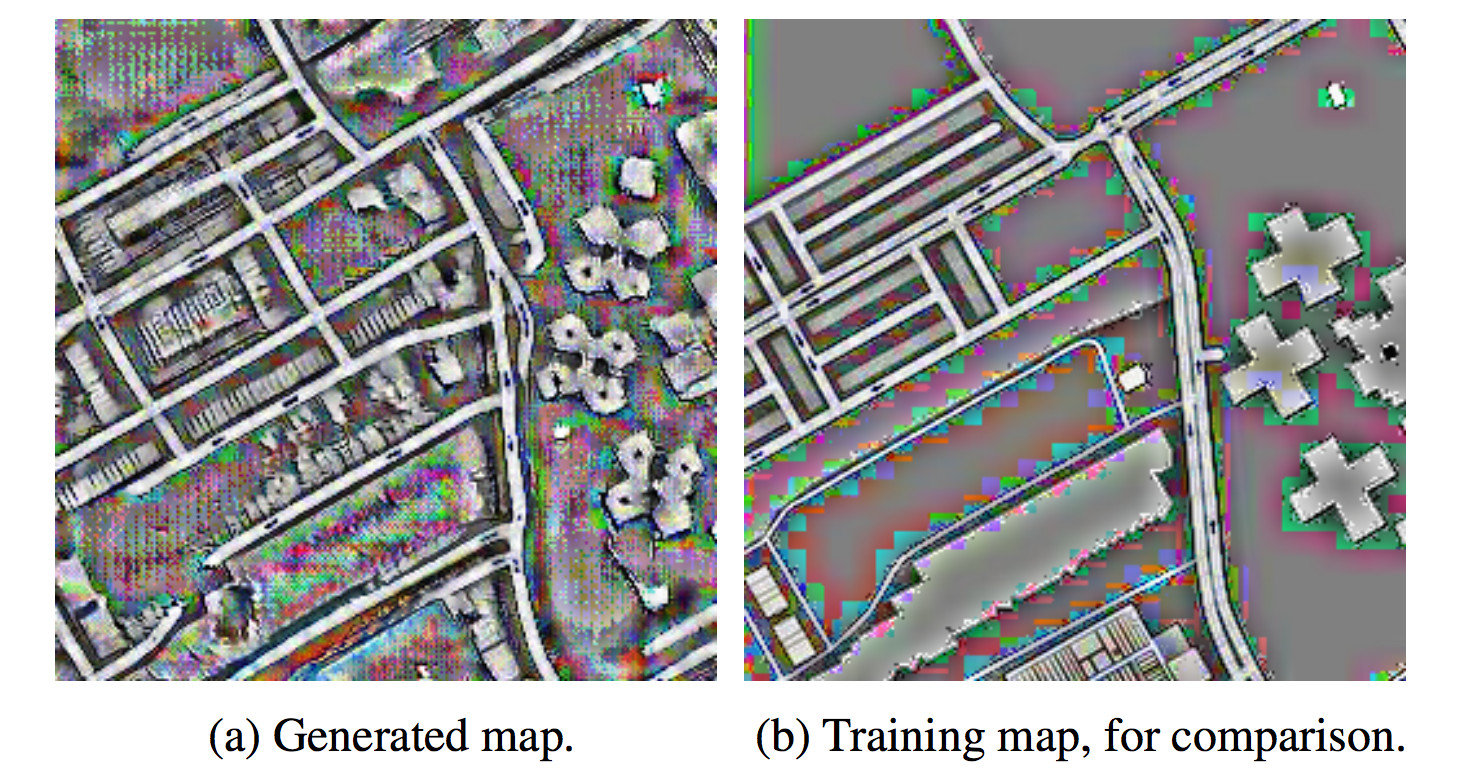

Another fun thing about GANs is that they can learn to hide information in the fine details of images, exploiting the same sensitivity to detail that enables adversarial examples. This GAN was supposedly trained to convert maps into aerial photographs by doing a circular task. One half of the GAN translates aerial photographs into maps and the other half translates maps into aerial photographs. The output results below are too good to be true:

The map-producing half of the GAN is hiding information in the fine details of the maps it produces. The other half of the GAN is using this information to populate the aerial photograph with details not present in the training version of the map. Effectively, the two halves have set up their own private communication channel, invisible to the researchers (until the researchers got suspicious about the quality of the output images).

More details are in this Techcrunch summary of Chu, Zhmoginov, Sandler, CycleGAN, NIPS 2017.
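To see how much information can ride along in details too small for a person to notice, here is a hand-written analogy using least-significant-bit steganography. This is not the encoding the GAN learned. It is a deliberately simple stand-in, assuming 8-bit grayscale images, in which random arrays play the roles of the generated map and the hidden photo detail.

```python
import numpy as np

def hide(cover, secret_bits):
    """Overwrite the lowest bit of each 8-bit pixel with one secret bit."""
    return (cover & 0xFE) | secret_bits

def recover(stego):
    """Read the hidden bits back out of the lowest bit of each pixel."""
    return stego & 1

rng = np.random.default_rng(0)
# Stand-in for the map image produced by one half of the GAN.
cover = rng.integers(0, 256, size=(8, 8), dtype=np.uint8)
# Stand-in for photo detail that the map should not be able to carry.
secret = rng.integers(0, 2, size=(8, 8), dtype=np.uint8)

stego = hide(cover, secret)
max_change = int(np.max(np.abs(stego.astype(int) - cover.astype(int))))

print("largest pixel change:", max_change)
print("secret recovered exactly:", np.array_equal(recover(stego), secret))
```

No pixel changes by more than one intensity level out of 255, yet the receiving side recovers one hidden bit per pixel. A learned encoder and decoder pair can arrive at a similar low-amplitude channel without being told to.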