Core reasoning techniques

Application areas

Mathematical applications have largely become a field of their own, separate from AI. The same will probably happen soon to game playing. However, both applications played a large role in early AI.

We're going to see popular current techniques (e.g. neural nets) but also selected historical techniques that may still be relevant.

Viewed in very general terms, the goal of AI is to build intelligent agents. An agent has three basic components:

Some agents are extremely simple, e.g. a Roomba:

Roomba (from iRobot)

We often imagine intelligent agents that have a sophisticated physical presence, like a human or animal. Current robots are starting to move like people/animals but have only modest high-level planning ability.

Boston Dynamics robot (video)

Other agents may live mostly or entirely inside the computer, communicating via text or simple media (e.g. voice commands). This would be true for a chess playing program or an intelligent assistant to book airplane tickets. A well-known fictional example is the HAL 9000 system from the movie "2001: A Space Odyssey" which appears mostly as a voice plus a camera lens (below).

(from Wikipedia) (dialog) (video)

When designing an agent, we need to consider

Many AI systems work well only because they operate in limited environments. A famous sheep-shearing robot from the 1980's depended on the sheep being tied down to limit their motion. Face identification systems at security checkpoints may depend on people consistently looking straight ahead when herded through the gates.

Simple agents like the Roomba may have very direct connections between sensing and action, with very fast response and almost nothing that could qualify as intelligence. Smarter agents may be able to spend a lot of time thinking, e.g. when playing chess. They may maintain an explicit model of the world and of the alternative actions they are choosing between.
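To make the contrast concrete, here is a minimal Python sketch of both kinds of agent, assuming a hypothetical Roomba-like vacuum on a one-dimensional strip of cells. The `Percept` fields, the action names, and both agents are invented for illustration; this is not how iRobot's actual controller works. `reflex_agent` maps each percept straight to an action, while `ModelBasedAgent` keeps a toy world model (the set of cells it believes are clean) and chooses among alternative moves using that model.

```python
from dataclasses import dataclass, field


@dataclass
class Percept:
    """Hypothetical sensor readings for a Roomba-like vacuum on a 1-D strip of cells."""
    position: int   # which cell the robot is in
    dirty: bool     # dirt sensor: is the current cell dirty?
    bumped: bool    # bump sensor: did the robot just hit an obstacle?


def reflex_agent(p: Percept) -> str:
    """Simple reflex agent: the current percept maps straight to an action.
    No memory and no world model."""
    if p.dirty:
        return "suck"
    if p.bumped:
        return "turn"
    return "forward"


@dataclass
class ModelBasedAgent:
    """Toy model-based agent: remembers which cells it believes are clean and
    chooses between alternative moves using that model."""
    width: int
    known_clean: set = field(default_factory=set)

    def act(self, p: Percept) -> str:
        if p.dirty:
            return "suck"
        self.known_clean.add(p.position)  # update the world model
        # Alternatives: every cell not yet known to be clean
        targets = [c for c in range(self.width) if c not in self.known_clean]
        if not targets:
            return "stop"  # the model says the whole strip is clean
        # Choose the nearest remaining target (ties go to the lower-numbered cell)
        nearest = min(targets, key=lambda c: abs(c - p.position))
        return "left" if nearest < p.position else "right"


if __name__ == "__main__":
    print(reflex_agent(Percept(position=0, dirty=False, bumped=True)))  # turn
    agent = ModelBasedAgent(width=3)
    for percept in [Percept(1, False, False), Percept(0, False, False),
                    Percept(2, True, False), Percept(2, False, False)]:
        print(agent.act(percept))  # left, right, suck, stop
```

The model-based agent pays for its better choices with memory and a little computation; the reflex agent responds instantly but has no way to know when it is done.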

AI researchers often plan to build a fully-featured end-to-end system, e.g. a robot child. However, most research projects and published papers consider only one small component of the task, e.g.

Over the decades, AI algorithms have gradually improved. Two main reasons:

The second has been the most important. Some approaches popular today were originally proposed in the 1940's but could not be made to work without sufficient computing power.

AI has cycled between two general approaches to algorithm design:

Most likely neither extreme position is correct.

AI results have consistently been overly hyped, sometimes creating major tension with grant agencies.

No clear notion of a computer (though interesting philosophy)

First computers (Atanasoff 1941, ENIAC 1943-45), able to do almost nothing.

ENIAC, mid 1940's (from Wikipedia)

On-paper models that are insightful but can't be implemented. Some of these foreshadow approaches that work now.

Computers with a functioning CPU but (by today's standards) very slow, with absurdly little memory. E.g. the IBM 1130 (1965-72) came with at most 32K 16-bit words (64KB) of main memory. The VAX 11/780 had many terminals sharing one CPU.

IBM 1130, late 1960's (from Wikipedia)

Graphics mostly used pen plotters, or perhaps graphics paper that had to be stored in a fridge. Mailers avoided linux gateways because they were unreliable. Prototypes appeared of great tools such as the mouse, the GUI, Unix, and refresh-screen graphics.

AI algorithms are very stripped down and discrete/symbolic. Chomsky's Syntactic Structures (1957) proposed very tight constraints on linguistic models in an attempt to make them learnable from tiny datasets.

Computers now look like tower PC's, eventually also laptops. Memory and disk space are still tight. Horrible peripherals like binary (black/white) screens and monochrome surveillance cameras. HTTP servers and internet browsers appear in the 1990's:

Lisp Machine, 1980's (from Wikipedia)

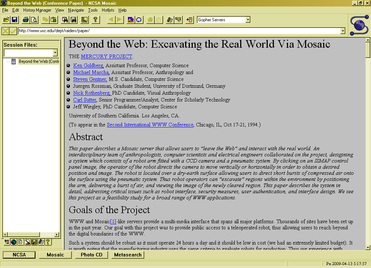

NCSA Mosaic, 1990's (from Wikipedia)

AI starts to use more big-data algorithms, especially at sites with strong resources. First LDC (Linguistic Data Consortium) datasets, 1993: Switchboard 1, TIMIT. Fred Jelinek starts to get speech recognition working usefully using statistical n-gram models. Linguistic theories were still very discrete and logic-based. Jelinek famously said in the mid/late 1980's: "Every time I fire a linguist, the performance of our speech recognition system goes up."
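The core idea of an n-gram model is easy to sketch. Below is a minimal bigram (n = 2) model in Python using plain maximum-likelihood counts; it is a generic textbook version, not Jelinek's IBM system, which used far more data plus smoothing. The toy corpus and the function names `train_bigram`, `bigram_prob`, and `sentence_prob` are hypothetical. A speech recognizer can use such a model to prefer the more plausible of two acoustically similar transcriptions.

```python
from collections import Counter, defaultdict


def train_bigram(sentences):
    """Count word pairs, padding each sentence with <s> and </s> boundary markers."""
    counts = defaultdict(Counter)
    for sent in sentences:
        tokens = ["<s>"] + sent.split() + ["</s>"]
        for prev, word in zip(tokens, tokens[1:]):
            counts[prev][word] += 1
    return counts


def bigram_prob(counts, prev, word):
    """Maximum-likelihood estimate P(word | prev) = c(prev, word) / c(prev, *)."""
    total = sum(counts[prev].values())
    return counts[prev][word] / total if total else 0.0


def sentence_prob(counts, sent):
    """Probability of a whole sentence: the product of its bigram probabilities."""
    tokens = ["<s>"] + sent.split() + ["</s>"]
    p = 1.0
    for prev, word in zip(tokens, tokens[1:]):
        p *= bigram_prob(counts, prev, word)
    return p


if __name__ == "__main__":
    # Hypothetical toy training data
    corpus = ["recognize speech", "recognize speech",
              "recognize speech well", "wreck a nice beach"]
    model = train_bigram(corpus)
    # Two acoustically similar candidates; the model prefers the more common one
    print(sentence_prob(model, "recognize speech") >
          sentence_prob(model, "wreck a nice beach"))  # True
    print(sentence_prob(model, "speech recognize"))    # 0.0 (unseen bigrams)
```

Any bigram never seen in training gets probability 0, which zeroes out the whole sentence; this is why practical n-gram systems add smoothing.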

Modern computers and internet.

Algorithms achieve strong performance by gobbling vast quantities of training data, e.g. Google's algorithms use the entire web to simulate a toddler. Neural nets "learn to the test," i.e. they learn what's in the dataset but not always with the constraints that we humans assume implicitly. The result is strange, unexpected lossage ("adversarial examples"). There is speculation that learning might need to be guided by some structural constraints.
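One well-known way to construct adversarial examples is the fast gradient sign method (FGSM): nudge every input feature a tiny amount in whichever direction most hurts the classifier. The Python sketch below applies it to a toy linear classifier with random, hypothetical weights rather than a real neural net, and it is not meant to reproduce any particular system's failures. For a linear score the gradient with respect to the input is just the weight vector, so a change of at most `eps` per feature lowers the score by `eps` times the sum of the absolute weights, which grows with the number of features. The names `score`, `fgsm_linear`, and `demo` are illustrative.

```python
import random


def score(w, x, b):
    """Linear classifier: predict class 1 if the score is positive."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def sign(v):
    """Return -1, 0, or +1."""
    return (v > 0) - (v < 0)


def fgsm_linear(w, x, eps):
    """Fast gradient sign method for a linear score.

    d(score)/dx = w, so moving every feature by -eps * sign(w_i) lowers the
    score by eps * sum(|w_i|): tiny per-feature changes add up across many features.
    """
    return [xi - eps * sign(wi) for wi, xi in zip(w, x)]


def demo(n=1000, eps=0.01, seed=0):
    rng = random.Random(seed)
    w = [rng.uniform(-1, 1) for _ in range(n)]  # hypothetical "trained" weights
    x = [rng.uniform(0, 1) for _ in range(n)]   # hypothetical input, features in [0, 1]
    b = 1.0 - score(w, x, 0.0)                  # bias chosen so x scores +1 (class 1)
    x_adv = fgsm_linear(w, x, eps)
    biggest_change = max(abs(a - c) for a, c in zip(x_adv, x))
    return score(w, x, b), score(w, x_adv, b), biggest_change


if __name__ == "__main__":
    clean, adversarial, change = demo()
    print(f"clean score {clean:.2f}, adversarial score {adversarial:.2f}, "
          f"max per-feature change {change:.3f}")
```

With 1000 features and `eps = 0.01`, each feature moves by only 1% of its range, yet the summed effect is large enough to flip the predicted class. High-dimensional inputs such as images give an attacker many small levers to pull at once.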

("Three men drinking tea" by a Microsoft AI program, from New Scientist, 2019)

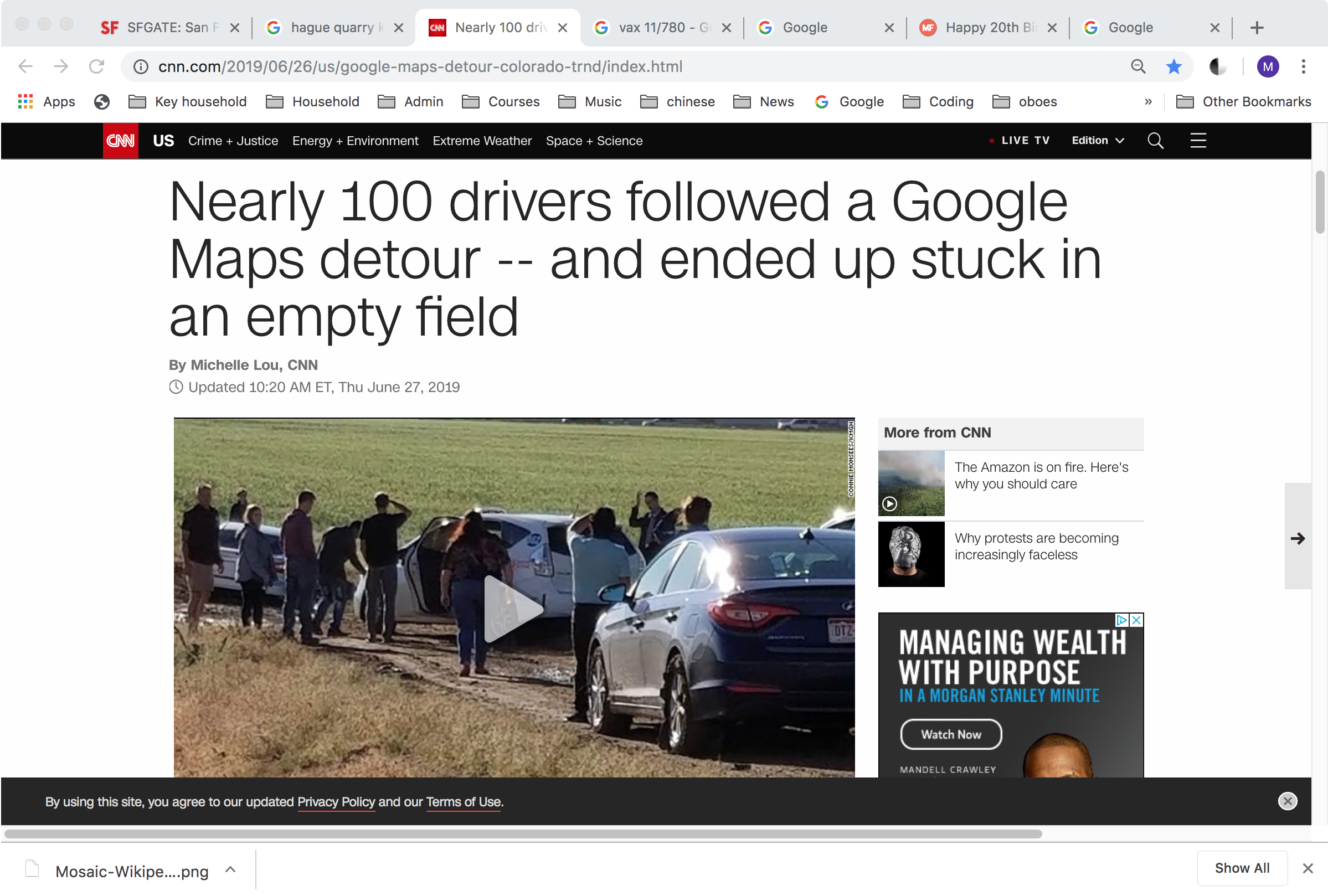

(from CNN, 2019)

Boston Dynamics robot falling down (from The Guardian, 2017)