The basic task is

Input: a collection of objects of several types

Output: correct type label for each object

In practice, this means

Input: a collection of objects of several types

Extract features from each object

Output: Use the features to label each object with its correct type

For example, we might wish to take a collection of documents (i.e. text files) and classify each document as

Let's look at the British vs. American classification task. Certain words are characteristic of one dialect or the other. For example

See this list of British and American words.

The word "boffin" differs from "egghead" because it can easily be modified to make words like "boffinry" and "astroboffin".

Dialects have special words for local foods, e.g. the South African English words "biltong," "walkie-talkie," "bunny chow."

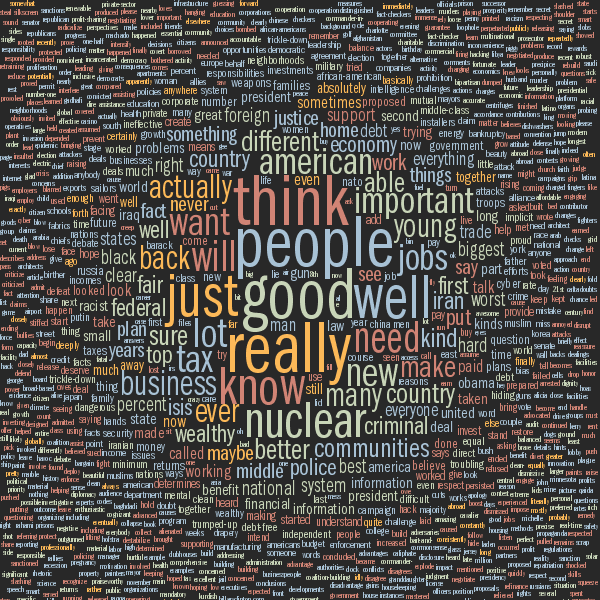

The word clouds at the top of this page show which words (content words only) were said most by Clinton (left) and Trump (right) in their first debate.

See more details at Martin Krzywinski's

Word Analysis of 2016 Presidential Debates

A bag of words model determines the class of a document based on how

frequently each individual word occurs. It ignores the order in which

words occur, which ones occur together, etc. So it will miss some

details, e.g. the difference between "poisonous" and "not poisonous,"

Suppose that cause C produces n observable effects \( E_1,...,E_n \).

Then our Naive Bayes model calculates the MAP estimate as

Let's map this idea over to our Bag of Words model of text classification.

Suppose our input document contains the string of words

\( W_1, ..., W_n \)

Notice that some of the words might be identical.

E.g the sentence "The big cat saw the small cat" contains

two pairs of repeated words.

For example, \(W_2\) is "cat" and

\(W_7\) is also "cat".

Our estimation process will consider the two copies of "cat" separately

Jargon:

So our example sentence has seven tokens drawn from five types.

To classify the document, naive Bayes compares these two values

To do this, we need to estimate two types of parameters

Naive Bayes works quite well in practice, especially when coupled with a number

of tricks that we'll see later.

The Naive Bayes spam classifier SpamCop, by Patrick Pantel and Dekang Lin (1998) got down to a

4.33% error rate using bigrams and some other tweaks.

Suppose we have n word types, then creating our bag of words model

requires estimating O(n) probabilities. This is much smaller

than the full conditional probability table which has \(2^n\)

entries.

The big problem with too many parameters is having enough training

data to estimate them reliably. (I said this last lecture but it

deserves a repeat.)

A Quantitative Analysis of Lexical Differences Between Genders in Telephone Conversations

Constantinos Boulis and Mari Ostendorf, ACL 2005

Fisher corpus of more than 16,000 transcribed telephone conversations (about 10 minutes each).

Speakers were told what subject to discuss.

Can we determine gender of the speaker from what words they used?

Most frequent words in any spoken corpus are very common words that are usually

ignored in estimation, called "stop words." These include

Stop words are often omitted in classification, because they don't

tell us much about the topic. However, this is task dependent. Stop

words can provide useful information about the speaker's mental state,

relation to the listener, etc.

See

James Pennebaker "The secret life of pronouns".

Words most useful for discriminating speaker's gender (i.e. used much more by men than women

or vice versa)

This paper compares the accuracy of Naive Bayes to that of an SVM

classifier, which is a more sophisticated classification technique.

The more sophisticated classifier makes an improvement, but not a dramatic one.

Text classification with Naive Bayes.

\( P(C | E_1,...E_n) \ \propto\ P(E_1|C) * ....* P(E_n|C) * P(C) \

= \ P(C) * \prod_{k=1}^n P(E_k|C) \)

\(P(\text{UK} | W_1, \ldots, W_n) \ \propto\ P(\text{UK}) * \prod_{k=1}^n P(W_k | \text{UK} ) \)

\( P(\text{US} | W_1, \ldots, W_n) \ \propto\ P(\text{US}) * \prod_{k=1}^n P(W_k | \text{US})\)

Headline results

Gender classification example