Classifiers turn sets of input features into labels. At a very high level, classifiers can be used to classify static objects and to make decisions.

We've seen a naive Bayes classifier used to identify the type of a text, e.g. to label extracts such as the following as math text, news text, or a historical document.

Let V be a particular set of vertices of cardinality n. How many different (simple, undirected) graphs are there with these n vertices? Here we will assume that we are naming the edges of the graph using a pair of vertices (so you cannot get new graphs by simply renaming edges). Briefly justify your answer. (from CS 173 study problems)

Senate Majority Leader Mitch McConnell did a round of interviews last week in which he said repealing Obamacare and making cuts to entitlement programs remain on his agenda. Democrats are already using his words against him. (from CNN, 21 October 2018)

Congress shall make no law respecting an establishment of religion, or prohibiting the free exercise thereof; or abridging the freedom of speech, or of the press; or the right of the people peaceably to assemble, and to petition the Government for a redress of grievances.
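As a rough illustration of how a naive Bayes classifier might separate extracts like these, here is a minimal sketch. The training sentences, the label names, and the functions `train` and `classify` are all invented for this example. A real system would be trained on far more text.

```python
import math
from collections import Counter, defaultdict

# Hypothetical toy training data (invented for illustration).
TRAIN = [
    ("math", "let v be a set of vertices how many graphs have these vertices"),
    ("math", "prove that the graph has n edges and justify your answer"),
    ("news", "the senate majority leader said democrats oppose the cuts"),
    ("news", "in interviews last week the senator discussed his agenda"),
    ("historical", "congress shall make no law abridging the freedom of speech"),
    ("historical", "the right of the people peaceably to assemble and petition the government"),
]

def train(examples):
    """Count documents per label and word occurrences per label."""
    label_counts = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for label, text in examples:
        label_counts[label] += 1
        for word in text.split():
            word_counts[label][word] += 1
            vocab.add(word)
    return label_counts, word_counts, vocab

def classify(text, model, alpha=1.0):
    """Return the label maximizing log P(label) + sum of log P(word | label)."""
    label_counts, word_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in label_counts:
        score = math.log(label_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            if word not in vocab:
                continue  # skip words never seen in training
            # Laplace (add-alpha) smoothing avoids zero probabilities.
            smoothed = (word_counts[label][word] + alpha) / (total_words + alpha * len(vocab))
            score += math.log(smoothed)
        if score > best_score:
            best_label, best_score = label, score
    return best_label

model = train(TRAIN)
print(classify("how many edges does the graph have", model))
```

Working in log space avoids numerical underflow when many small probabilities are multiplied together.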

Classifiers can also be used to label low-level input. For example, we can label what kind of object is in a picture. Or we can identify what phone has been said in each timeslice of a speech signal.

from Alex Krizhevsky's web page

"computer"

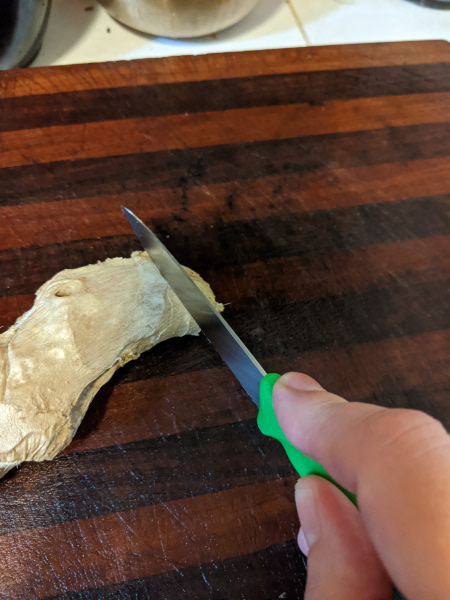

Classifiers can also be used to make decisions. For example, we might examine the state of a video game and decide whether to move left, move right, or jump. In the pictures below, we might want to decide if it's safe to move forwards along the path or predict what will happen to the piece of ginger.

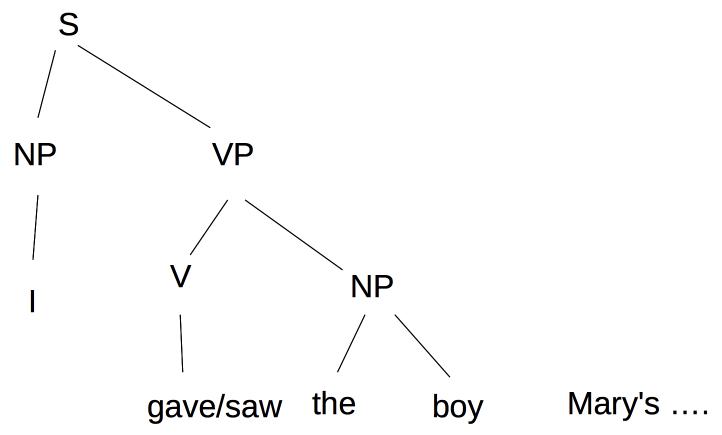

Classifiers may also be used to make decisions internally to an AI system. Suppose that we have constructed the following parse tree and the next word we see is "Mary's".

We have to decide how to attach "Mary's" into the parse tree. In "I gave the boy Mary's hat," "Mary's hat" will be attached to the VP as a direct object. In "I saw the boy Mary's going out with," the phrase "Mary's going out with" is a modifier on "boy." The right choice here depends on the verb (gave vs. saw) and lookahead at the words immediately following "Mary's".

Current algorithms require a clear (and fairly small) set of categories to classify into. That's largely what we'll assume in later lectures. But this assumption is problematic, and could create issues for deploying algorithms into the unconstrained real world.

For example, the four objects below are all quite similar, but nominally belong to three categories (peach, Asian pear, and two apples). How specific a label are we looking for, e.g. "fruit", "pear", or "Asian pear"? Should the two apples be given distinct labels, given that one is a Honeycrisp and one is a Granny Smith? Is the label "apple" correct for a plastic apple, or an apple core? Are some classification mistakes more/less acceptable than others? E.g. mislabelling the Asian pear as an apple seems better than calling it a banana, and a lot better than calling it a car.
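One way to make "some mistakes are more acceptable than others" concrete is to score a mistake by how far apart the two labels sit in a label hierarchy. The sketch below assumes a small hand-built taxonomy (`PARENT`) and a hypothetical cost function `mistake_cost`. Both are illustrative assumptions, not a standard scheme.

```python
# Hypothetical taxonomy: each label maps to its parent category.
PARENT = {
    "asian pear": "pear",
    "pear": "pome fruit",
    "honeycrisp": "apple",
    "granny smith": "apple",
    "apple": "pome fruit",
    "pome fruit": "fruit",
    "peach": "fruit",
    "banana": "fruit",
    "fruit": "object",
    "car": "object",
}

def ancestors(label):
    """Return [label, parent, grandparent, ...] up to the root."""
    chain = [label]
    while chain[-1] in PARENT:
        chain.append(PARENT[chain[-1]])
    return chain

def mistake_cost(true_label, predicted_label):
    """Number of taxonomy edges between the two labels (0 if identical)."""
    true_chain = ancestors(true_label)
    pred_chain = ancestors(predicted_label)
    for steps_up, node in enumerate(true_chain):
        if node in pred_chain:
            return steps_up + pred_chain.index(node)
    raise ValueError("labels share no common ancestor")

for guess in ["pear", "apple", "banana", "car"]:
    print(guess, mistake_cost("asian pear", guess))
```

Under this toy taxonomy, calling the Asian pear an apple costs less than calling it a banana, which in turn costs less than calling it a car. The same hierarchy also suggests how specific a label could be: "pear" is a coarser but still correct answer for an Asian pear.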

What happens if we run into an unfamiliar type of object? Suppose, for example, that we have never seen an Asian pear. This problem comes up a lot, because people can be surprisingly dim about naming common objects, even in their first language. Do you know what a parsnip looks like? How about a plastron? Here is an amusing story about informant reliability from Robert M. Laughlin (1975, The Great Tzotzil Dictionary). In this situation, people often use the name of a familiar object, perhaps with a modifier, e.g. a parsnip might be "like a white carrot but it tastes different." Or they may identify the general type of object, e.g. "vegetable."

Some unfamiliar objects and activities can be described in terms of their components. E.g. the wind chime below has a big metal disk and some mysterious wood parts. The middle picture below is "a house with a shark sticking out of it." The bottom picture depicts ironing under water.

shark from Wikipedia, diver from Sad and Useless

When labelling objects, people use context as well as intrinsic properties. This can be particularly important when naming a type of object whose appearance can vary substantially, e.g. the vases in the picture below.

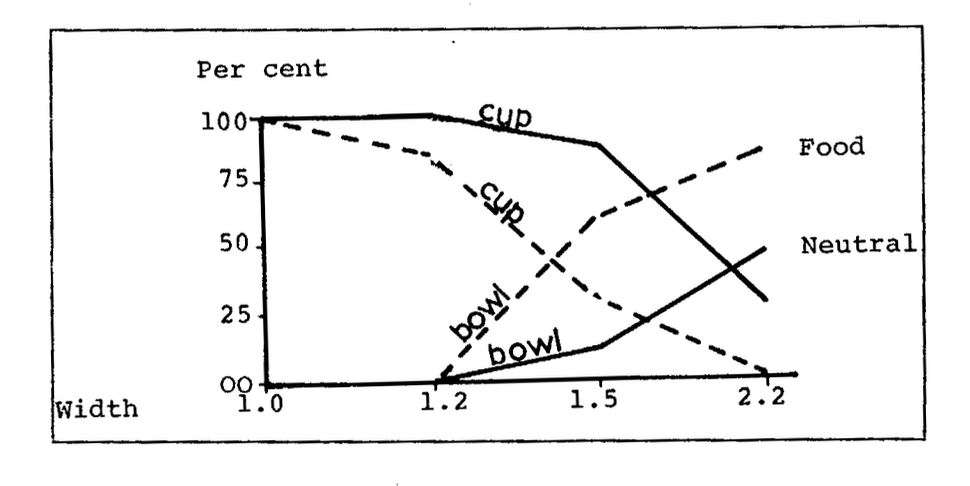

A famous experiment by William Labov (1975, "The boundaries of words and their meanings") showed that the relative probabilities of two labels (e.g. cup vs. bowl) change gradually as properties (e.g. aspect ratio) are varied. For example, the tall container with no handle (left) is probably a vase. The round container on a stem (right) is probably a glass. But what are the two in the middle?

The very narrow container was sold as a vase. But filling it with liquid would encourage people to call it a glass (perhaps for a fancy liquor). Installing flowers in the narrow wine glass makes it seem more like a vase. And you probably didn't worry when I called it a "vase" above. The graph below (from the original paper) shows that imagining a food context makes the same picture more likely to be labelled as a bowl rather than a cup.
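The idea that label probabilities shift gradually with shape, and that context moves the boundary, can be sketched with a logistic curve. This is only a toy model: the function `p_bowl` and the values of `midpoint`, `slope`, and `context_shift` are invented for illustration and are not taken from Labov's data.

```python
import math

def p_bowl(width_to_height, food_context=False,
           midpoint=1.5, slope=4.0, context_shift=1.0):
    """Toy probability that a container is called a bowl rather than a cup.

    All parameter values are made-up assumptions, not measured quantities.
    """
    z = slope * (width_to_height - midpoint)
    if food_context:
        z += context_shift  # a food context nudges the label toward "bowl"
    return 1.0 / (1.0 + math.exp(-z))

for ratio in [1.0, 1.25, 1.5, 1.75, 2.0]:
    neutral = p_bowl(ratio)
    food = p_bowl(ratio, food_context=True)
    print(f"ratio={ratio:.2f}  neutral={neutral:.2f}  food={food:.2f}")
```

In this sketch there is no sharp cutoff between the two labels. The probability of "bowl" rises smoothly as the container gets wider, and the food context raises it at every aspect ratio.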

With complex scenes, it's not clear how many objects to explicitly call out. E.g. is the picture below showing a garden or a street? Are we supposed to be labelling each plant? Should we mark out the street lamp? The sidewalk?

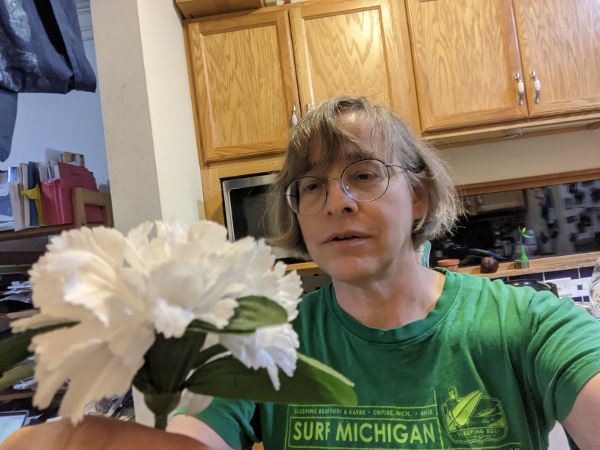

When you're talking to another human, it's much clearer what aspects of the scene are important. First, there is usually some larger context that the conversation fits into (e.g. "gardening" or "traffic"). Second, people look at things they are interested in, e.g. compare the two pictures below. People have a very strong ability to track another person's gaze while watching what they are doing. Because of their close association with humans, the same applies to dogs. Using gaze to identify interest is believed to be an integral part of how children associate names with objects. Lack of appropriate eye contact is one reason that conversations feel glitchy on video conferencing systems.

It's tempting to see this problem as specific to computer vision. However, there are similar examples from other domains. E.g. in natural language processing, it's common to encounter new words. These can be unfamiliar proper nouns (e.g. "Gauteng", "covid"). Or they may be similar to familiar words, e.g. typos ("coputer") or new compounds ("astroboffin") or melds ("brexit", "splinch"). Phone recognition may involve more or less detail and mistakes may be minor (e.g. "t" vs. "d") or major (e.g. "t" vs. "a"). And people use prosodic features (e.g. length, stress) to indicate which words are most important for the listener to pay attention to.
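For the typo case, one simple strategy is to map an unfamiliar word to the most similar word in a known vocabulary, and to give up when nothing is close enough. The sketch below uses `difflib.get_close_matches` from the Python standard library. The vocabulary, the cutoff value, and the helper name `guess_known_word` are assumptions made for illustration.

```python
import difflib

# Hypothetical tiny vocabulary (invented for illustration).
VOCABULARY = ["computer", "commuter", "copper", "cup"]

def guess_known_word(word, vocabulary=VOCABULARY, cutoff=0.8):
    """Return the most similar known word, or None if nothing is close."""
    matches = difflib.get_close_matches(word.lower(), vocabulary, n=1, cutoff=cutoff)
    return matches[0] if matches else None

print(guess_known_word("coputer"))
print(guess_known_word("Gauteng"))
```

A typo such as "coputer" lands close to a familiar word, whereas a genuinely new proper noun such as "Gauteng" matches nothing. In the second case the system still has to decide what to do with a word it cannot label.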