CS440/ECE448 Fall 2020

Assignment 7: Reinforcement Learning

Deadline: Wednesday, December 9th, 11:59:59PM

Created by Ningkai Wu, Yiran Kang

Updated by Ruicheng Xian, Gao Tang, Xiaoming Zhao

Image from Wikipedia

Snake is a famous video game originated in the 1976 arcade game Blockade. The player uses up, down, left and right to control the snake which grows in length (when it eats the food pellet), with the snake body and walls around the environment being the primary obstacle. In this assignment, you will train AI agents using reinforcement learning to play a simple version of the game snake. You will implement a TD version of the Q-learning algorithm.

Contents

- Provided Snake Environment

- Q-learning Agent

- Training your Agent

- Debugging Examples

- Provided Code and Submission

Provided Snake Environment

Snake

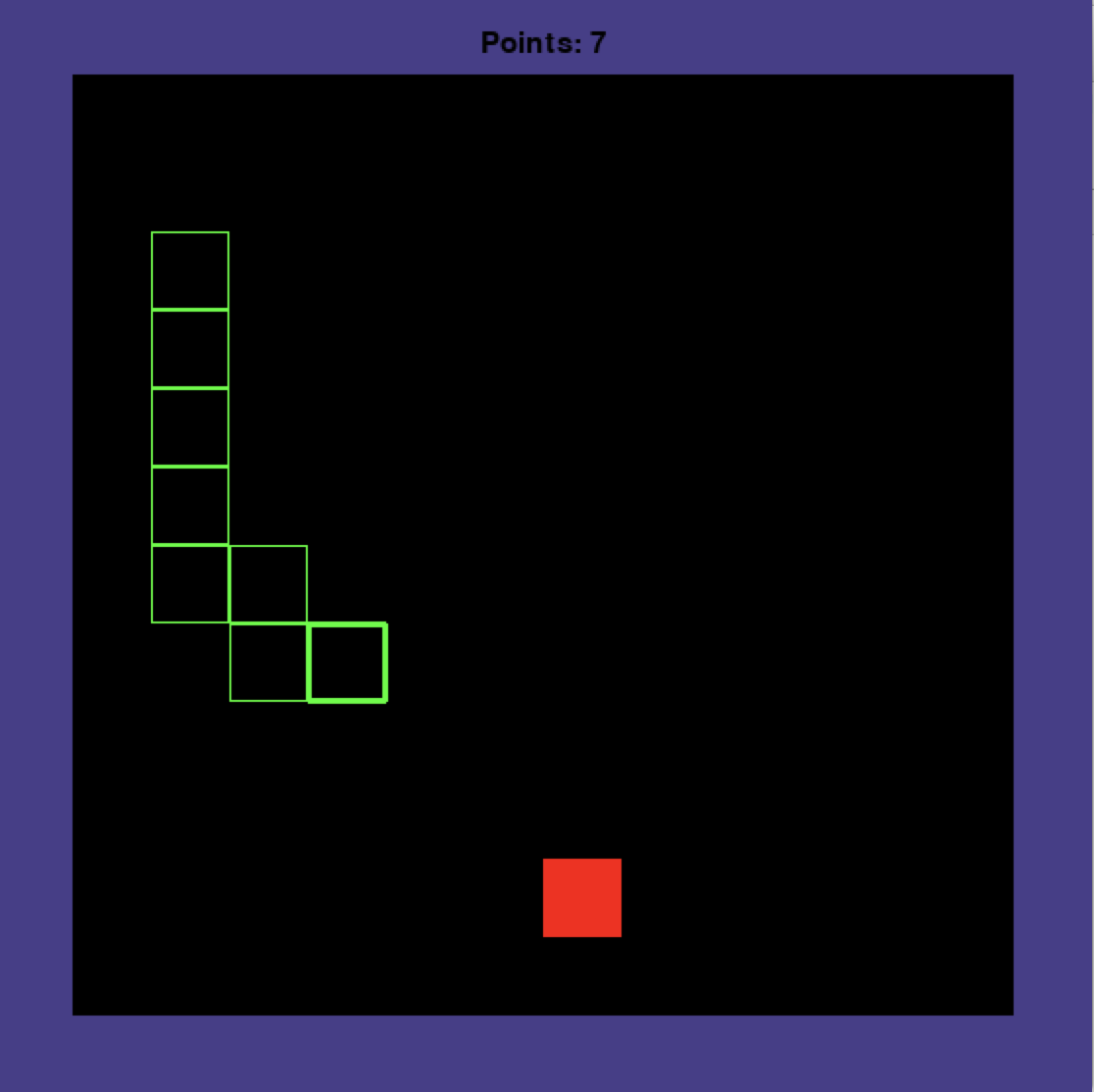

In this assignment, the size of the entire game board is 560x560. The size for every side of wall (filled with blue) is 40. The snake head, body segment and food pellet have the same size of 40x40. Snake moves with a speed of 40 per frame. In this setup, the entire board that our snake agent can move has a size of 480x480 and can be treated as a 12x12 grid.

The green rectangle is the snake agent and the red rectangle is the food pellet. The snake head is marked with a thicker boarder for easier recognition. When a food pellet is eaten, a new food pellet is generated randomly on the board. Every time it eats a food pellet, the points increases by 1 and its body grows one segment. The snake starves after taking 1568 > 8*12*12 steps without food.

Before implementing the Q-learning algorithm, we must first define Snake as a Markov Decision Process (MDP). In Q-learning, state variables do not represent the full details of the environment. They are a simplified represention containing enough information to let the agent make decisions. The smaller the state space, the more quickly the agent will be able to explore it all. So, when your agent is given the environment state, you need to convert it to the state space as defined below.

Each state in the MDP is a tuple (adjoining_wall_x, adjoining_wall_y, food_dir_x, food_dir_y, adjoining_body_top, adjoining_body_bottom, adjoining_body_left, adjoining_body_right).

-

[adjoining_wall_x, adjoining_wall_y] tells whether there is wall next to snake head. It has 9 states:

- adjoining_wall_x: 0 (no adjoining wall on x axis), 1 (wall on snake head left), 2 (wall on snake head right)

- adjoining_wall_y: 0 (no adjoining wall on y axis), 1 (wall on snake head top), 2 (wall on snake head bottom)

-

[food_dir_x, food_dir_y] tells the direction of food to snake head. It has 9 states:

- food_dir_x: 0 (same coords on x axis), 1 (food on snake head left), 2 (food on snake head right)

- food_dir_y: 0 (same coords on y axis), 1 (food on snake head top), 2 (food on snake head bottom)

-

[adjoining_body_top, adjoining_body_bottom, adjoining_body_left, adjoining_body_right] checks if there is snake body in adjoining square of snake head. It has 16 states:

- adjoining_body_top: 1 (adjoining top square has snake body), 0 (otherwise)

- adjoining_body_bottom: 1 (adjoining bottom square has snake body), 0 (otherwise)

- adjoining_body_left: 1 (adjoining left square has snake body), 0 (otherwise)

- adjoining_body_right: 1 (adjoining right square has snake body), 0 (otherwise)

Actions: Your agent's actions are chosen from the set {up, down, left, right}. The coresponding indices used inside the program are 0,1,2,3.

Rewards:

- +1 when your action results in getting the food pellet. (The snake head position is the same as the food pellet position).

- -1 when the snake dies, that is when snake head hits the wall, its body segment or the head tries to move towards its adjacent body segment (moving backwards), or starves.

- -0.1 otherwise (does not die nor get food).

Q-learning Agent

Trained Agent

You will create a snake agent to learn how to get as many food pellets as possible without dying. In order to do this, you must use Q-learning. Implement the TD Q-learning algorithm and train it on the MDP outlined above.

$$ {Q(s,a) \leftarrow Q(s,a) + \alpha(R(s)+\gamma \max_{a'}Q(s',a')-Q(s,a))}$$

During training, the learning rate \(\alpha\) should decay as C/(C+N(s,a)), where N(s,a) is the number of times you have seen the given the state-action pair.

You must use the exploration policy defined in Russell and Norvig. Specifically:

$$ a = \text{argmax}_{a}\ \ f(\ Q(s,a), N(s,a)\ )$$

N(s,a) is the number of times we've taken action a in state s. f(u,n) returns 1 if n is less than a tuning parameter Ne, otherwise it returns u. In plain English, this means that the agent will choose an action that hasn't yet been explored enough times. If there are no such actions, it will choose the action with the highest q value.

Ties in this policy must be broken using the priority order right > left > down > up.

Training your agent

During training, your agent needs to update your Q-table, then get the next action using the above exploration policy, and then update N-table with that action. The first step is skipped when the initial state and action are None. If the game is over, that is when the dead variable becomes true, you only need to update your Q table and reset the game. During testing, your agent only needs to give the best action using Q-table.

Train your agent for as long as you deem necessary, counting the average number of points your agent can get. Your average over 1000 test games should be at least 20. Once you have this working, you can try to improve performance by adjusting the parameters \(\gamma\), Ne, and C. Do not hardcode any of these parameter settings, because some of the autograder's tests will pass in specific values for them.

The autograder will initialize the environment with various values for the initial snake and food pellet positions. The first random choice by the environment happens when it chooses a position for the second food pellet. So your Q-table should exactly match ours through the point when the first food pellet is eaten.

Tips:

- Initially, all the Q value estimates should be 0.

- In a reasonable implementation, you should see your average points increase in seconds.

- You can run python mp7.py --human to play the game yourself.

Debugging Examples

To make your debugging easier we provide three debug examples of Q- and N-tables for you, included in the skelecton code's data folder. Each Q- and N-table is generated exactly after snake eats the first food in training process. More specifically, it's the first time when snake reaches exactly 1 point in training. At that point, mp7.py saves a file checkpoint.npy containing the Q table and a file checkpoint_N.npy containing the N table. You can use diff or python code to compare these to the model table for each example below:

- [Debug Example 1] snake_head_x=200, snake_head_y=200, food_x=80, food_y=80, Ne=40, C=40, gamma=0.7 data/checkpoint1.npy

- [Debug Example 2] snake_head_x=200, snake_head_y=200, food_x=80, food_y=80, Ne=20, C=60, gamma=0.5 data/checkpoint2.npy

- [Debug Example 3] snake_head_x=120, snake_head_y=120, food_x=320, food_y=320, Ne=30, C=30, gamma=0.6 data/checkpoint3.npy

Making sure you can pass these debug examples will help you a lot for passing this part of the autograder.

Provided Code Skeleton

We have provided skeleton.zip containing the supplied code (described below) and the debugging examples described above. Do not import any non-standard libraries except pygame (pygame version 1.9.6) and numpy.

- mp7.py - This is the main file that starts the program. This file runs the snake game with your implemented agent acting in it. The code runs a number of training games, then a number of testing games, and then displays example games at the end.

- snake.py - This is the file that defines the snake environment and creates the GUI for the game.

- utils.py - This is the file that defines some of the discretization constants as defined above and contains the functions to save and load models.

- agent.py This is the file where you will be doing all of your work. This file contains the Agent class. This is the agent you will implement to act in the snake environment. Below is the list of instance variables and functions in the Agent class.

You should submit the file agent.py on gradescope. Do not modify the other provided files.

Inside agent.py, you will find the following methods:

- self._train: This is a boolean flag variable that you should use to determine if the agent is in train or test mode. In train mode, the agent should explore(based on exploration function) and exploit based on the Q table. In test mode, the agent should purely exploit and always take the best action.

- train(): This function sets the self._train to be True. This is called before the training loop is run in mp7.py

- test(): This function sets the self._train to be False. This is called before the testing loop is run in mp7.py

- save_model(): This function saves the self.Q table. This is called after the training loop in mp7.py.

- load_model(): This function loads the self.Q table. This is called before the testing loop in mp7.py.

- act(state, points, dead): This is the main function you will implement and is called repeatedly by mp7.py while games are being run.

Inside act(state,points,dead):

- "state" is the state from the snake environment and is a list of [snake_head_x, snake_head_y, snake_body, food_x, food_y](Notice that in act function, you first need to discretize this into the state configuration we defined above).

- "points" is the number of food the snake has eaten.

- "dead" is a boolean indicating if the snake is dead. "points", "dead" should be used to define your reward function.

- act should return a number from the set of {0,1,2,3}. Returning 0 will move the snake agent up, returning 1 will move the snake agent down, and returning 2 will move the agent left, returning 3 will move the agent right.

- If self._train is True, act should update the Q table before returning the chosen action. If self._train is False, the agent should simply return the best action based on the Q table.