from Wikipedia


What do words mean?

Why do we care?

What are they useful for?

In Russell and Norvig style: what concepts does a rational agent use to do its reasoning?

Seen from a neural net perspective, the key issue is that one-hot representations of words don't scale well. E.g. with a 100,000-word vocabulary, each word is a vector of length 100,000 containing a single 1 and 99,999 zeros. We should be able to give each word a distinct representation using far fewer parameters.
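To make the scaling issue concrete, here is a minimal NumPy sketch contrasting a one-hot vector with a dense embedding lookup. The embedding size (50) and the random table are assumptions for illustration only; a real system would learn the table's values. With the dense form, each word is described by 50 numbers instead of 100,000, so any layer that consumes word vectors needs 50 input weights per unit rather than 100,000.

```python
import numpy as np

VOCAB_SIZE = 100_000  # vocabulary size from the example above
EMBED_DIM = 50        # hypothetical embedding size, chosen for illustration

def one_hot(index: int, size: int = VOCAB_SIZE) -> np.ndarray:
    """All zeros except a single 1.0 at position `index`."""
    v = np.zeros(size, dtype=np.float32)
    v[index] = 1.0
    return v

# Dense embedding table: one row of EMBED_DIM numbers per word.
# Random values stand in for values that would normally be learned.
rng = np.random.default_rng(0)
embedding_table = rng.standard_normal((VOCAB_SIZE, EMBED_DIM), dtype=np.float32)

word_id = 4242  # arbitrary word index
x = one_hot(word_id)

# Multiplying a one-hot vector by the table selects exactly one row,
# so the product equals a direct lookup.
dense = x @ embedding_table

print(x.shape, dense.shape)  # (100000,) (50,)
print(np.allclose(dense, embedding_table[word_id]))  # True
```

Because the product just picks out one row, embedding layers in neural-net libraries are typically implemented as table lookups rather than as matrix multiplications.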

We can use models of word meaning for a variety of practical tasks.

For each word, we'd like to learn

Many meanings can be expressed by either a fancier or a plainer word. E.g. bellicose (fancy) and warlike (plain) mean the same thing. Words can also describe the same property in more or less complimentary terms, e.g. plump (nice) vs. fat (not nice). This leads to jokes about "irregular conjugations" such as

Definition of "bird" from Oxford Living Dictionaries (Oxford University Press)

"A warm-blooded egg-laying vertebrate animal distinguished by the possession of feathers, wings, a beak, and typically by being able to fly."

Back in the Day, people tried to turn such definitions into representations with the look and feel of formal logic:

isa(bird, animal)

AND has(bird, wings)

AND flies(bird)

AND if female(bird), then lays(bird,eggs) ....

Problems:

The above example is not only incomplete but also has a bug: not all birds fly. It also contains very little information on what birds look like or how they act, so it is not much help for recognition.
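The "not all birds fly" bug is easy to see if the logical definition is written as a hard-coded predicate. Note that the dictionary definition hedges with "typically by being able to fly", but the logic version turns flying into a strict requirement. In this sketch the `Animal` fields and the three example animals are hypothetical, made up only to illustrate how a conjunction of required properties behaves.

```python
from dataclasses import dataclass

@dataclass
class Animal:
    # Hypothetical feature set, loosely following the dictionary definition.
    name: str
    vertebrate: bool
    has_feathers: bool
    has_wings: bool
    can_fly: bool

def is_bird_by_rule(a: Animal) -> bool:
    """Rigid logical definition in the spirit of isa/has/flies above:
    every condition must hold, including the buggy 'flies' clause."""
    return a.vertebrate and a.has_feathers and a.has_wings and a.can_fly

animals = [
    Animal("robin", True, True, True, True),
    Animal("penguin", True, True, True, False),  # a bird that cannot fly
    Animal("bat", True, False, True, True),      # flies, but is not a bird
]

for a in animals:
    print(a.name, is_bird_by_rule(a))
# robin True
# penguin False   <- the rule wrongly rejects a real bird
# bat False
```

Patching the rule (e.g. adding exceptions for penguins, ostriches, and injured birds) quickly becomes an endless list, which is part of why such hand-written definitions fell out of favor.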

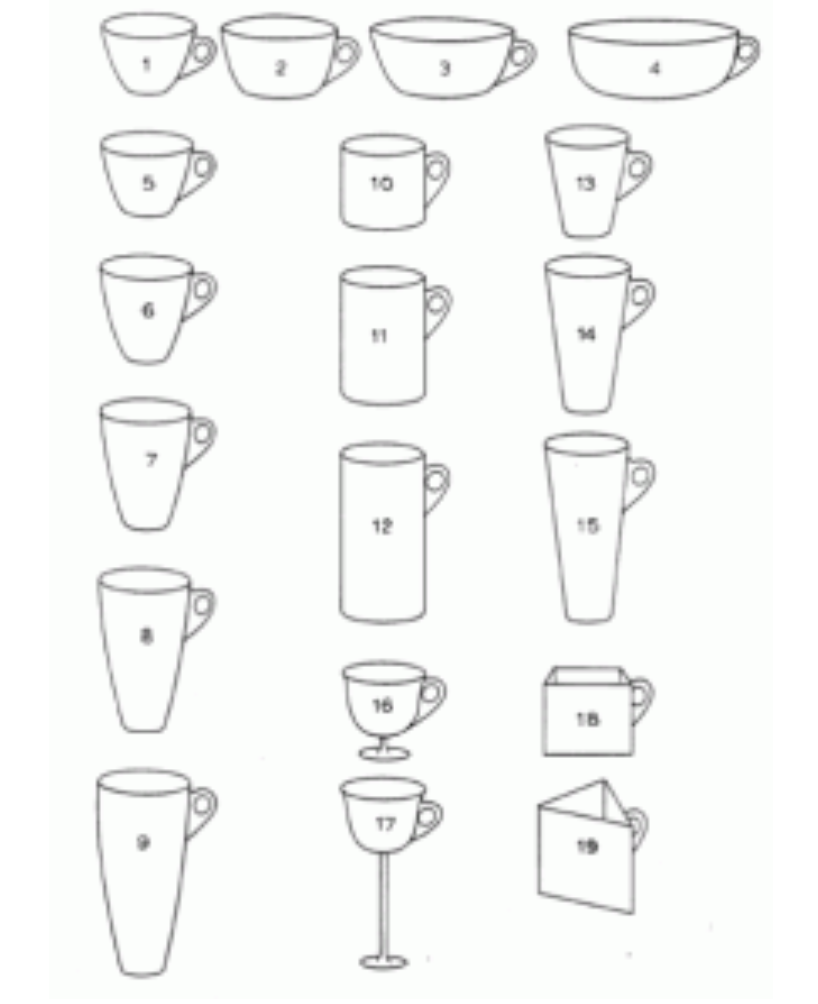

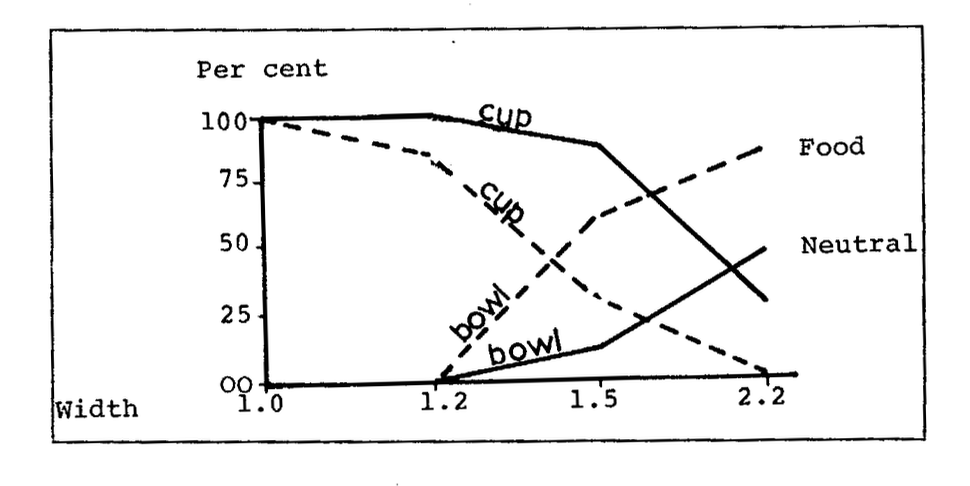

When labelling objects, people use context as well as intrinsic properties. A famous experiment by William Labov (1975, "The boundaries of words and their meanings") showed that the relative probabilities of two labels (e.g. cup vs. bowl) change gradually as properties (e.g. aspect ratio) are varied. Imagining food (e.g. rice) in the container makes the bowl label more likely. Flowers would encourage a container with ambiguous shape to be called a vase. The figures above show some of the pictures used and a graph of how often subjects used "bowl" vs "cup" in neutral and food contexts.

People are also sensitive to the overall structure of the vocabulary. The "Principle of Contrast" states that differences in form imply differences in meaning (Eve Clark 1987, though the idea goes back earlier). For example, kids will say things like "that's not an animal, that's a dog." Adults have a better model, in which words can refer to more or less general categories of objects, but they still make mistakes like "that's not a real number, it's an integer." Apparent synonyms seem to inspire a search for some small difference in meaning. For example, how is "graveyard" different from "cemetery"? Perhaps cemeteries are prettier, or not adjacent to a church, or fancier, or ...

People can also be convinced that two meanings are distinct because an expert says they are, even when they cannot explain how they differ (an observation originally due to Hilary Putnam). For example, pewter (used to make food dishes) and nickel silver (used to make keys for instruments) are similar-looking, dull, silver-colored metals used as replacements for silver. The difference in name tells people that they must be different. But most people would have to trust an expert because they can't tell them apart. Even fewer could tell you that they are alloys with these compositions:

A different approach to representing word meanings, which has recently been working better in practice, is to observe how people use each word.

"You shall know a word by the company it keeps" (J. R. Firth, 1957)

People have a lot of information to work with, e.g. what's going on when the word was said, how other people react to it, even actual explanations from other people. Most computer algorithms only get to see running text. So we approximate this idea by observing which words occur in the same textual context. That is, words that occur together often share elements of meaning.

Figuring out an unfamiliar word from examples in context:

Authentic biltong is flavored with coriander.

John took biltong on his hike.

Antelope biltong is better than ostrich biltong.

The first context suggests it's food. The second context suggests that it's not perishable. The third suggests it involves meat.
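The "company it keeps" idea can be sketched by counting which words appear near each word in the three biltong sentences. This is a minimal Python sketch under our own assumptions: lowercase alphabetic tokens only, and a symmetric window of 2 words on each side. Both choices are illustrative, not fixed by the method.

```python
import re
from collections import Counter, defaultdict

def cooccurrence_counts(sentences, window=2):
    """For each word, count the words appearing within `window` tokens of it.

    Returns a dict mapping word -> Counter of context words.
    """
    counts = defaultdict(Counter)
    for sentence in sentences:
        # Lowercase and keep only alphabetic tokens (drops punctuation).
        tokens = re.findall(r"[a-z]+", sentence.lower())
        for i, word in enumerate(tokens):
            lo = max(0, i - window)
            hi = min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:  # a word is not its own context
                    counts[word][tokens[j]] += 1
    return counts

corpus = [
    "Authentic biltong is flavored with coriander.",
    "John took biltong on his hike.",
    "Antelope biltong is better than ostrich biltong.",
]

counts = cooccurrence_counts(corpus, window=2)
print(counts["biltong"]["is"])   # 2
print(sorted(counts["biltong"]))
```

Each word's row of counts is already a crude vector describing it. With a window of 2, "coriander" and "hike" fall outside the window, so the choice of window size changes which cues are captured. Practical systems use far larger corpora and usually reweight (e.g. with pointwise mutual information) or compress these counts.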

Idea: let's represent each word as a vector of numerical feature values.

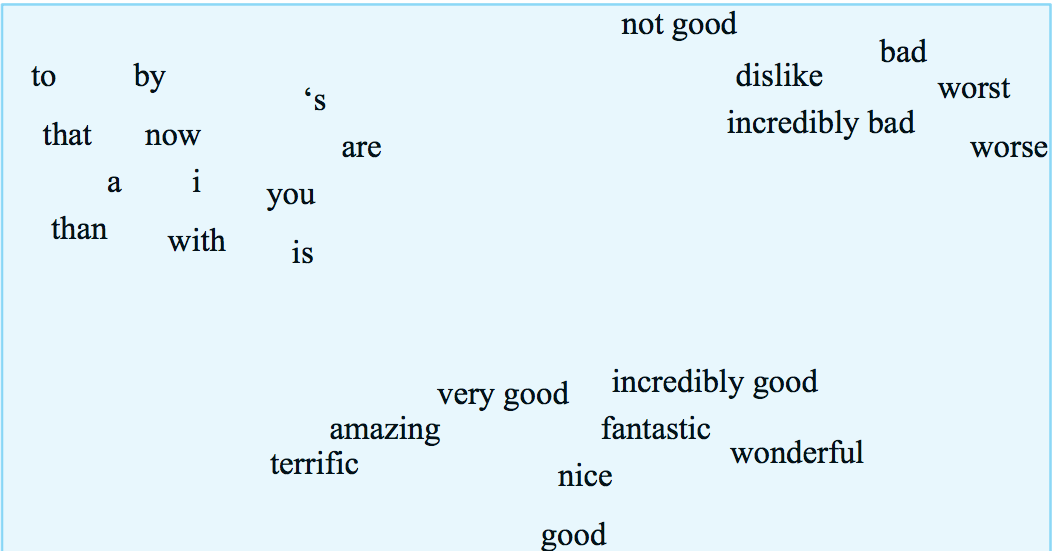

These feature vectors are called word embeddings. In an ideal world, the embeddings might look like this embedding of words into 2D, except that the space has a lot more dimensions. (In practice, a lot of massaging would be required to get a picture this clean.)
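Once words are vectors, similarity of meaning can be measured geometrically. One common choice (among several) is cosine similarity, the cosine of the angle between two vectors. The four 2-D vectors below are hand-picked, invented purely for illustration; real embeddings are learned from data and have many more dimensions.

```python
import numpy as np

# Hypothetical 2-D embeddings, hand-picked for illustration only.
embeddings = {
    "good":  np.array([0.9, 0.8]),
    "great": np.array([1.0, 0.7]),
    "bad":   np.array([-0.9, 0.6]),
    "awful": np.array([-1.0, 0.5]),
}

def cosine(u, v):
    """Cosine of the angle between u and v (1.0 = same direction)."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))

def nearest(word):
    """Return the other word whose embedding is most similar to `word`'s."""
    target = embeddings[word]
    others = [w for w in embeddings if w != word]
    return max(others, key=lambda w: cosine(target, embeddings[w]))

print(nearest("good"))   # great
print(nearest("awful"))  # bad
```

Cosine similarity ignores vector length and compares only direction, which is why it is a popular default for comparing embeddings.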

from Jurafsky and Martin


Apparently Tesla's software doesn't know much about police, leaving them with an interesting puzzle: how to persuade an autonomous vehicle to pull over.