Boaty McBoatface (from the BBC)

Last class, we sketched the process of getting from speech or raw (potentially messy) text input to a clean sequence of words. By "word," I mean a chunk of a size that's convenient for later algorithms (e.g. parsing, translation).

This is often a standard written word. But it may be a merger of standard words if these are inconveniently short (e.g. Chinese) or a partial word in languages (e.g. Turkish, German) whose words are inconveniently long. It might mean a morpheme, i.e. a minimal meaning unit, but those are usually seen as too short.

Models of human language understanding typically assume a first stage that produces a reasonably accurate sequence of phones. (That's not always true in practice.) The sequence of phones must then be segmented into a sequence of words by a "word segmentation" algorithm. The process might look like this, where # marks a literal pause in speech (e.g. the speaker taking a breath).

INPUT: ohlThikidsinner # ahrpiyp@lThA?HAvkids # ohrThADurHAviynqkids

OUTPUT: ohl Thi kids inner # ahr piyp@l ThA? HAv kids # ohr ThADur HAviynq kids

In standard written English, this would be "all the kids in there # are people that have kids # or that are having kids".
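To make the segmentation step concrete, here is a minimal sketch that splits each pause-delimited chunk into words from a known lexicon, using dynamic programming to prefer the segmentation with the fewest words. The lexicon, the function names, and the "fewest words" criterion are all assumptions made for illustration. Real systems score candidate segmentations statistically and must cope with noisy phone sequences.

```python
# Toy lexicon of phone strings (hypothetical; taken from the example above).
LEXICON = {"ohl", "Thi", "kids", "inner", "ahr", "piyp@l",
           "ThA?", "HAv", "ohr", "ThADur", "HAviynq"}


def segment(phones, lexicon):
    """Split `phones` into lexicon entries, preferring fewer words.

    Returns a list of words, or None if no segmentation exists.
    """
    n = len(phones)
    # best[i] is the shortest word list covering phones[:i], or None.
    best = [None] * (n + 1)
    best[0] = []
    for end in range(1, n + 1):
        for start in range(end):
            piece = phones[start:end]
            if best[start] is not None and piece in lexicon:
                candidate = best[start] + [piece]
                if best[end] is None or len(candidate) < len(best[end]):
                    best[end] = candidate
    return best[n]


def segment_utterance(utterance, lexicon=LEXICON):
    """Segment each chunk between pauses (marked ' # ') separately."""
    chunks = utterance.split(" # ")
    # A chunk that cannot be segmented is left as-is.
    return " # ".join(" ".join(segment(c, lexicon) or [c]) for c in chunks)


print(segment_utterance(
    "ohlThikidsinner # ahrpiyp@lThA?HAvkids # ohrThADurHAviynqkids"))
```

Treating pauses as hard boundaries shrinks the search, since no word is allowed to span a pause.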

These words then need to be divided into morphemes by a "morphology" algorithm. Words in some languages can get extremely long (e.g. Turkish), making it hard to do further processing unless they are (at least partly) subdivided into morphemes. For example:

unanswerable --> un-answer-able

preconditions --> pre-condition-s
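A very rough sketch of morphological splitting is affix stripping against small lists of known prefixes, stems, and suffixes. The lists and function names below are hypothetical and cover only the two examples above. Real morphological analyzers also have to handle spelling changes and ambiguity, which this sketch ignores.

```python
# Hypothetical, tiny affix and stem lists for illustration only.
PREFIXES = ("un", "pre")
SUFFIXES = ("able", "s")
STEMS = ("condition", "answer")


def _split_suffixes(tail):
    """Split `tail` into a sequence of known suffixes, or return None."""
    if not tail:
        return []
    for suffix in SUFFIXES:
        if tail.startswith(suffix):
            rest = _split_suffixes(tail[len(suffix):])
            if rest is not None:
                return [suffix] + rest
    return None


def split_morphemes(word):
    """Analyze `word` as (optional prefix) + stem + (zero or more suffixes).

    Returns [word] unchanged if no such analysis is found.
    """
    prefixes_to_try = [""] + [p for p in PREFIXES if word.startswith(p)]
    for prefix in prefixes_to_try:
        rest = word[len(prefix):]
        for stem in STEMS:
            if rest.startswith(stem):
                suffixes = _split_suffixes(rest[len(stem):])
                if suffixes is not None:
                    return ([prefix] if prefix else []) + [stem] + suffixes
    return [word]


for w in ("unanswerable", "preconditions"):
    print(w, "-->", "-".join(split_morphemes(w)))
```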

Systems starting from textual input may face a similar segmentation problem. Some writing systems do not put spaces between words.

NLP systems may also have to fuse input units into larger ones. For example, speech recognition systems may be configured to transcribe into a sequence of short words (e.g. "base", "ball", "computer", "science") even when they form a tight meaning unit ("baseball" or "computer science"). This is a particularly important problem for writing systems such as Chinese. Consider this well-known sequence of two characters:

Zhōng + guó

Historically, this is two words ("middle" and "country") and the two characters appear in writing without overt indication that they form a unit. But they are, in fact, a single word meaning China. Grouping input sequences of characters into similar meaning units is an important first step in processing Chinese text.

In the segmentation example above, notice that "in there" has fused together into "inner" with the "th" sound changing to become like the "n" preceding it. This kind of "phonological" sound change makes both speech recognition and word segmentation much harder. In practice, the early stages of speech recognition produce buggy phoneme sequences that must be corrected by later processing.

The combination of signal processing challenges and phonological changes means that, in practice, current speech recognizers cannot actually transcribe speech into a sequence of phones without some broader-scale context. Most recognizers use a simple (ngram) model of words and word sequences to correct the raw phone recognition and, therefore, produce word sequences directly. So the actual sequence of processing stages depends on the application and whether you're talking about production computer systems or models of human language understanding.

In order to group words into larger units (e.g. prepositional phrases), the first step is typically to assign a "part of speech" (POS) tag to each word. Here is some sample text from the Brown corpus, which contains very clean written text.

Northern liberals are the chief supporters of civil rights and of integration. They have also led the nation in the direction of a welfare state.

Here's the tagged version. For example, "liberals" is an NNS, which is a plural noun. "Northern" is an adjective (JJ). Tag sets need to distinguish major types of words (e.g. nouns vs. adjectives) and major variations, e.g. singular vs. plural nouns, present vs. past tense verbs. There are also some ad-hoc tags for key function words, such as HV for "have," and for punctuation (e.g. period).

Northern/jj liberals/nns are/ber the/at chief/jjs supporters/nns of/in civil/jj rights/nns and/cc of/in integration/nn ./. They/ppss have/hv also/rb led/vbn the/at nation/nn in/in the/at direction/nn of/in a/at welfare/nn state/nn ./.

On clean text, a highly tuned POS tagger can get about 97% accuracy. In other words, POS taggers are quite reliable and mostly used as a stable starting place for further analysis.
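The word/tag format above is easy to read programmatically, and "accuracy" here simply means the fraction of tokens whose predicted tag matches the annotated one. The sketch below assumes the slash-separated format shown above. The function names are made up for illustration, and the "predicted" tags in the usage example are invented rather than output from a real tagger.

```python
def parse_tagged(text):
    """Turn 'word/tag word/tag ...' into a list of (word, tag) pairs."""
    pairs = []
    for token in text.split():
        # Split on the LAST slash so that a token like './.' works.
        word, _, tag = token.rpartition("/")
        pairs.append((word, tag))
    return pairs


def tagging_accuracy(predicted, gold):
    """Fraction of tokens whose predicted tag equals the gold tag."""
    if not gold or len(predicted) != len(gold):
        raise ValueError("need two non-empty sequences of equal length")
    correct = sum(p_tag == g_tag
                  for (_, p_tag), (_, g_tag) in zip(predicted, gold))
    return correct / len(gold)


gold = parse_tagged("the/at nation/nn in/in the/at")
guess = parse_tagged("the/at nation/nn in/rb the/at")  # one invented error
print(tagging_accuracy(guess, gold))
```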

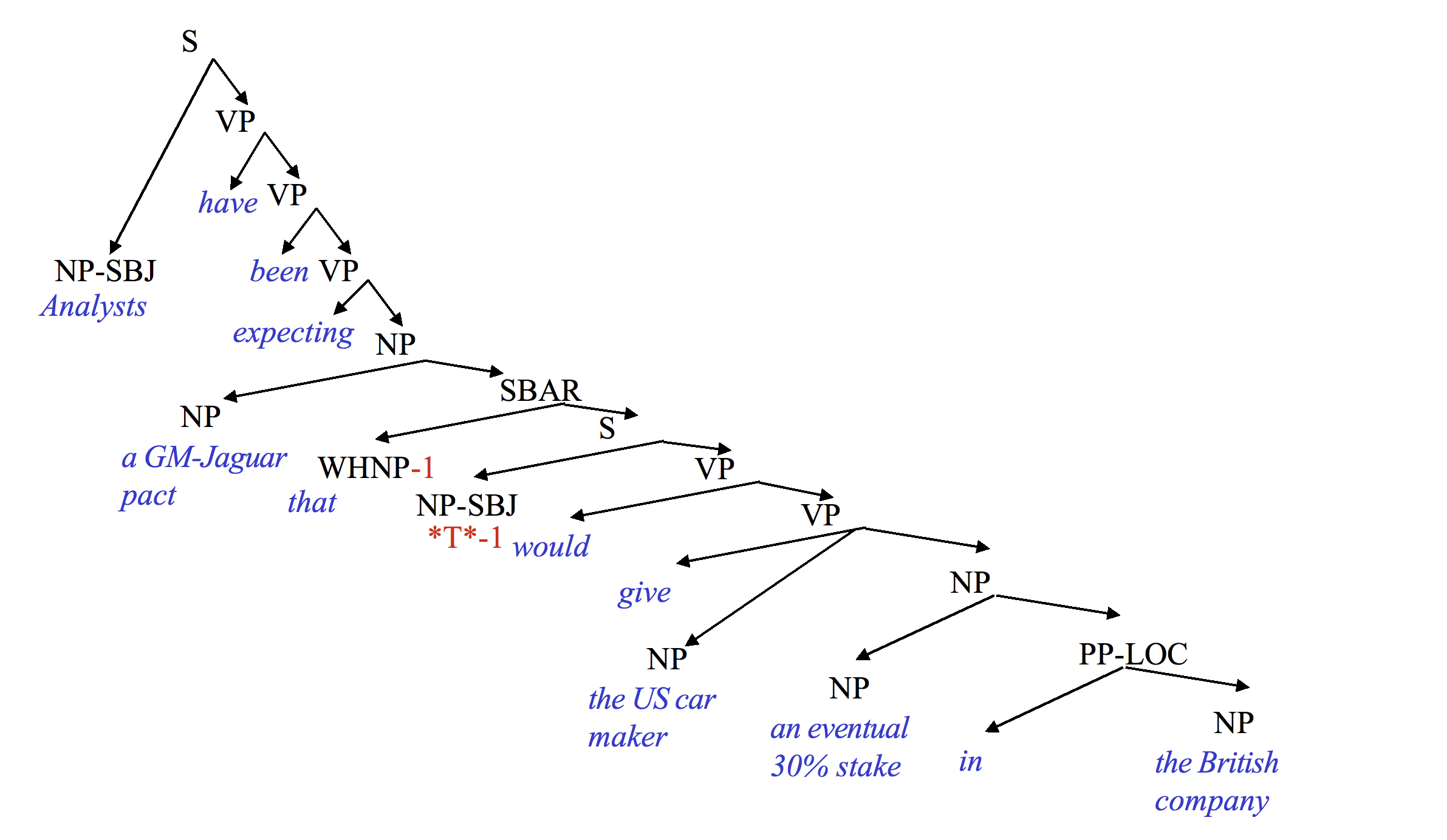

Once we have POS tags for the words, we can assemble the words into a parse tree. There are many styles for building parse trees. Here is a constituency tree from the Penn treebank (from Mitch Marcus).

Penn treebank style parse tree (from Mitch Marcus)

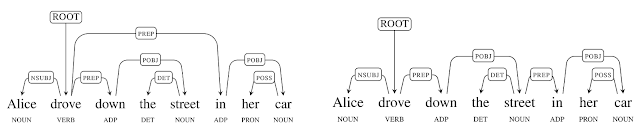

An alternative is a dependency tree like the ones below from Google labs. A fairly recent parser from them is called "Parsey McParseface," after the UK's Boaty McBoatface shown above.

In this example, the lefthand tree shows the correct attachment for "in her car," i.e. modifying "drove." The righthand tree shows an interpretation in which the street is in the car.

Linguists (computational and otherwise) have extended fights about the best way to draw these trees. However, the information encoded is always fairly similar and primarily involves grouping together words that form coherent phrases e.g. "a welfare state." It's fairly similar to parsing computer programming languages, except that programming languages have been designed to make parsing easy.

Parsers fall into two categories

The value of lexical information is illustrated by sentences like this, in which changing the noun phrase changes what the prepositional phrase modifies:

She was going down a street ...

in her truck. (modifies going)

in her new outfit. (modifies the subject)

in South Chicago. (modifies street)

The best parsers are lexicalized (accuracies up to 94% from Google's "Parsey McParseface" parser). But it's not clear how much information to include about the words and their meanings. For example, should "car" always behave like "truck"? More detailed information helps make decisions (esp. attachment) but requires more training data.

Notice that the tag set for the Brown corpus was somewhat specialized for English, in which forms of "have" and "to be" play a critical syntactic role. Tag sets for other languages would need some of the same tags (e.g. for nouns) but also categories for types of function words that English doesn't use. For example, a tag set for Chinese or Mayan would need a tag for numeral classifiers, which are words that go with numbers (e.g. "three") to indicate the approximate type of object being enumerated (e.g. "table" might require a classifier for big flat objects). It's not clear whether it's better to have specialized tag sets for specific languages or one universal tag set that includes major functional categories for all languages.

Tag sets vary in size, depending on the theoretical biases of the folks making the annotated data. Smaller sets of labels convey only basic information about word type. Larger sets include information about what role the word plays in the surrounding context. Sample sizes

Conversational spoken language also includes features not found in written language. In the example below (from the Switchboard corpus), you can see the filled pause "uh" and a broken-off word "t-". Also, notice that the first sentence is broken up by a parenthetical comment ("you know") and the third sentence trails off at the end. Such features make spoken conversation harder to parse than written text.

I 'd be very very careful and , uh , you know , checking them out . Uh , our , had t- , place my mother in a nursing home . She had a rather massive stroke about , uh, about ---

I/PRP 'd/MD be/VB very/RB very/RB careful/JJ and/CC ,/, uh/UH ,/, you/PRP know/VBP ,/, checking/VBG them/PRP out/RP ./. Uh/UH ,/, our/PRP$ ,/, had/VBD t-/TO ,/, place/VB my/PRP$ mother/NN in/IN a/DT nursing/NN home/NN ./. She/PRP had/VBD a/DT rather/RB massive/JJ stroke/NN about/RB ,/, uh/UH ,/, about/RB --/:

Another low-level application is translation. To learn how to do translation, we might align pairs of sentences in different languages, matching corresponding words.

English: 18-year-olds can't buy alcohol.

French: Les 18 ans ne peuvent pas acheter d'alcool

| 18 | year | olds | can | 't | buy | alcohol | |||

| Les | 18 | ans | ne | peuvent | pas | acheter | d' | alcool |

Notice that some words don't have matches in the other language. For other pairs of languages, there could be radical changes in word order.

A corpus of matched sentence pairs can be used to build translation dictionaries (for phrases as well as words) and extract general knowledge about changes in word order.
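As a rough illustration of how a translation dictionary might fall out of matched sentence pairs, the sketch below counts which words co-occur across paired sentences and scores each candidate pair with the Dice coefficient. This is a deliberately crude association measure chosen for brevity, not the statistical alignment models used in real translation systems. The four-sentence corpus and the function name are invented for the example.

```python
from collections import Counter
from itertools import product


def build_dictionary(sentence_pairs):
    """Map each source word to its best-scoring target word.

    Score is the Dice coefficient: 2 * c(s, t) / (c(s) + c(t)),
    where counts are over sentence pairs containing the word(s).
    """
    src_count, tgt_count, pair_count = Counter(), Counter(), Counter()
    for src, tgt in sentence_pairs:
        src_words, tgt_words = set(src.split()), set(tgt.split())
        src_count.update(src_words)
        tgt_count.update(tgt_words)
        pair_count.update(product(src_words, tgt_words))

    best = {}
    for (s, t), c in pair_count.items():
        score = 2 * c / (src_count[s] + tgt_count[t])
        if s not in best or score > best[s][1]:
            best[s] = (t, score)
    return best


# Invented toy corpus of English/French pairs.
corpus = [
    ("the house", "la maison"),
    ("the book", "le livre"),
    ("a house", "une maison"),
    ("a book", "un livre"),
]
dictionary = build_dictionary(corpus)
print(dictionary["house"], dictionary["book"])
```

Even on this toy corpus, "the" ties between "la" and "le", which hints at why word-level matching alone is not enough.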

Most of the tasks we've seen so far use simple sequence models of their input, making the assumption that only the last few items matter to the next decision (a "Markov assumption").

Specific methods include finite-state automata, hidden Markov models (HMMs), and recurrent neural nets (RNNs).
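The simplest instance of the Markov assumption is a bigram model, in which the next word depends only on the one before it. The sketch below estimates those probabilities by counting, with no smoothing. The `<s>` and `</s>` boundary markers, the function names, and the two training sentences are assumptions made for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences):
    """Count how often each word follows each previous word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_prob(counts, prev, nxt):
    """Unsmoothed estimate of P(nxt | prev); 0.0 if prev was never seen."""
    total = sum(counts[prev].values())
    return counts[prev][nxt] / total if total else 0.0


model = train_bigrams(["the kids have kids", "the people have kids"])
print(next_word_prob(model, "the", "kids"))   # "the" is followed by "kids" half the time
print(next_word_prob(model, "have", "kids"))
```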

Parsing algorithms have a similar structure, but their prior context includes whole chunks of the tree. For example, "the young lady" might be considered a single unit. Parsing algorithms typically have a lot more options to consider, i.e. a lot of partly-built trees. This forces them to use beam search, i.e. keeping a fixed number of hypotheses with the best rating. Newer methods also try to share tree structure among competing alternatives (like dynamic programming) so they can store more hypotheses and avoid duplicative work.
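Here is a minimal, generic sketch of beam search. To stay short it extends tag sequences rather than partly-built trees, but the pruning idea is the same: after each step, keep only the `beam_width` best-scoring hypotheses. The function signature, the toy log-scores, and the tag options are all invented for illustration.

```python
import heapq


def beam_search(start, expand, is_complete, beam_width):
    """Return the best (score, state) found, or None if the beam empties.

    expand(state) yields (score_increment, next_state) pairs.
    Scores are additive (e.g. log probabilities); higher is better.
    """
    beam = [(0.0, start)]
    while beam and not all(is_complete(s) for _, s in beam):
        candidates = []
        for score, state in beam:
            if is_complete(state):
                candidates.append((score, state))  # carry finished hypotheses forward
                continue
            for delta, nxt in expand(state):
                candidates.append((score + delta, nxt))
        # Prune: keep only the beam_width highest-scoring hypotheses.
        beam = heapq.nlargest(beam_width, candidates, key=lambda c: c[0])
    return max(beam, key=lambda c: c[0]) if beam else None


# Toy tag options (with made-up log-scores) for "the young lady".
OPTIONS = [
    [(-0.1, "DT")],
    [(-0.4, "JJ"), (-1.2, "NN")],
    [(-0.2, "NN"), (-2.0, "VB")],
]


def expand(state):
    for delta, tag in OPTIONS[len(state)]:
        yield delta, state + (tag,)


result = beam_search((), expand, lambda s: len(s) == len(OPTIONS), beam_width=2)
print(result)
```

A narrower beam runs faster but may discard a hypothesis that would have turned out best later, which is the usual trade-off with this method.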

Representation of meaning is less well understood, so many working methods use shallow representations based on the bag of words model (see our discussion of naive Bayes) or local groupings of words (e.g. noun phrases). Applications that can work well with limited understanding include

Very few systems attempt to understand sophisticated constructions involving quantifiers ("How many arrows didn't hit the target?") or relative clauses (see the Penn treebank parse example above). Negation is more difficult than it looks. For example, a Google query for "Africa, not francophone" returns information on French-speaking parts of Africa. And this circular translation example shows Google converting "oops on the X" to "try X," i.e. reversing the polarity of the advice.

Three types of shallow semantic analysis have proved useful and almost within current capabilities:

Semantic role labelling involves deciding how the main noun phrases in a clause relate to the verb. For example, in "John drove the car," "John" is the subject/agent and "the car" is the object being driven. These relationships aren't always obvious, e.g. who is eating who in a "truck eating bridge"? (Google it.)

Right now, the most popular representation for word classes is a "word embedding." Word embeddings give each word a unique location in a high dimensional Euclidean space, set up so that similar words are close together. We'll see the details later. A popular algorithm is word2vec.
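To give a feel for "similar words are close together," the sketch below looks up a word's nearest neighbor by Euclidean distance. The three-dimensional vectors are made up by hand for illustration. Real embeddings (e.g. from word2vec) are learned from data and typically have hundreds of dimensions.

```python
import math

# Hand-made toy vectors (NOT learned embeddings).
TOY_EMBEDDINGS = {
    "car": (0.9, 0.1, 0.0),
    "truck": (0.8, 0.2, 0.1),
    "outfit": (0.1, 0.9, 0.2),
    "street": (0.2, 0.1, 0.9),
}


def nearest(word, embeddings=TOY_EMBEDDINGS):
    """Return the other word whose vector is closest to `word`'s vector."""
    target = embeddings[word]
    others = (w for w in embeddings if w != word)
    return min(others, key=lambda w: math.dist(target, embeddings[w]))


print(nearest("car"))
```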

Running text contains a number of "named entities," i.e. nouns, pronouns, and noun phrases referring to people, organizations, and places. Co-reference resolution tries to identify which named entities refer to the same thing. For example, in this text from Wikipedia, we have identified three entities as referring to Michelle Obama, two as Barack Obama, and three as places that are neither of them. One source of difficulty is items such as the last "Obama," which looks superficially like it could be either of them.

[Michelle LaVaughn Robinson Obama] (born January 17, 1964) is an American lawyer, university administrator, and writer who served as the [First Lady of the United States] from 2009 to 2017. She is married to the [44th U.S. President], [Barack Obama], and was the first African-American First Lady. Raised on the South Side of [Chicago, Illinois], [Obama] is a graduate of [Princeton University] and [Harvard Law School].

Successes and faceplants from experimental chatbots.