Natural language and speech processing has three main goals:

Generation is somewhat easier than understanding, but still hard to do well.

A "shallow" system converts fairly directly between its input and output, e.g. a translation system that transforms phrases into new phrases without much understanding of what the input means. A "deep" system uses a high-level representation (e.g. similar to the representations we saw in classical planning) as an intermediate step between its input and output. There is no hard-and-fast boundary between the two types of design. Both approaches can be useful in an appropriate context.

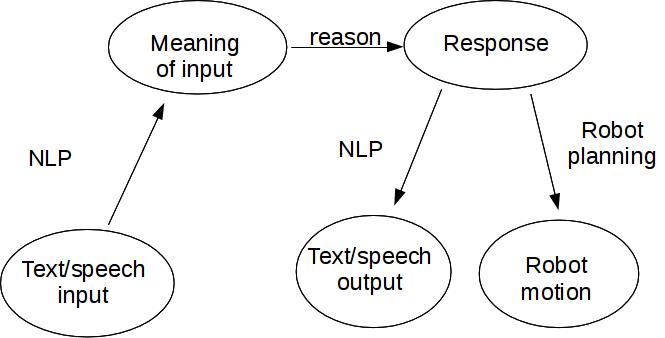

A deep system

Before we dive into specific natural language tasks, let's look at some end-to-end systems that have been constructed. These work with varying degrees of generality and robustness.

Early systems: Interactive systems have been around since the early days of AI and computer games. For example:

These older systems used simplified text input. The computer's output seems to be sophisticated, but actually involves a limited set of generation rules stringing together canned text. Most of the sophistication is in the backend world model. This enables the systems to carry on a coherent dialog.

These systems also depend on social engineering: teaching the user what the system can and cannot understand. People are very good at adapting to a system with limited, but consistent, linguistic skills.

Speech or natural-language-based HCI: For example, speech-based airline reservations (a task that was popular historically in AI) or utility company help lines. Google had a recent demo of a computer assistant making a restaurant reservation by phone. There have been demo systems for tutoring, e.g. in physics.

Many of these systems depend on the fact that people will adapt their language when they know they are talking to a computer. It's often critical that the computer's responses not sound too sophisticated, so that the human doesn't overestimate its capabilities. (This may have happened to you when attempting a foreign language, if you are good at mimicking pronunciation.) These systems often steer humans towards using certain words. E.g. when the computer suggests several alternatives ("Do you want to report an outage? pay a bill?") the human is likely to use similar words in their response.

Dialog coherency is still a major challenge for current systems. For example, you'll get a sensible answer if you ask Google Home a question like "How many calories in a banana?" However, it can't understand the follow-on "How about an orange?" because it processes each query separately.
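The difference between processing each query separately and carrying context from one turn to the next can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any commercial assistant works: the regular-expression patterns, the query "frame" dictionary, and the names `interpret` and `StatefulDialog` are all hypothetical.

```python
import re
from typing import Optional

# Hypothetical patterns for a toy assistant; a real system would use a parser.
FULL_QUERY = re.compile(r"how many (\w+) (?:are )?in an? (\w+)\??$", re.IGNORECASE)
FOLLOW_UP = re.compile(r"(?:how|what) about an? (\w+)\??$", re.IGNORECASE)


def interpret(utterance: str, context: Optional[dict]) -> Optional[dict]:
    """Map an utterance to a query frame, borrowing from the previous frame if needed."""
    text = utterance.strip()
    m = FULL_QUERY.match(text)
    if m:
        return {"attribute": m.group(1).lower(), "item": m.group(2).lower()}
    m = FOLLOW_UP.match(text)
    if m and context is not None:
        # Elliptical follow-up: keep the old attribute, replace only the item.
        return {**context, "item": m.group(1).lower()}
    return None  # A follow-up cannot be interpreted without context.


class StatefulDialog:
    """Remembers the last successfully interpreted frame across turns."""

    def __init__(self) -> None:
        self.context: Optional[dict] = None

    def ask(self, utterance: str) -> Optional[dict]:
        frame = interpret(utterance, self.context)
        if frame is not None:
            self.context = frame
        return frame
```

Calling `interpret("How about an orange?", None)` models a system that treats every query in isolation: it returns `None`. A `StatefulDialog` that has just seen the banana question instead resolves the follow-up to `{"attribute": "calories", "item": "orange"}`.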

If the AI system is capable of relatively fluent output (possible in a limited domain), the user may mistakenly assume it understands more than it does. Many of us have had this problem when travelling in a foreign country: a simple question, pronounced well, may be answered with a flood of fluent language that can't be understood. Designers of AI systems often include cues to make sure humans understand that they're dealing with a computer, so that they will make appropriate allowances for what it's capable of doing.

Answering questions, providing information: For example, "How do I make zucchini kimchi?" "Are tomatoes fruits?" Normally (e.g. Google search) this uses very shallow processing: find documents that seem to be relevant and show extracts to the user. These tools work well primarily because of the massive amount of data they have scraped off the web. Deeper processing has usually been limited to focused domains, e.g. Google's ability to summarize information for a business (address, opening hours, etc).
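The shallow "find relevant text and show an extract" strategy can be sketched as pure keyword overlap. This is a minimal illustration, not how any real search engine ranks results: the stop-word list, the sentence splitter, and the function names are assumptions made for this example.

```python
import re

# Assumed, tiny stop-word list; real systems use much larger ones.
STOPWORDS = {"a", "an", "the", "do", "i", "how", "are", "is", "to", "of", "in", "what"}


def tokens(text: str) -> list[str]:
    """Lowercase word tokens with common function words removed."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS]


def best_snippet(question: str, documents: list[str]) -> str:
    """Return the sentence sharing the most content words with the question.

    This is pure keyword matching: nothing here tries to understand what
    the question or the documents mean. Returns "" if nothing overlaps.
    """
    q = set(tokens(question))
    best, best_score = "", 0
    for doc in documents:
        for sentence in re.split(r"(?<=[.!?])\s+", doc):
            score = len(q & set(tokens(sentence)))
            if score > best_score:
                best, best_score = sentence, score
    return best
```

With two toy documents, "Tomatoes are botanically fruits. They are often cooked as vegetables." and "Kimchi is a fermented dish. Zucchini kimchi uses sliced zucchini and chili.", the question "Are tomatoes fruits?" retrieves "Tomatoes are botanically fruits." As in the text, the quality comes from having lots of relevant text to match against, not from understanding.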

It's harder than you think to model human question answering, i.e. understand the meaning of the question, find the answer in your memorized knowledge base, and then synthesize the answer text from a representation of its meaning. This is mostly confined to pilot systems, e.g. the ILEX system for providing information about museum exhibits. (original paper link)
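The three stages (understand, look up, synthesize) can be shown on a deliberately tiny scale. This is a hypothetical sketch, not how ILEX worked: the one-pattern parser, the crude singularizer, and the two-fact knowledge base `KB` are all assumptions for illustration. Note that the answer text is generated from the meaning representation rather than copied from the question.

```python
import re
from typing import Optional

# Toy knowledge base of (entity, relation) -> value facts (assumed for illustration).
KB = {
    ("tomato", "is_a"): "fruit",
    ("carrot", "is_a"): "vegetable",
}


def singular(word: str) -> str:
    """Crude English singularization, good enough for this toy example only."""
    if word.endswith("oes"):
        return word[:-2]
    if word.endswith("s"):
        return word[:-1]
    return word


def understand(question: str) -> Optional[tuple[str, str, str]]:
    """Stage 1: parse 'Are Xs Ys?' into the meaning representation (X, 'is_a', Y)."""
    m = re.fullmatch(r"are (\w+) (\w+)\?", question.strip().lower())
    if m is None:
        return None
    return (singular(m.group(1)), "is_a", singular(m.group(2)))


def with_article(noun: str) -> str:
    return ("an " if noun[0] in "aeiou" else "a ") + noun


def generate(meaning: tuple[str, str, str], known: Optional[str]) -> str:
    """Stage 3: synthesize answer text from the meaning plus the retrieved fact."""
    subject, _, category = meaning
    if known is None:
        return "I don't know."  # Admit ignorance instead of bluffing.
    if known == category:
        return f"Yes, {with_article(subject)} is {with_article(category)}."
    return f"No, {with_article(subject)} is {with_article(known)}, not {with_article(category)}."


def answer(question: str) -> str:
    meaning = understand(question)
    if meaning is None:
        return "Sorry, I can't parse that question."
    subject, relation, _ = meaning
    return generate(meaning, KB.get((subject, relation)))  # Stage 2: look up
```

Here `answer("Are tomatoes fruits?")` yields "Yes, a tomato is a fruit." while `answer("Are carrots fruits?")` yields "No, a carrot is a vegetable, not a fruit." Every stage is brittle; scaling each one up is what makes deep question answering hard.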

Transcription/captioning: If you saw the live transcripts of Obama's 2018 speech at UIUC (probably a human/computer hybrid transcription), you'll know that there is still a significant error rate. Highly-accurate systems (e.g. dictation) depend on knowing a lot about the speaker (how they normally pronounce words) and/or their acoustic environment (e.g. background noise) and/or what they will be talking about (e.g. phone help lines).

Evaluating/correcting: Everyone uses spelling correction (largely a solved problem). Useful upgrades (which often work) include detecting grammar errors and adding vowels or diacritics in languages (e.g. Arabic) where they are often omitted. Cutting-edge research problems involve automatic scoring of essay answers on standardized tests. Speech recognition has been used for evaluating English fluency and for children learning to read (by reading aloud). Success depends on very strong expectations about what the person will say.
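A basic building block of spelling correction is edit distance: propose the dictionary word reachable with the fewest single-character insertions, deletions, or substitutions. The sketch below uses the standard Levenshtein dynamic program; the tiny dictionary and the alphabetical tie-breaking are assumptions for illustration, and practical correctors also weigh how common each candidate word is and the surrounding context.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum insertions, deletions, and substitutions."""
    prev = list(range(len(b) + 1))  # Distances from "" to each prefix of b.
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(
                prev[j] + 1,               # delete ca
                cur[j - 1] + 1,            # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free if equal)
            ))
        prev = cur
    return prev[-1]


def correct(word: str, dictionary: set[str]) -> str:
    """Return word if known, else the closest dictionary word (ties: alphabetical)."""
    if word in dictionary:
        return word
    return min(sorted(dictionary), key=lambda w: edit_distance(word, w))
```

For example, `correct("reponse", {"response", "responsive", "reason"})` returns "response", which is one insertion away.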

Translation: The input/output is usually text, but occasionally speech. Methods are often quite shallow, with just enough deep processing to handle changes in word order between languages.
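"Shallow lookup plus just enough structure to fix word order" can be illustrated with a toy English-to-French translator. This is a hypothetical sketch, not how Google Translate works: the six-word `LEXICON` and its part-of-speech tags are assumptions, only masculine nouns are included so that gender agreement can be ignored, and the single reordering rule (English adjective-noun becomes French noun-adjective) is a simplification, since some French adjectives do precede the noun.

```python
# Hypothetical toy lexicon: English word -> (French word, part-of-speech tag).
LEXICON = {
    "the": ("le", "DET"),
    "black": ("noir", "ADJ"),
    "green": ("vert", "ADJ"),
    "cat": ("chat", "NOUN"),
    "book": ("livre", "NOUN"),
    "sees": ("voit", "VERB"),
}


def translate(sentence: str) -> str:
    # Shallow step: word-by-word lookup; unknown words pass through untranslated.
    tagged = [LEXICON.get(w, (w, "UNK")) for w in sentence.lower().split()]
    out = []
    i = 0
    while i < len(tagged):
        # Minimal structural step: English ADJ NOUN -> French NOUN ADJ.
        if i + 1 < len(tagged) and tagged[i][1] == "ADJ" and tagged[i + 1][1] == "NOUN":
            out += [tagged[i + 1][0], tagged[i][0]]
            i += 2
        else:
            out.append(tagged[i][0])
            i += 1
    return " ".join(out)
```

`translate("The black cat sees the green book")` gives "le chat noir voit le livre vert". Passing unknown words through unchanged mirrors the failure mode discussed next: the system has no idea that it is out of its depth.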

Google Translate is impressive, but can also fail catastrophically. A classic test is a circular translation: X to Y and then back to X. Google Translate can still be made to fail this way. We pick somewhat uncommon topics (making kimchi, Brexit) and then

A serious issue with current AI systems is that they have no idea when they are confused. A human is more likely to recognize that they don't know the answer and say so, rather than bluffing.

Summarizing information: Combining and selecting text from several stories to produce a single short abstract. The user may read just the abstract, or decide to drill down and read some or all of the full stories. Google News is a good example of a robust summarization system. These systems make effective use of shallow methods, depending on the human to finish the job of understanding.
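A classic shallow method is extractive summarization: score each sentence by how frequent its words are across all the stories, then keep the top few sentences in their original order. The sketch below is a minimal frequency-based version and is not Google News's method; the stop-word list, the averaging score, and the function names are assumptions.

```python
import re
from collections import Counter

# Assumed, tiny stop-word list; real systems use much longer ones.
STOPWORDS = {"the", "a", "an", "in", "on", "by", "was", "is", "of", "and", "to"}


def content_words(sentence: str) -> list[str]:
    return [w for w in re.findall(r"[a-z]+", sentence.lower()) if w not in STOPWORDS]


def summarize(stories: list[str], k: int = 2) -> list[str]:
    """Pick the k sentences whose words are most frequent across all stories."""
    sentences = [s for story in stories
                 for s in re.split(r"(?<=[.!?])\s+", story.strip()) if s]
    freq = Counter(w for s in sentences for w in content_words(s))

    def score(s: str) -> float:
        words = content_words(s)
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    top = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)[:k]
    return [sentences[i] for i in sorted(top)]  # Keep original story order.
```

On three toy stories about a newly approved bridge and a bakery opening, this picks the two bridge-approval sentences, since "bridge", "council", and "approved" recur across stories. It also shows the limits of shallow methods: those two sentences say nearly the same thing, and a real summarizer would also try to remove such redundancy.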

We can divide natural language processing into two major areas:

A couple of useful definitions:

Text input: two cases

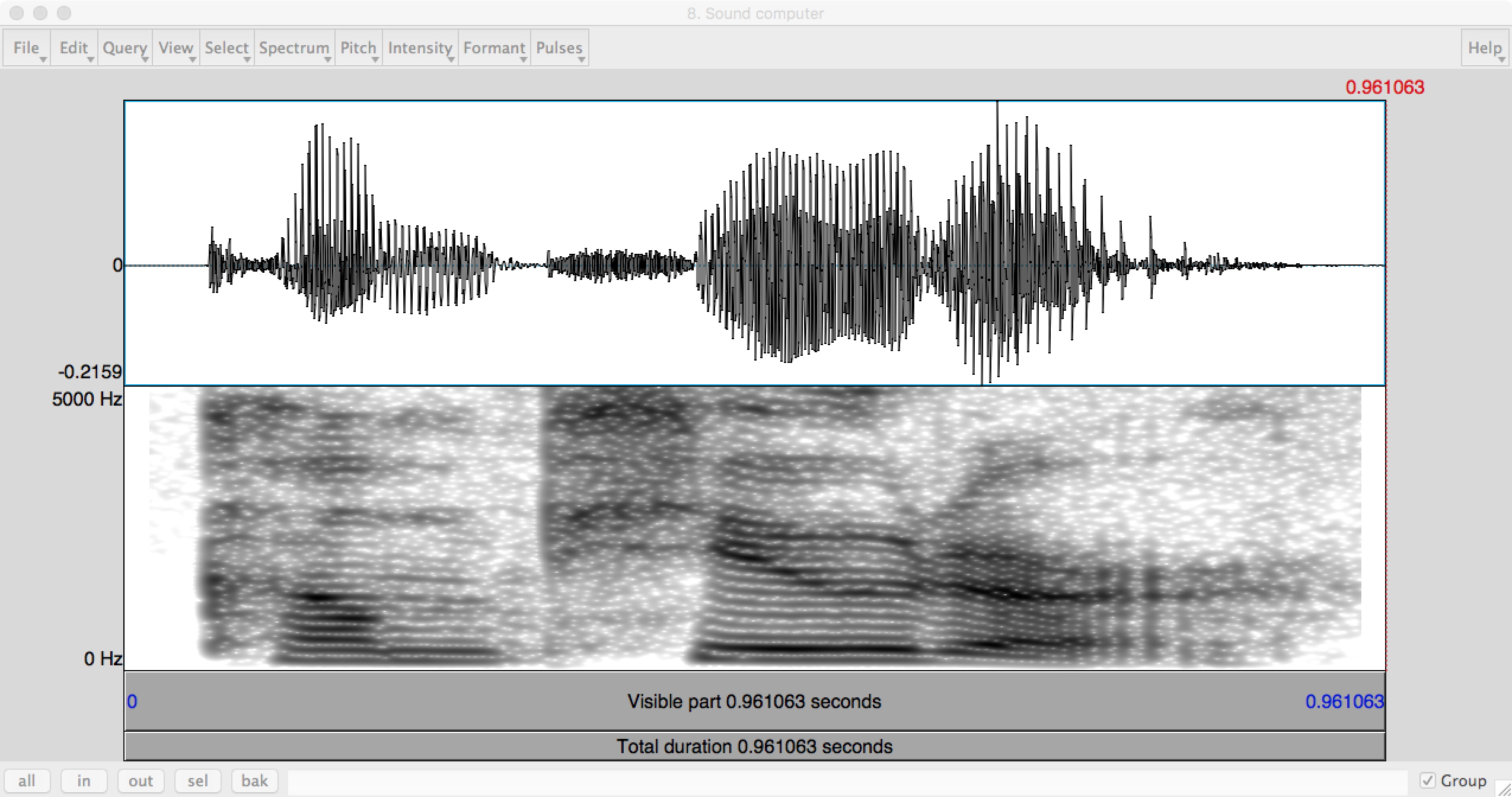

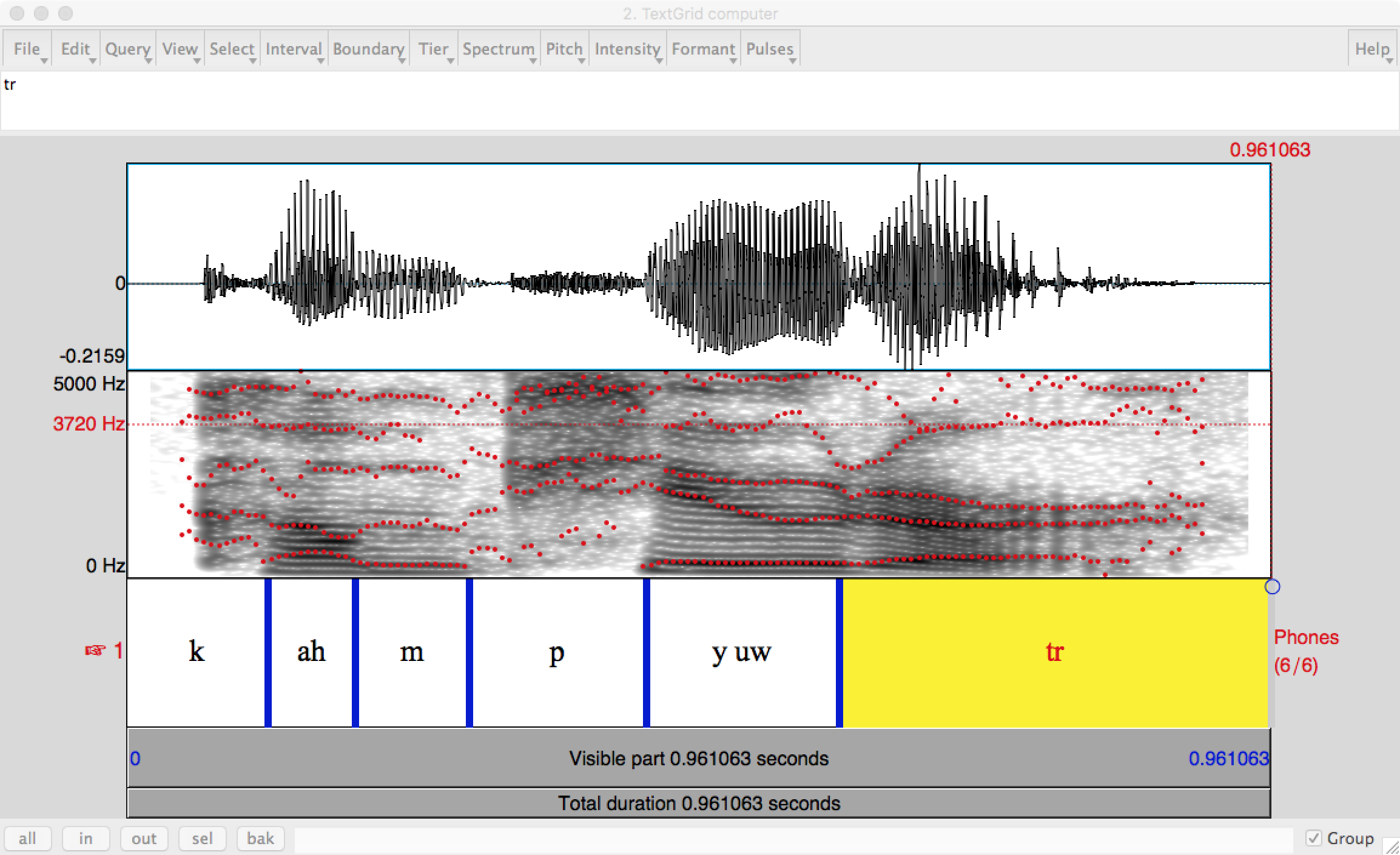

The first "speech recognition" layer of processing turns speech (e.g. the waveform at the top of this page) into a sequence of phones (basic units of sound). We first process the waveform to bring out features important for recognition. The spectrogram (bottom part of the figure) shows the energy at each frequency as a function of time, creating a human-friendly picture of the signal. Computer programs use something similar, but further processed into a small set of numerical features.
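A spectrogram can be computed by cutting the signal into short overlapping frames, tapering each frame with a window, and taking the energy of its discrete Fourier transform. The sketch below does this in plain Python with a naive DFT for clarity; the frame length, hop size, and Hann window are illustrative choices rather than values from any particular recognizer, and production code would use an FFT library and typically go on to compute compact features such as mel-frequency cepstral coefficients.

```python
import cmath
import math


def frame_spectrum(frame: list[float]) -> list[float]:
    """Magnitude of the discrete Fourier transform for bins 0..N/2 (naive O(N^2))."""
    n = len(frame)
    return [abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
            for k in range(n // 2 + 1)]


def spectrogram(signal: list[float], frame_len: int = 64, hop: int = 32) -> list[list[float]]:
    """One row per time step, one column per frequency bin, values are energies."""
    # Hann window tapers each frame's edges to reduce spectral leakage.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * t / (frame_len - 1)) for t in range(frame_len)]
    rows = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        chunk = [signal[start + t] * window[t] for t in range(frame_len)]
        rows.append([m * m for m in frame_spectrum(chunk)])  # energy = magnitude squared
    return rows
```

At an assumed sampling rate of 8000 Hz, a 64-sample frame gives frequency bins 125 Hz apart, so a pure 1000 Hz tone puts its peak energy in bin 8 of every row. A vowel would instead show several such peaks (the formants described next).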

Each phone shows up in characteristic ways in the spectrogram. Stop consonants (t, p, ...) create a brief silence. Fricatives (e.g. s) have high-frequency random noise. Vowels and semi-vowels (e.g. n, r) have strong bands ("formants") at a small set of frequencies. The location of these bands and their changes over time tell you which vowel or semi-vowel it is.
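Two of these cues can be approximated without a full spectrum: frame energy separates silence (such as a stop closure) from sound, and zero-crossing rate is a cheap proxy for high-frequency noise (fricatives cross zero far more often than vowels). The sketch below is a textbook-style heuristic, not a real phone recognizer; the thresholds `silence_energy` and `noisy_zcr` are hypothetical values chosen for signals scaled to roughly [-1, 1], and it cannot tell vowels apart, which requires the formant locations.

```python
import math


def frame_features(frame: list[float]) -> tuple[float, float]:
    """Mean energy and zero-crossing rate (crossings per sample pair) of a frame."""
    energy = sum(x * x for x in frame) / len(frame)
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return energy, crossings / (len(frame) - 1)


def rough_class(frame: list[float], silence_energy: float = 1e-4, noisy_zcr: float = 0.3) -> str:
    """Very rough phone class from energy and zero-crossing rate (hypothetical thresholds)."""
    energy, zcr = frame_features(frame)
    if energy < silence_energy:
        return "silence"         # e.g. the closure of a stop consonant
    if zcr > noisy_zcr:
        return "fricative-like"  # high-frequency, noise-like energy
    return "vowel-like"          # strong low-frequency periodic energy
```

A 200 Hz sine wave sampled at 8000 Hz crosses zero only about 5% of the time and is labeled vowel-like, while a signal that flips sign every sample is labeled fricative-like.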

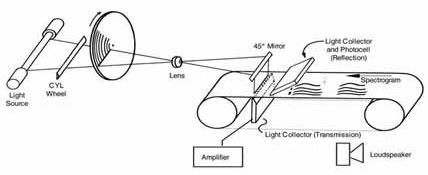

Recognition is harder than generation. However, generation is not as easy as you might think. Very early speech synthesis (1940s, Haskins Lab) was done by painting pictures of formants onto a sheet of plastic and using a physical transducer called the Pattern Playback to convert them into sounds.

Synthesis moved to computers once these became usable. One technique (formant synthesis) reconstructs sounds from formants and/or a model of the vocal tract. Another technique (concatenative synthesis) splices together short samples of actual speech to form extended utterances.
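The splicing step of concatenative synthesis can be sketched as joining recorded units end to end with a short linear crossfade, so that the joins don't produce audible clicks. This is only the joining step, under the assumption that suitable units have already been chosen; real systems also select units (often diphones) from a large recorded inventory and adjust pitch and duration, e.g. with PSOLA. The function name and the `overlap` parameter are illustrative.

```python
def crossfade_concat(units: list[list[float]], overlap: int) -> list[float]:
    """Splice recorded units end to end, linearly blending `overlap` samples at each join."""
    out = list(units[0])
    for unit in units[1:]:
        n = min(overlap, len(out), len(unit))
        for i in range(n):
            w = (i + 1) / (n + 1)  # Weight of the incoming unit rises across the overlap.
            k = len(out) - n + i
            out[k] = (1 - w) * out[k] + w * unit[i]
        out.extend(unit[n:])
    return out
```

Joining a unit of ten 1.0 samples to a unit of ten 0.0 samples with `overlap=4` produces 16 samples that ramp down through 0.8, 0.6, 0.4, 0.2 instead of jumping abruptly from 1.0 to 0.0.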